The untold tale of Bonsai.

In the 1990s there was a flurry of interest and development in digital compositing. Many VFX studios had adopted tools like Quantel’s Harry, Kodak’s Cineon, Wavefront’s Composer or Discreet Logic’s Flame, while a number of node-based solutions, such as Digital Domain’s (later Foundry’s) Nuke and Nothing Real’s (later Apple’s) Shake, also emerged in this era.

Meanwhile, some studios also developed proprietary compositing tools, and one of those studios was Sony Pictures Imageworks. Its tool was called Bonsai and it came to the fore during production on the visual effects for Robert Zemeckis’ Cast Away, released two decades ago this month. The tool would continue to be used for several years at Imageworks.

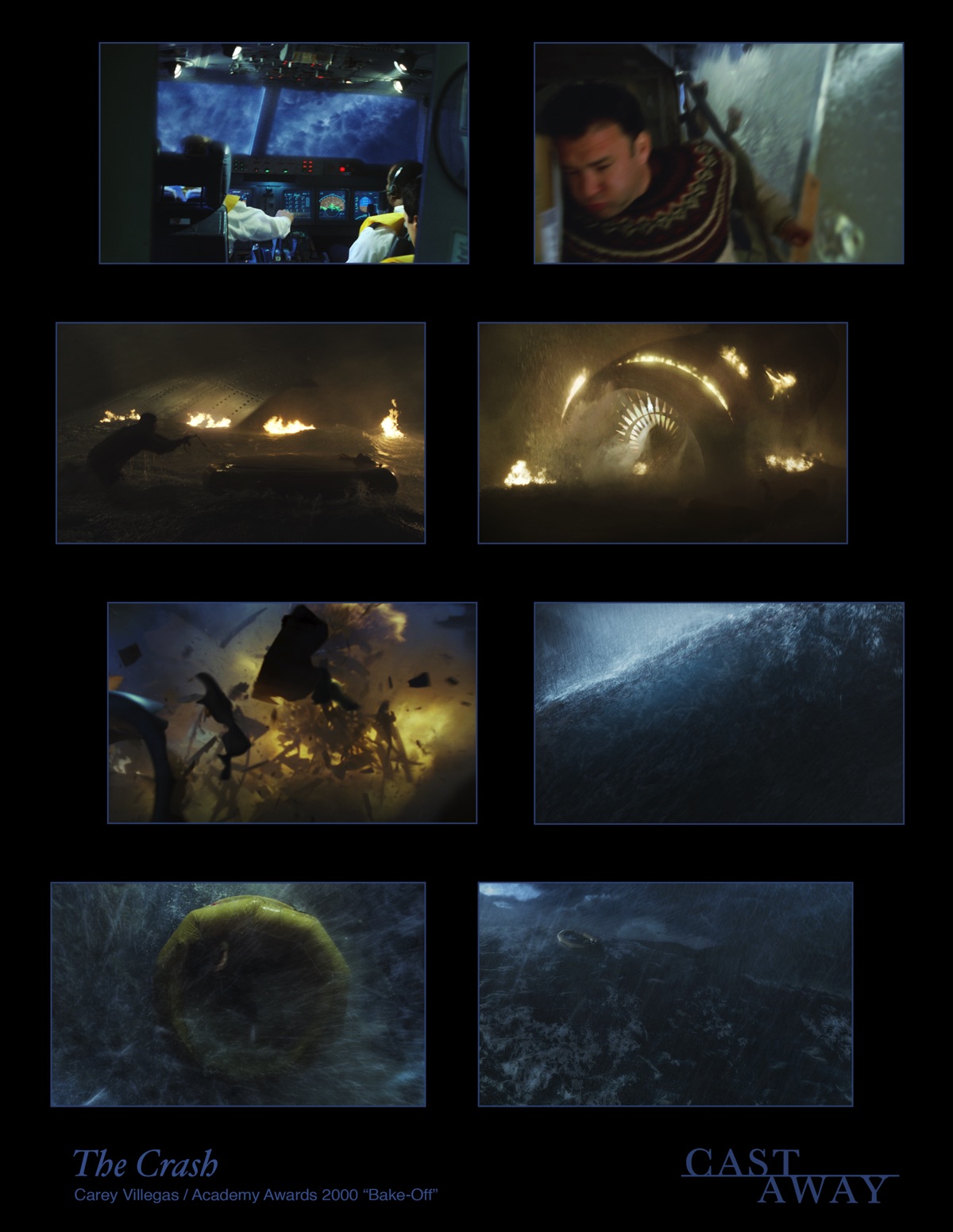

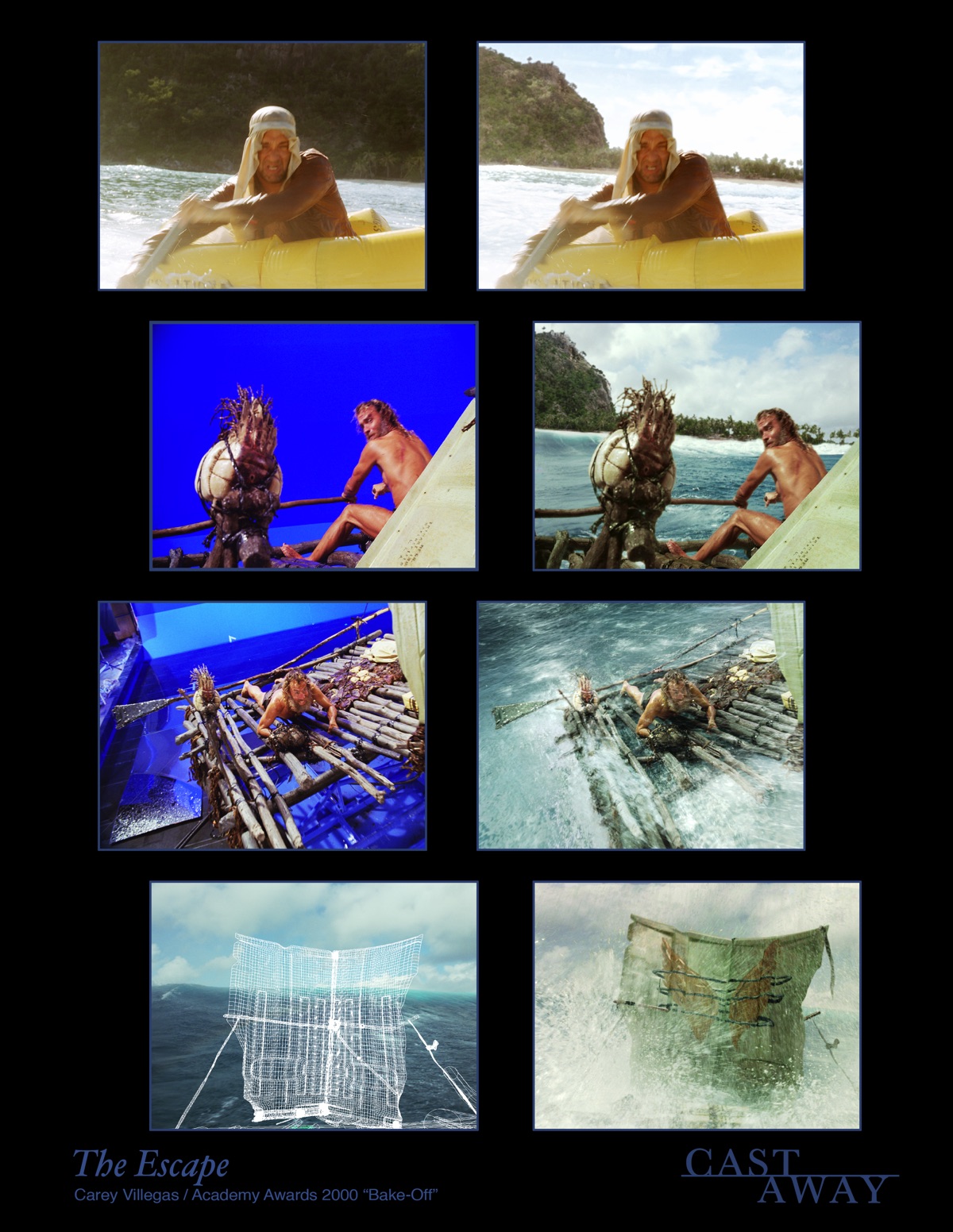

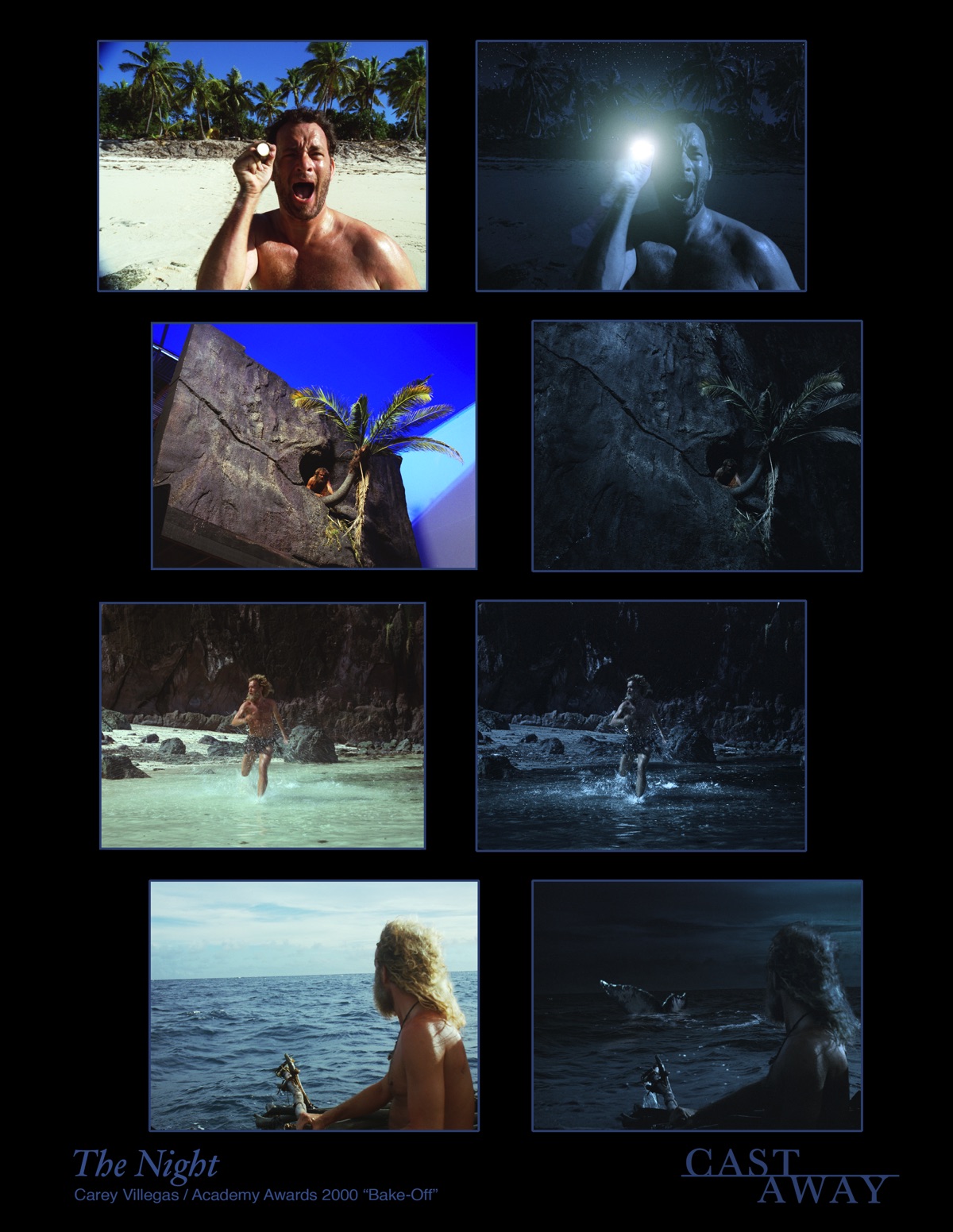

To find out more about this time in VFX compositing history, I asked Carey Villegas—who moved from Digital Domain to Imageworks to work on Cast Away and ultimately spearheaded the Bonsai project—to break down how the compositor came to be. Villegas was co-visual effects supervisor with Ken Ralston on Cast Away, and here he explains Bonsai’s history, the work of Suki Samra that kickstarted the compositor, how it was also part of What Lies Beneath, and what he says set Bonsai apart from other tools at the time.

Before Bonsai

Carey Villegas: After leaving Digital Domain in 1999 for Imageworks with Rob Legato, I put a team together to build the new compositor at Sony (Bonsai). Just prior, while at DD, I was working with Bill Spitzak and Jonathan Egstad on a major redo of Nuke–basically a ground-up rewrite of Nuke 2. We were putting together the plan for the architecture of what would become Nuke 3. On Titanic, I literally broke Nuke on ‘TD35’ (the ‘king of the world’ shot), having to composite it with numerous scripts and band-aid patches. It was clear that Nuke was long in the tooth and needed a major upgrade. So when I arrived at Imageworks, I had already spent many years thinking about the next steps for a ‘node-based’ compositor, and about how I would improve it and add to its overall functionality.

In 1999, Shake’s toolset, and especially its user interface, was still in its infancy at Nothing Real, and it had a long way to go to be a complete product. Expensive Flame and Inferno systems were only available in very small quantities; you’d be fortunate to have a few for a high-end project. Flames were also very limited for film work, with only 8 bits of color depth (until a 12-bit option came later); they didn’t allow for batch processing, and overall they weren’t very ‘pipeline’ friendly. Therefore, besides Cineon, most VFX facilities like Imageworks relied on Composer for feature film compositing.

And although Imageworks had invested hundreds of thousands of dollars in Composer licenses–and even more time and energy into internal plug-in and pipeline development–Composer just didn’t cut it. Besides being extremely slow and clunky, it had a very limited toolset and did not allow a digital compositor to truly finesse a shot. Unless they were also Flame or Quantel artists, most compositors of the time did not know what they were missing, as they had very few compositing systems against which to compare its performance. Outside of Nuke at Digital Domain, no other tool of its kind matched Flame’s capabilities. And although Nuke mirrored much of Flame’s toolset (since it was designed to supplement Flame), it also lacked interactivity and speed.

Dual films: Cast Away and What Lies Beneath

The interesting part of Cast Away is the transition in Tom Hanks’ physical appearance. After his character has been stranded on an island for four years, his physique is naturally that of a different person, so Tom’s body had to change dramatically for the second part. To do that they asked, ‘How can we shoot the film and give Tom Hanks time to lose all this weight?’ So they thought, ‘What if we keep the film crew together and make another movie in between, and then we’ll transition right back onto Cast Away after Tom has slimmed down?’

So that’s what happened: the production shifted from Cast Away to filming What Lies Beneath with Bob Zemeckis and pretty much the same crew, same DP, same everyone, while giving Tom the time to lose weight. What Lies Beneath went through pre-production, production and partial post during that time, then the production crew moved back onto Cast Away part two. The majority of Cast Away part one had already been photographed with Ken Ralston before Rob Legato and I came to Imageworks, but no VFX work had begun yet, and pre-production was happening on What Lies Beneath [Rob Legato was VFX supervisor on the film, and Villegas was associate VFX supervisor]. Rob knew he had to concentrate on What Lies Beneath; he brought me along to help him, but that also freed me to focus on post-production for part one of Cast Away. We also brought in Richard Kidd as a digital effects supervisor.

When we arrived at Imageworks, many of the tools we were used to at Digital Domain didn’t exist. Besides lacking Nuke, Imageworks did not have a ‘pan-and-tile’ pipeline, which we used for pretty much everything at DD. The film color-timing pipeline was also very crude and needed a major upgrade–especially for a film like Cast Away, where the tropical island weather varied from setup to setup, requiring detailed color corrections between every shot. There were also many sequences on ocean water with frenetic camera movements. The compositing systems back then didn’t have 3D camera tracking, and the 2D tracking tools outside of Flame were minimal. Practically no blue or green screen was used on Cast Away either, meaning everything had to be rotoscoped. Although Imageworks had a handful of Flames, Composer was the main package used for compositing. Considering the complexity of the live-action work we were dealing with on Cast Away and What Lies Beneath, we really needed a more robust toolset, one that only existed in Flame and, to a lesser degree, in Nuke at the time.

Coming to Imageworks: the beginnings of Bonsai

And so, upon arriving at Imageworks, I did not yet know how we would composite shows with the complexities and unique one-off challenges that Cast Away and What Lies Beneath presented. After speaking with people at Imageworks, I heard about a software designer named Suki Samra who was leaving Imageworks to start a new ‘venture’. While at SPI, he had created the framework for a compositing system referred to as ‘Bonsai’–as in the tree! I immediately connected with Suki to find out more. After our first meeting, I realized that Bonsai was pretty much an incomplete attempt to create a tree- or node-based compositor. Basically, he had developed a graphics processing engine with a limited interface and a few basic color correction and keying tools. I saw its potential right away.

A bit more history here first: Imageworks had created its own compositing system before Bonsai. This command-line compositing system was called ‘ImgMake’ (Image Make), and it was used to process very basic graphics tasks like resizing to various image resolutions, simple color corrections, and sharpening for film-outs. The team that created ImgMake left Imageworks in 1996 to start Nothing Real, and in 1997 created the first version of Shake, a command-line 1.0 release.

With Bonsai, Suki had pitched an idea to another division of Sony outside of Imageworks, and they thought that they could create software commercially that would rival Discreet Logic’s Flame. Suki was going to further develop his graphics engine into a commercial package (this product was later developed under the name ‘Socratto’). Sony formed a separate software company, and Suki was no longer at Imageworks. And although Bonsai’s development was incomplete at that point, I realized its underlying graphics engine was a solid foundation on which we could build.

A quick look at Nuke

Now, what made Nuke so powerful, besides floating-point calculations and resolution independence, was its ability to do scan-line rendering–literally drawing one row of pixels at a time. Nuke also allowed a practically unlimited number of image viewers and had a completely open graphical interface–much like using shells in the Unix operating system. And although Nuke’s rendering was slow compared to Flame’s, what made it viable was that you could frame the viewer to encompass only the portion of the image you wanted to work on. So if you had, say, a close-up of someone’s head and face, you could resize your window to just one of the person’s eyes, and it would render only that 200-by-200-pixel area, one scan-line at a time.
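To make the idea concrete, here is a minimal Python sketch of viewer-limited scan-line rendering. It is not Nuke’s code: `render_viewer_scanlines` and the `shade(x, y)` callback (a stand-in for evaluating the whole compositing tree at one pixel) are hypothetical names, and each pixel is a single float for simplicity.

```python
from typing import Callable, Iterator


def render_viewer_scanlines(
    shade: Callable[[int, int], float],
    x0: int,
    y0: int,
    width: int,
    height: int,
) -> Iterator[tuple[int, list[float]]]:
    """Yield (row index, pixel values) for each scan-line inside the viewer window.

    `shade(x, y)` is a hypothetical stand-in for evaluating the whole compositing
    tree at one pixel. Pixels outside the window are never evaluated.
    """
    for y in range(y0, y0 + height):
        # Finish one complete row before moving on, so the UI could draw it immediately.
        row = [shade(x, y) for x in range(x0, x0 + width)]
        yield y, row


# Usage: frame a 200 x 200 window (say, around one eye) inside a larger plate.
evaluated: list[tuple[int, int]] = []


def shade(x: int, y: int) -> float:
    evaluated.append((x, y))  # record every pixel that actually gets computed
    return 0.5                # placeholder for the real per-pixel result


rows = list(render_viewer_scanlines(shade, x0=900, y0=600, width=200, height=200))
print(len(rows), len(evaluated))  # 200 40000
```

Because the function is a generator, each row is only computed when the caller asks for it, so a viewer could display rows as they arrive rather than waiting for the whole window.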

This worked much like a traditional region of interest, which is a bounding box you can frame around a portion of the image, except that here it was the entire viewer. Once a few lines had drawn in and you could see what you had adjusted, you could keep making modifications until you were satisfied with the results. You could then incrementally resize your viewer to frame more of the image, or the entire image, and it would continue to draw in what was missing line by line. So although Nuke rendered somewhat slowly, because it was only drawing a limited number of scan-lines rather than the whole image, it allowed you to really finesse areas of the composite, since you were only focusing on those pixels. And the entire time, you were working at ‘one-to-one’ pixel resolution and not at some ‘proxy’ resolution. Therefore, you could do really detailed work on that one aspect of the image.
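The “draw in what was missing” behavior can be sketched with a simple pixel cache. This is an assumption for illustration only: the interview doesn’t describe how Nuke cached results, and the `ViewerCache` class, its per-pixel dictionary and the `invalidate` step are hypothetical.

```python
from typing import Callable, Dict, Tuple


class ViewerCache:
    """Hypothetical cache of already-drawn pixels for the current node settings."""

    def __init__(self, shade: Callable[[int, int], float]) -> None:
        self.shade = shade  # stand-in for evaluating the compositing tree at one pixel
        self.pixels: Dict[Tuple[int, int], float] = {}

    def invalidate(self) -> None:
        # Changing a parameter (e.g. a color-correction value) makes every cached pixel stale.
        self.pixels.clear()

    def draw(self, x0: int, y0: int, width: int, height: int) -> int:
        """Fill in the viewer window row by row; return how many pixels were newly computed."""
        computed = 0
        for y in range(y0, y0 + height):
            for x in range(x0, x0 + width):
                if (x, y) not in self.pixels:
                    self.pixels[(x, y)] = self.shade(x, y)
                    computed += 1
        return computed


cache = ViewerCache(lambda x, y: 0.5)
print(cache.draw(0, 0, 200, 200))  # 40000: first look at a small window
print(cache.draw(0, 0, 400, 200))  # 40000: only the newly exposed right half is drawn
print(cache.draw(0, 0, 400, 200))  # 0: nothing is missing, so nothing is recomputed
cache.invalidate()
print(cache.draw(0, 0, 400, 200))  # 80000: after a tweak, the whole window is redrawn
```

A per-pixel dictionary keeps the sketch short; a real renderer would more plausibly cache whole rows or tiles.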

Other graphics packages, like Flame or Composer, buffered a whole frame at a time. Essentially, if it was a 2K frame, the software would have to render the entire frame. Every time you did a color correction or any other operation, it would have to internally calculate what that operation would do across all of the pixels. And then, depending on the number of graphics operations stacked on top of one another, after 30 seconds or more, the whole image would change in front of your eyes.
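The cost difference can be sketched as simple bookkeeping. In this hypothetical Python sketch, `apply_stack_full_frame` applies every stacked operation to every pixel of a buffered frame, and `pixel_ops` counts per-pixel evaluations. The 2048 x 1556 size for a 2K frame is an assumption (a common 2K full-aperture scan), and the counts say nothing about how fast any real system’s hardware was.

```python
from typing import Callable

Frame = list[list[float]]
Op = Callable[[float], float]


def apply_stack_full_frame(frame: Frame, ops: list[Op]) -> tuple[Frame, int]:
    """Apply each stacked operation to every pixel of the buffered frame, in order.

    Returns the final frame and how many per-pixel evaluations were performed.
    """
    evaluations = 0
    for op in ops:
        # Each operation produces a complete new frame buffer before the next one runs.
        frame = [[op(v) for v in row] for row in frame]
        evaluations += sum(len(row) for row in frame)
    return frame, evaluations


def pixel_ops(width: int, height: int, num_ops: int) -> int:
    """Per-pixel evaluations for num_ops stacked operations over a width x height area."""
    return width * height * num_ops


# Tiny 4 x 3 stand-in frame: a gain followed by a lift.
frame = [[0.5] * 4 for _ in range(3)]
result, n = apply_stack_full_frame(frame, [lambda v: v * 1.2, lambda v: v + 0.1])
print(n)  # 24: both operations touched all 12 pixels

# Same bookkeeping at film resolution (2048 x 1556 is an assumed 2K size).
print(pixel_ops(2048, 1556, 10))  # 31866880: ten operations over the whole frame
print(pixel_ops(200, 200, 10))    # 400000: the same ten over a 200 x 200 window
```

Under these assumptions, the framed window needs roughly 80 times fewer evaluations than the full frame for the same stack of operations.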

That’s what separated Nuke from the other systems: the ability to visualize the image a few scan-lines at a time. Composer didn’t allow that; plus, you could only work in either 8 bits or 16 bits, and it was always one or the other. By comparison, Nuke was floating-point and didn’t really care about the bit depth of each image, as the majority of its internal operations were calculated at 32 bits. And being able to work with arbitrary image resolutions, without having the entire system set to a fixed image format, also made Nuke special.
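Why floating point mattered can be shown with two gain operations that should cancel out. This is a minimal sketch under stated assumptions: `to_code`, `gain_int` and `gain_float` are hypothetical helpers, the integer path rounds and clamps after every operation (as a fixed 8- or 16-bit buffer must), and the float path keeps over-range intermediate values.

```python
def to_code(v: float, bits: int) -> int:
    """Quantize a normalized 0-1 value to an integer code value of the given bit depth, clamping."""
    max_code = (1 << bits) - 1
    return max(0, min(max_code, round(v * max_code)))


def gain_int(code: int, g: float, bits: int) -> int:
    """Integer pipeline: the result is rounded and clamped back into range after every operation."""
    max_code = (1 << bits) - 1
    return max(0, min(max_code, round(code * g)))


def gain_float(v: float, g: float) -> float:
    """Floating-point pipeline: no rounding to code values and no clamping between operations."""
    return v * g


v = 200 / 255  # a fairly bright pixel, normalized to 0-1

# Brighten by 2x, then darken by 0.5x: mathematically this should return the original value.
for bits in (8, 16):
    code = to_code(v, bits)
    out = gain_int(gain_int(code, 2.0, bits), 0.5, bits)
    print(bits, code, out)  # 8 200 128, then 16 51400 32768

flt = gain_float(gain_float(v, 2.0), 0.5)
print(to_code(flt, 8))  # 200: the over-range intermediate value (about 1.57) survived
```

Whatever the integer bit depth, the highlight is lost once an intermediate result exceeds the maximum code value; the float path recovers it.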

With Bonsai, we could do that and more, although it was implemented much differently. Bonsai would allow us to render tiles or blocks of pixels as opposed to single scan-lines. And we could choose to render the tiles either horizontally or vertically across the screen. So depending on the aspect ratio of the part of the image you were working on–you could optimize its rendering to better visualize changes and adjustments while compositing.

Conceptually, it’s similar to the block sizes used by compression algorithms in various codecs. You could choose to render with an 8-by-8 block of pixels, and it would draw an 8-by-8 block, followed by another 8-by-8 block of pixels next to it. Or you could choose tiles of any size for that matter, since the tile size was user-defined. Instead of a scan-line at a time or one pixel at a time, it would draw one tile at a time. And we could also decide the orientation of the blocks relative to one another, segmenting the image into either horizontal or vertical ‘slices’ of blocks.
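Here is a minimal sketch of user-defined tile sizes and slice orientation. `tile_order` is a hypothetical helper, not Bonsai’s actual code (which the interview doesn’t describe); it only computes the order in which tiles would be drawn, clipping edge tiles so they exactly cover the region.

```python
from typing import Iterator, Literal

Tile = tuple[int, int, int, int]  # (x, y, width, height)


def tile_order(
    width: int,
    height: int,
    tile_w: int,
    tile_h: int,
    orientation: Literal["horizontal", "vertical"] = "horizontal",
) -> Iterator[Tile]:
    """Yield tiles covering a width x height region in drawing order.

    "horizontal": fill each horizontal slice (a row of tiles) left to right, then move down.
    "vertical":   fill each vertical slice (a column of tiles) top to bottom, then move right.
    """
    xs = range(0, width, tile_w)
    ys = range(0, height, tile_h)
    if orientation == "horizontal":
        coords = ((x, y) for y in ys for x in xs)
    elif orientation == "vertical":
        coords = ((x, y) for x in xs for y in ys)
    else:
        raise ValueError(f"unknown orientation: {orientation!r}")
    for x, y in coords:
        # Clip tiles on the right and bottom edges to the region's bounds.
        yield x, y, min(tile_w, width - x), min(tile_h, height - y)


# A 20 x 10 region with 8 x 8 tiles, drawn in vertical slices.
print(list(tile_order(20, 10, 8, 8, "vertical"))[:3])  # [(0, 0, 8, 8), (0, 8, 8, 2), (8, 0, 8, 8)]
```

For a tall, narrow area of interest, vertical slices finish a full column of the region early; for a wide one, horizontal slices do the same across its width.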

Making a compositor

It was only with the ‘pull’ of someone like Rob Legato that we were able to push Bonsai into further development. At the time, Tim Sarnoff was the vice president of Imageworks, and with his help Rob and I initiated a meeting with Ken Williams–who aside from being co-founder of Sony Imageworks, was also executive vice president of Sony Pictures Entertainment and president of Sony’s Digital Studios Division. We requested that Suki be allowed to work with us for a limited time in order to get other software engineers up to speed on some of the underlying code and other things that he had created.

My pitch was: if you’re truly trying to create a commercial software package, what better way to market it than to say, ‘It was actually used for the first time on two high-profile feature films’? That was what caught the attention of the executives at Sony and allowed us to borrow Suki’s time to help take my concepts and ideas and implement them into something viable.

We then brought in a team of software programmers. The software project leader was Manson Jones, who was already at Imageworks, along with a number of other software engineers: Brian Hall, Bruce Navsky, Min-Zhi Shao, Rob Engle and Sam Richards, to name a handful. This was all happening at pretty much the same time–we were starting post on Cast Away part one and were in pre-production on What Lies Beneath, while simultaneously developing this compositing package!

Bonsai guinea pigs

Once we had some fundamental tools in place, we had to start compositing actual shots. I had a few guinea-pig compositors, people who had to work through the growing pains of trying to composite a shot with software that was full of quirks and very much still in development. I mean, literally you’re trying to create a software package and do work at the same time. To start things off, I brought in compositor Ethan Orsmby, whom I had previously worked with at Digital Domain, followed by Layne Friedman, Colin Drobnis, Bonjin Byun, Clint Colver, and Bob Peitzman, among others.

It was very difficult, and we got to the point where Imageworks wanted to pull the plug on the whole thing. I had to convince many executives to continue the development and promise that we were going to get the work done. It was a juggling act of the usual politics and the technical challenges typical for any software development process.

Eventually, I had plenty of die-hard Composer users on Cast Away, and of course they couldn’t believe how fast the package was compared to what they were used to. But then, at the same time, they wanted it to function like what they were used to. So you would have all these compositors complaining about how, ‘It’s not layer-based.’ They certainly liked the speed, but ultimately what they wanted was a ‘faster’ version of Composer. Node-based compositing is a different conceptual way of working. After a while, though, everyone got used to it. Especially once they realized just how powerful it was.
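The conceptual difference these Composer users ran into can be sketched in a few lines. In a layer stack, each operation feeds only the one above it; in a node graph, one node’s output can feed several downstream nodes. This hypothetical Python sketch (the `Node` class, `evaluate` function and node names are illustrative, and each “image” is a single float) branches one plate into both a key and a color correction, then merges the results, evaluating shared nodes only once.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Node:
    """One operation in a hypothetical node graph; `inputs` are its upstream nodes."""
    name: str  # must be unique, since results are cached by name
    fn: Callable[..., float]
    inputs: List["Node"] = field(default_factory=list)


def evaluate(node: Node, cache: Optional[Dict[str, float]] = None) -> float:
    """Evaluate upstream nodes first; a node shared by several branches is computed once."""
    if cache is None:
        cache = {}
    if node.name not in cache:
        args = [evaluate(upstream, cache) for upstream in node.inputs]
        cache[node.name] = node.fn(*args)
    return cache[node.name]


plate = Node("plate", lambda: 0.6)
matte = Node("matte", lambda p: 1.0 if p > 0.5 else 0.0, [plate])  # crude luminance key
graded = Node("graded", lambda p: p * 1.1, [plate])                 # color correction
bg = Node("bg", lambda: 0.2)
comp = Node("comp", lambda fg, b, m: fg * m + b * (1.0 - m), [graded, bg, matte])

results: Dict[str, float] = {}
print(evaluate(comp, results))
print(sorted(results))  # ['bg', 'comp', 'graded', 'matte', 'plate']
```

The `plate` node feeds two branches at once, which is the kind of reuse a single ordered layer stack doesn’t express directly.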

The VFX of Cast Away, and what lay ahead for Bonsai

As with most Robert Zemeckis films, Bob likes to incorporate many long, continuous and elaborate shots. The average VFX shot length for Cast Away was about 300 frames, and we had more than a dozen shots that were 2,000-plus frames long. In total, there were around 350 shots in the film, which by today’s standards is not a lot. But the visual effects add up to an hour’s worth of the film’s 144-minute runtime. In addition to Bonsai, we also used Flame for the compositing.

After some time a few people wondered, ‘Why does Carey get to decide for the whole company how this package works?’ So after a few years of my solely driving Bonsai’s creative development, Imageworks formed a series of committees, or ‘town councils’, to make group decisions on the future of the company’s many proprietary tools and software. Eventually, Bonsai was ported from Unix to Linux and continued as the company’s primary compositing system for all its feature films. But in all actuality, Bonsai’s continued development pretty much stalled after that, and it was primarily just ‘maintained’ from show to show.

As an aside, around 2004, a development team at Imageworks started to design the successor to SPI’s previous lighting and look development software, ‘Birps’. This new package was called ‘Katana’. By 2009 or so, most of the individual tools created for Bonsai had been moved into Katana. As a result, Katana eventually incorporated the same compositing tools that Bonsai had, except that Katana was a system designed from the ground up for ‘color and lighting’.

Up until Alice in Wonderland, for which we started pre-production in mid-2008, Bonsai was the primary compositor for the company. It was with Alice that I decided to bring Nuke into Imageworks. Although it still hadn’t been widely adopted outside of Digital Domain, Nuke was once again the strongest node-based compositing system in the industry. Rob Bredow, Imageworks’ former CTO, brokered the deal that secured the Nuke licenses our production team needed, and Katana eventually became a Foundry product. So for Alice in Wonderland, the most difficult live-action compositing tasks were accomplished in Nuke and Flame, and the rest of the compositing was done in Katana–along with all of the CG look development and color and lighting.

At the end of the day, Bonsai completely changed everything at Imageworks. Throughout its nine-or-so-year run, Bonsai was used to composite more than 50 feature films. For those first few years, because the shows I supervised were more or less funding Bonsai’s development, everything was under my direction in terms of how things would be approached, which gave it a very specific focus.
