Bashing the Bash — Replacing Shell Scripts with Python

Can we talk about that old hoodie you’re wearing? Yes, it’s got an obscure logo with a complex, personally significant story. Yes, it keeps the sun off your head. Holding the pockets together with duct tape doesn’t really seem to be optimal though. And there’s that hole in the elbow. And what’s that stain. If you were to wash it, would anything be left…

Let’s admit it, an application that looks like a free hoodie from a defunct web site doesn’t create a lot of confidence. How the application looks is important, just like with clothes. So lots of software offers a glitzy, fancy experience but we all know there’s more to software than what meets the eye. Under that exterior there are layers of processing the users don’t see. There are all manner of buttons, zippers, belts, and suspenders making sure things are holding together.

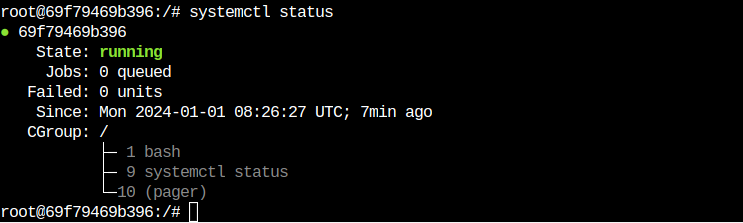

For instance, the technical operations folk (TechOps) have to start the server, confirm that it’s running, stop the server, maybe backup the data, maybe export or import data. In addition to operations, the development (DevOps) folks have a suite of tools for integration and deployment. This DevOps tooling is often sewn together with shell scripts to make sure that a merged branch in GitHub is turned into a deployable artifact.

That nice-looking outfit sure is held together from many separate parts.

Now, your hoodie may be the universal cover-up — you can wear it everywhere — in the same way that the shell is what holds a lot of software tools together. While it’s universal, it’s also one of the recurring DevOps/TechOps problems. It’s a problem because we often abuse the shell and treat it like a programming language.

Here are some concerns:

- The syntax can be obscure. We all get used to it, but that doesn’t make it good.

- It’s slow. While the speed of a shell script rarely matters, trying to use the shell like a programming language will waste system resources.

- We can often omit crucial features of a script. Checking the status of programs using $? can be accidentally left off, leading to inconsistent behavior.

- The shell language’s only data structure is the string. There are ways of decomposing strings into words. The expr program can convert strings to numbers to do arithmetic. The date program allows some date manipulations, but the rules can be obscure.

- Unit testing isn’t easy. There are some packages like Bats that can help with unit testing. A language like Python has a much more robust unit testing capability.

It’s not that the shell is broken. The shell isn’t a complete programming language. It doesn’t do everything we need.

Which Brings Us to Bash-Bashing

The point of bash-bashing is to reduce use of the shell. Without much real work, it’s easy to replace shell scripts with Python code. The revised code is easier to read and maintain, runs a little faster, and can have a proper unit test suite.

Because shell code is so common, I’ll provide some detailed examples of how to translate legacy shell scripts into Python. I’ll assume a little familiarity with Python. The examples will be in Python 3.6 and include features like pathlib and f-strings. If you want to follow along, consider creating a virtual environment or using conda.

The shell script examples are pure bash, and will run anywhere that bash runs. The design patterns apply to Windows; of course the syntax will be dramatically different.

It’s A Big World

Before I dive into code, I want to put some boundaries on the example. It’s tempting to provide examples of Git hooks, or Jenkins jobs, or UrbanCode Deploy.

I think these kinds of examples are little too specific; I want to look a little more broadly at shell scripting in general. I’d like to make the distinction between generic sportswear and the specific gear we might wear to go rock climbing. My code example is not tailored for a particular DevOps tool chain.

The shell can be used for a wide variety of things. I’m going to use an example that has a few common kinds of OS-level resource manipulations in it:

- Reading configuration files

- Killing (and creating) processes

- Doing date arithmetic

- Creating (and deleting) directories

- Running applications

- Managing files

There are also some higher-level considerations than managing OS resources. These considerations include conditional processing and iterating over objects. I’ll show an example of iterating over files, but scripts also iterate over processes or even lines in a file.

Shell scripts often manage network resources. This means accessing remote resources via curl and wget. Using these programs makes managing network resources look a lot like running applications and managing local files. Because of this, I won’t treat network resources as a distinct class of objects.

A Representative Script

I have a little shell script with some examples of different resource operations. This script manipulates some OS resources, and runs an external application. (Yes, the application is secretly in Python.)

This script seems to have four important steps:

- It kills a process. This involves reading a file to get a process id, then executing the ps and kill commands. The example.pid file was created by some other process and read by this script.If the file doesn’t have any content, there’s a logic path to handle that. What if the file doesn’t even exist?

- It creates a directory with a name based on the current date. This directory is used in subsequent steps.If the directory already exists, there’s an annoying message. Is this masking a problem?

- It runs an analytic app. There’s a confusing for loop that processes only one file from a sorted list of file names. I’ll dig into the nuances of that construct below. I’ve included it as representative of algorithms that can be simplified when using Python.

- Finally, it copies the output file to a second location, named current.txt. The copy operation is qualified by an if statement which checks to see if one file is newer before replacing another file.

I emphasize the phrase “seems to have” because shell scripts can have hard-to-discern side-effects. This specific example is explicit about the files and directories it creates and the process it kills. Generally, the original idea behind the shell is to make resource management abundantly clear. However, this ideal isn’t always met in practice.

You may like your hoodie. But it has duct tape holding it together.

An example of a shell obscurity is the way the current working directory is set. The cd command is clear enough, but in the presence of sub-shells using (), can make it difficult to discern a stack of nested shell invocations and how the working directory changes when the sub-shells exit.

Let’s rewrite this example into something that can be unit tested. I’ll start from the top and work my way down into the details.

Start at the Top

By “top” I mean the high-level summary of what the script does. This script appears to have four steps. Here’s some Python code that reflects the overall synopsis:

I’ve idealized the four steps as separate functions. This leaves a space to implement each function. I’ve tried to avoid making too many assumptions. As a practical matter, we often have a clearer picture of what a shell script does; this allows us to define the functions in a way that better fits the script’s intent.

From Configuration File to Process Management

One of the shell’s ickier features is that variables tend to be global. There are some exceptions and caveats, however, that lead to shell scripts that are broken or behave inconsistently. This means that environment variables like PID_FILE and PID are potential outputs from a step and potential inputs to a later step. It’s rarely clear.

Part of a rewrite means identifying the global variables which are used in other parts of a script. This can be difficult in complex scripts. In this example, it’s easy to check the code to be sure that these two variables are effectively local.

Another part of a rewrite means identifying things which are more like configuration parameters than simple variables. Things like literal directory and file names are obvious candidates for being treated as configuration parameters. There are other configurable items like search strings and wild-card patterns which may also need to be treated as part of an external configuration. I suggest taking it slow with the parameterization pass.

My advice is to get things to work first. Generalize them later.

Here’s an implementation of the kill_process() function:

I’ve replaced the shell’s idiom of read PID <$PID_FILE with code based on the Pythonic idiom of pid = pidfile_path.read_text(). The original shell script doesn’t have any documentation for the format of the file; we haven’t added documentation to the Python. A summary of the file content is an important addition.

Here’s another change between the shell and Python: the shell works with strings, but the Python psutil library uses integers for the process ID’s. This leads to converting the string to an integer using the int() function. This also means the script needs to do something useful when the file doesn’t contain a valid number for a PID.

The original shell script will silently do obscure and difficult-to-predict things if the file has an invalid PID. Try running ps what or ps ick to see some of this less-than-obvious behavior yourself.

Similarly, the kill command will write a message if a process can’t be found. When the process can’t be found, the script does not stop; it quietly ignores the problem. Maybe this situation is not really a problem. It’s hard to tell what the original intent was. Was this an omission? Or was it intentional?

Both the ps and kill programs set a status code in $? to a value of one to indicate that the program failed. Omitting a check of the value of $? means that the success or failure of these programs was being ignored.

Unlike the shell, Python consistently raises an exception when programs don’t complete with a status code of zero. Our Python script must handle this exception to make it explicit that the various kinds of failures doesn’t matter in general. In example, the Python code writes a warning message and continues processing. The pass statement can be used instead of a print functions to silence the exceptions.

Note, the psutil package is not part of the standard library. You’ll need to install this separately.

Creating Directories

Here’s the implementation of the make_output() function to create the output directory:

The shell’s date program does a lot of things with relatively cryptic syntax. I’ve written a simple function that emulates one feature of the date program. It’s easy to expand this to include the date ± offset feature, also.

I’ve included a type hint on the make_output_dir() definition. This summarizes the return value produced by this function. If you haven’t seen Python 3 type hints before, I need to emphasize the “hint” aspect. They don’t change the run-time behavior at all. There’s a separate quality check via the mypy program (http://mypy-lang.org/) to confirm that the type hints are all used consistently.

This function leverages the Python 3 pathlib library. The output_dir variable is called a “pure” path — a potential path that could exist. It’s built from a Path object and a string. The / operator builds complex paths from a base Path object.

After the pure path is built, the .mkdir() method creates an actual path from the pure path. Using the exist_ok option silences the FileExistsError exception raised if the directory already exists.

In the case where the directory already existed, the legacy shell script wrote an error message. In contrast to this, the Python code is silent. The Python code can be modified to emulate the error message behavior, if it’s important. Omit the exist_ok=True and handle the FileExistsError exception by printing a f”{output_dir}: File exists” message

A search of the source shows that the value of the output_dir variable is used elsewhere in the script. Because of this, I’ve returned the value as an explicit result of this function.

If you’re new to Python, it’s easy for a function to return multiple values. In the rare case that a step of a shell script sets multiple variables that need to be returned, just list all of them on the return statement.

Propagating the Change

When I first sketched the script, the signature of the make_output_dir() had no arguments or return values. As I dug into the details, I found that the output directory was used by run_analytics() and copy_to_current().The discovery process for return values and parameters can be challenging. In many cases, it involves unit tests failing because a variable is missing.

Changing the make_output_dir() function means I need to also change the overall script. Here’s a version that reflects all the variables that are needed by each step:

The contrived example doesn’t involve many environment variables being used to pass values around. Don’t be surprised by lots of shared variables in real-world scripts. In some cases, copy-and-paste programming may mean that a variable name is reused in separate steps.

If you look back at the copy_to_current() block of code, it has two global variables: output_dir and name. However, these are used to build one result path: “${output_dir}/summary_${name}.txt”. Rather than provide two variables, I’ve made a tiny redesign to return the entire path from the run_analytics() function.

Sorting and Searching

The next big step, the run_analytics() function executes an external program for a particular input file. Here’s the overall code. We’ll look at sorting filenames first, then we’ll look at the check_call() function to run a program.

The glob() method of a Path object enumerates all of the files in that directory. This is like using * outside of any quotes in the shell. Except that it’s explicit.

I’ve preserved the original shell design in this code. There’s a loop that includes a break. It’s not clear why this loop exists in the first place. But, I’m following my strategy to get things to work first and generalize them later. Once we have unit tests in place, we can rewrite this to optimize it.

This code contains an example of some processing that is inefficient in the shell.

The syntax is terse, but it involves a sub-shell with a pipeline. The pipeline involves running two separate programs and piping the output of one to the input of the other. While Linux does this quickly, it involves overheads that are avoidable.

In Python, we can use the following:

While the Python code is more efficient, it has another gigantic advantage. In Python, it’s very simple to handle sophisticated sort keys.

In the shell, we’ll often have contrived sort pipelines like this:

The first sed step extracts some numeric value from the filename so that it can be used for sorting. The last sed step removes the decoration after the sorting is complete. Sometimes this is done with awk. Either way, it’s clutter.

In Python, we can more simply provide a key function to the sorted() function.

For example, we might have files with names like prefix_yyyymmdd.ext and we want to sort by the date after the _, not the prefix. We can write a sort key function that looks like this:

This function will extract the stem of the file’s name, partition it, and return just the yyyymmdd portion of the name. This will be the sort key. We can, of course, do any kind of string or numeric calculation here in the unlikely case we have very complex sorting rules.

We can use this key function as follows:

This applies the object-to-key transformation function to create sort keys from each object to be sorted.

The optimization? We can replace the for/break construct with this:

This will take the one item from the sorted sequence of file names.

Here’s an example of how this kind of thing looks, using some fake-ish data. I’ll use strings instead of fabricating Path objects.

The suffix strings were stripped off the file names; these strings are used for key comparison when sorting. Here’s how it might look to take the next step.

These are sorted into ascending order, including reversed=True will switch the sense of the key comparisons.

Running A Program

One gigantic difference between Python and the shell is the way the shell runs programs implicitly. If the first word on the line is not a built-in feature of the shell, the name is found on the OS $PATH and executed as a program. In Python, the subprocess module is used to run programs. The check_call() function is what I used here. There are some other choices, depending on the precise behavior required.

The transformation from the original shell command to Python involves replacing three shell parsing features:

- A line of shell code is parsed as words based on whitespace and quotes. Quoted strings are treated as a single word. The shell provides two kinds of quotes to provide some control over the process. For Python, I’ve parsed the command into a list of words at design time. We don’t have to use complex quoting rules. Instead we provide the list of words. There’s a static list in the APP_NAME variable. A filename is appended to this list of words.

- Each line of shell code is processed to “glob” filenames. Any * is used as a wild-card file specification. To defeat this feature, we must provide * in quotes. In Python, I’ve replaced the shell’s implied globbing with an explicit call to the glob() method of a Path object. https://docs.python.org/3.6/library/pathlib.html#pathlib.Path.glob

- In the shell, the > and < redirection operators are used to connect open files to the program to be executed. In Python, I opened the files explicitly. I can then pass the open file connections to the check_call() function to be mapped to the child process stdout.

This transformation turns the terse shell command of $APP $filename >”${output_dir}/summary_${name}.txt” into something like this in Python:

First, I’ve build the target filename using a Path and the / operator. Then, I’ve build the command as a list words from APP_NAME and the path to be processed. After opening the target file, I’ve run the resulting command with the standard output directed to the open file.

“Hey, wait a minute,” you might say. “You’re just running a Python program from another Python script.”

Good point. You’ve identified the next level of script rewriting. We can probably merge these steps into a composite application that imports the original module_design_analytics application. It may also be sensible to use the runpy module to run the application from inside this script. These are important potential optimizations.

Checking Modification Times and Copying Files

The final step will copy a file if it’s newer than some target file.

The shell uses the [ file -nt file ] to compare modification times of two files. I’ve replaced this with a very explicit operation to get the os.stat() structure for each file. Then I compared the modification time, called st_mtime within each OS status structure.

The file copy is done with the shutil module. This has a copy2() function to copy the data as well as the metadata. There are alternatives to this in the shutil module that provide more control over how the metadata is handled.

The Imports

Here’s the list of the modules imported by this script:

In some cases (i.e., datetime, shutil), I’ve used the entire module. In other cases (i.e., pathlib, subprocess), I’ve imported only the specific class, function, or exception that was necessary. I’ve done this to show both styles. When a function like check_call() is imported, then the name doesn’t require any qualification. When a module like shutil is imported then the function requires the module as an explicit namespace qualification: shutil.copy2(). There are good reasons for each style.

psutil is not part of the standard library. It will have to be installed separately.

Testing

Once there’s some — allegedly — working code, the next step is to create unit tests. It’s a good practice to have testing drive development. Ideally, we’d write unit tests for the four high-level function steps in the shell script. That ideal is difficult to achieve when we’re not sure we know everything a given step does that’s relevant for subsequent steps. There’s a lot of global, shared state that needs to be understood.

If we’re using Python instead of the shell, then we have the advantage of starting from scratch. We can use test-first development techniques.

In either case, it’s essential to mock the OS-level resources that our Python applications will be touching. For this, I used the unittest.mock library. To build proper mock objects, it helps to look at the code. Some testing experts call this “white box” testing because I’m tailoring the mock objects to the software under test.

Here’s a representative test for one of our script’s functions. This will test the copy_to_current() function for one relationship of modification times:

The test imports the script to be tested; it has the unimaginative name of script.py. I’ve applied two patches to the namespace in which the script executes.

- The import of shutil is replaced by a Mock object.

- The import of pathlib.Path is replaced by a Mock object, also.

Both of these mock objects become arguments to the test function so I can use them. When there are more than one @patch decorators, note the inner-to-outer ordering of the parameter names. This is a consequence of Python’s rules for applying decorators.

I’ve also created a mock for the result_path argument, and assigned it to the variable mock_result_path. In this case, I’m not patching an imported name, I’m providing an argument to a function.

The function under test uses code like this

To be sure this will work, I created a stat attribute. It’s a Mock with a return_value. The returned value is a Mock with a st_mtime attribute. I’ve mocked the behavior that is used by the code under test.

The mock_path argument is a stand-in for the Path class definition. The function under test will use code like this:

We expect that the mock Path will be evaluated once to create a Path object. The default behavior of a function call is to create a Mock object. A test can reference that created mock with mock_path.return_value. Based on the code under test, the returned Mock object must have a stat() function, and the result of that function must have a st_mtime attribute.

When evaluating script.copy_to_current() in this test context, I have a bunch of expectations. The most important one is that shutil.copy2 will be called. I formalized this expectation as follows:

I’m also be interested in confirming the stat() method was called for each argument. I can add this as an assertion.

The whole point here is to add tests with different time stamp relationships to test other behaviors. The py.test package can find and run these tests because they have a consistent name pattern.

Next Steps

We’re not done, of course. I haven’t really addressed logging or configuration. Does this script use external environment variables? A configuration file? Command-line arguments?

Some shell scripts use the getopt command to parse options. Other shell scripts use $0, $1, etc., to gather positional arguments. A lot of these features can be buried inside a script, making it difficult to discover what the script really does and how it’s expected to be used.

Further, there’s another round of optimization possible. As noted above, this script runs a Python program. It may be appropriate to combine the application and the script into a composite application.

Conclusion

The shell is a primitive programming environment. It works well, but it’s not a very sophisticated language. I think of it as a 1970’s leisure suit, maybe made of double-knit polyester, with contrasting top-stitching. You know — dated. And definitely inappropriate for rock climbing or a day at the beach.

The two biggest problems are the paucity of useful data structures and the difficulty of unit testing.

We don’t need to eliminate all use of the shell. But we can benefit from reducing the use of the shell to the few things that it’s particularly good at. I’m a fan of relegating shell scripts to a few lines of code that set environment variables, establish the current working directory, and run a target program.

What we want to do is replace complex shell scripts with code written in a programming language that offers us a family of rich data structures. In many cases, we can find big blocks of shell code that are doing computations that are a simple, built-in feature of a programming language like Python.

Moving to Python means we can leverage smarter data structures. We can work with numbers and dates in a way that’s free from string-handling cruft. The best part? We can write unit tests. A small change to a shell script doesn’t break things. Instead, it gets tested like the rest of the application. We can put it into production with a lot of confidence that it will really work.

STATEMENT: These opinions are those of the author. Unless noted otherwise in this post, Capital One is not affiliated with, nor is it endorsed by, any of the companies mentioned. All trademarks and other intellectual property used or displayed are the ownership of their respective owners. This article is © 2017 Capital One.