AI 1.03 — Dartmouth Conference And The First Wave Of AI (1956–1973):

Coining of the term and Surge in funds

Father of AI and his LISP Machine:

The dawn of AI. The Dartmouth Workshop is often cited as the birthplace of Artificial Intelligence, and rightly so. In the summer of 1956 a virtuoso mathematician named John McCarthy, then only an assistant professor at Dartmouth College, decided to call upon a group of mathematicians to discuss thinking machines. The formal proposal, which introduced the term ‘Artificial Intelligence’, came from Dr. McCarthy and three others: Marvin Minsky, Nathaniel Rochester and Claude Shannon. With the help of the Rockefeller Foundation, 10 other participants attended the workshop at Dartmouth College.

Dr. McCarthy, who would later be called the father of AI, coined the term Artificial Intelligence. Its staying power owes much to his careful choice of words: the term was deliberately neutral, avoiding narrower labels like automated machines or cybernetics, and so it still fits the field today.

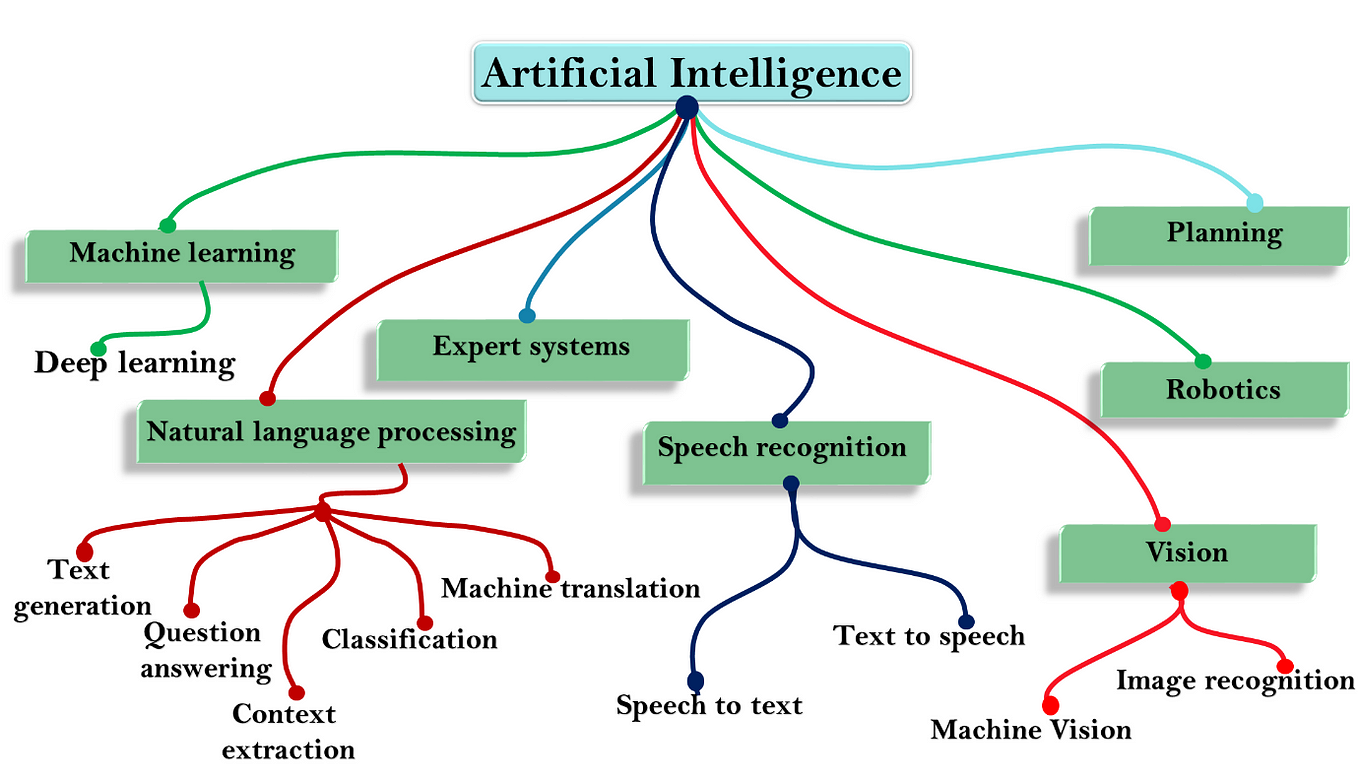

Expert Systems: Created with the idea of rivalling connoisseurs from different fields, an expert system is, simply put, a computer program that emulates the decision making of a human expert. The earliest attempts, going back to the ’50s, were limited by their reliance on techniques like flow charts and pattern matching.

The LISP Machine is often mentioned in the same breath as these early expert systems, although strictly speaking it was a general-purpose computer designed to run Lisp efficiently and a popular platform for AI software. LISP Machines were among the first commercially sold single-user workstations. Dr. McCarthy’s Lisp programming language, the second-oldest high-level language still in widespread use after Fortran, was the go-to language for Artificial Intelligence in the ’60s and was also the language the LISP Machine was built around.

These LISP Machines grew in complexity over the years, adding functionality that is still relevant today, like laser printing, garbage collection and computer graphics rendering. They supported only a single user and resembled today’s personal computer setup, with a bitmap display, mouse and keyboard, and memory of just above 1 MB (the RAM part has not aged so well).

The more complex LISP machines went hand in hand with the more advanced expert systems used in the ’60s and ’70s. These systems were rule based, meaning they reasoned from knowledge that human experts had already encoded as rules rather than learning it on their own. They essentially have two parts:

i) The Knowledge base which has rules and facts.

ii) And the inference engine which applies rules to the already known facts from the knowledge base to infer new facts.
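To make the two parts concrete, here is a minimal sketch of a forward-chaining inference engine in Python. The rule format (a tuple of premises plus one conclusion) and the medical-sounding facts are made up for illustration; real expert systems of the period used far richer rule languages.

```python
def forward_chain(facts, rules):
    """Repeatedly apply if-then rules until no new facts can be inferred."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: rules plus starting facts.
rules = [
    (("has_fever", "has_cough"), "may_have_flu"),
    (("may_have_flu",), "recommend_rest"),
]
facts = {"has_fever", "has_cough"}

inferred = forward_chain(facts, rules)
print(sorted(inferred - facts))  # ['may_have_flu', 'recommend_rest']
```

The knowledge base is just data (`rules` and `facts`), while `forward_chain` plays the role of the inference engine, which is why the same engine can be reused with a completely different set of rules.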

Reward Based System and Checkers: In 1959, another computer scientist named Arthur Samuel used an early form of what we now call reinforcement learning, a reward-based system, to implement the two-player game of checkers (draughts, not Chinese Checkers). He also popularised the term ‘Machine Learning’ in a paper published that same year.

His checkers program also used an early version of alpha-beta pruning, a technique that skips branches of a search tree that cannot affect the final result, so that the search can go deeper in the same amount of time. The technique is still in use today and is applied to the minimax algorithm for two-player games like Tic-Tac-Toe, Go and Chess.

Although they are often mentioned only in passing alongside Dr. John McCarthy, the three other names on the proposal (Marvin Minsky, Nathaniel Rochester and Claude Shannon) were huge contributors to their respective fields.

Marvin Minsky Vs Frank Rosenblatt and the Battle for Neural Networks:

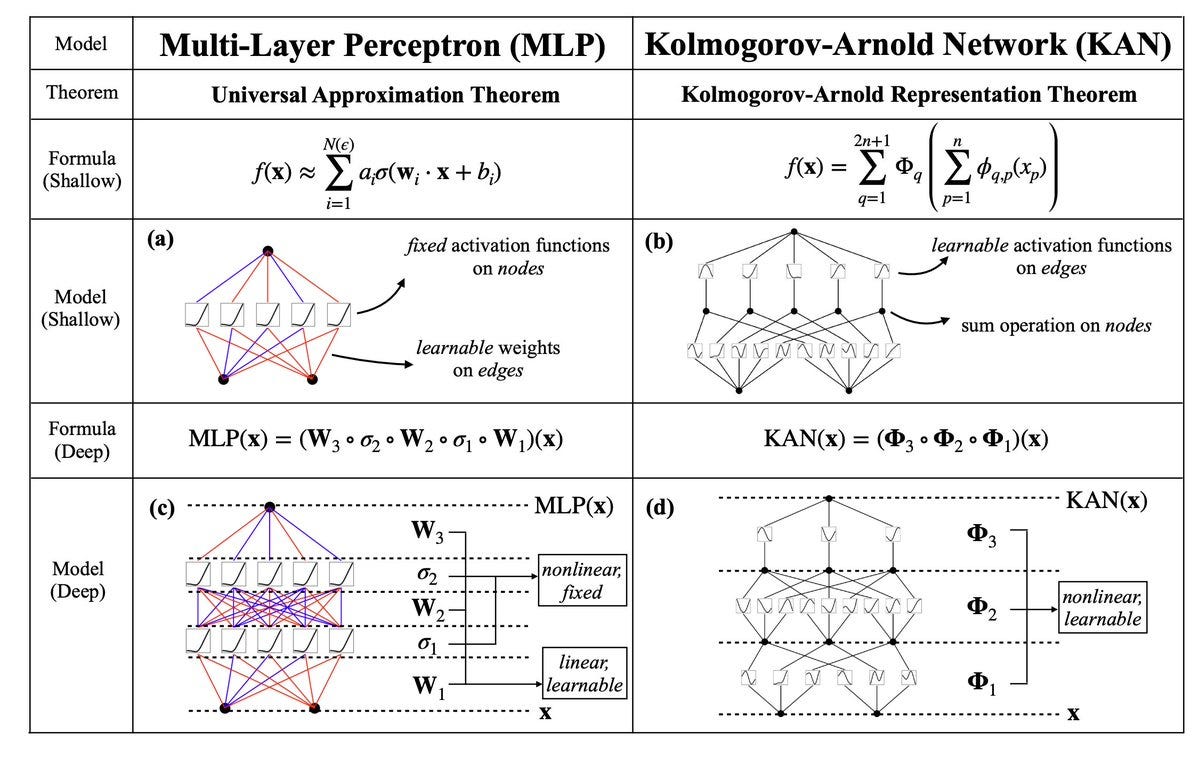

Frank Rosenblatt, a psychologist at Cornell University, introduced the perceptron in the late 1950s, a model that is now an integral part of artificial neural networks (ANNs). By 1960 he had built it into a machine, the Mark I Perceptron, which is often described as the first computer to learn new skills by trial and error and to apply them for prediction. This was achieved with the help of the neural network model that he created.

He built on the neural network model suggested in the 1940s (the cybernetics era, when attempts were made to combine many concepts from biology, psychology, engineering, and mathematics), which was simpler. His feed-forward perceptron had only a single layer of trainable weights between its inputs and its output; today we have far more complex models. Built into the machine, this mechanism could classify images of different shapes or letters, for instance deciding whether a photo showed a man or a woman. It was very much a physical machine, which needed physical photos to train on, and it was able to make predictions for unseen images as well.
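The trial-and-error idea can be sketched in a few lines of Python. This is the standard perceptron learning rule in its modern textbook form, not a reconstruction of the Mark I hardware, and the AND-gate training data and the function names are chosen purely for illustration.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Nudge the weights after every wrong guess (trial and error)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy data: the logical AND of two inputs, which is linearly separable.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
print([predict(weights, bias, x) for x, _ in and_samples])  # [0, 0, 0, 1]
```

Each mistake moves the decision boundary a little towards the correct side. For data that a straight line can separate, as here, the rule is guaranteed to settle on a working set of weights.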

Enter: Marvin Minsky, prodigiously gifted mathematician, friendly rival of Frank Rosenblatt and one of the important attendees of the Dartmouth Workshop. After Frank Rosenblatt’s well-received and very popular perceptron model, it took another nine years, until 1969, for Dr. Marvin Minsky and Dr. Seymour Papert to come out with a book on perceptrons that directly took on Rosenblatt’s version.

Minsky’s book ‘Perceptrons’, which he published along with Seymour Papert, gave mathematical proof of the limitations of perceptrons with a single layer of trainable weights, showing that they cannot even represent a function as simple as XOR. The book also pointed to the difficulty of training multilayer perceptrons.
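The XOR limitation is easy to see for a single threshold unit with weights w1, w2 and bias b: XOR would need b ≤ 0, w1 + b > 0, w2 + b > 0 and w1 + w2 + b ≤ 0 all at once, which is impossible (adding the middle two gives w1 + w2 + 2b > 0, forcing b > 0). The sketch below checks a grid of candidate weights as a sanity check rather than a proof, and then shows a hand-wired network with one hidden layer that does compute XOR. The grid range and the hidden-layer weights are illustrative choices.

```python
from itertools import product

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def step(z):
    return 1 if z > 0 else 0


def solves_xor(w1, w2, b):
    """Does a single threshold unit with these weights reproduce XOR?"""
    return all(step(w1 * x[0] + w2 * x[1] + b) == y for x, y in XOR.items())


# Try every weight combination from -4.0 to 4.0 in steps of 0.5.
grid = [i / 2 for i in range(-8, 9)]
single_unit_hits = sum(solves_xor(w1, w2, b) for w1, w2, b in product(grid, repeat=3))
print(single_unit_hits)  # 0: no single unit on this grid computes XOR


def two_layer_xor(x):
    """Hand-wired hidden layer: XOR = (x1 OR x2) AND NOT (x1 AND x2)."""
    h_or = step(x[0] + x[1] - 0.5)
    h_and = step(x[0] + x[1] - 1.5)
    return step(h_or - h_and - 0.5)


print([two_layer_xor(x) for x in XOR])  # [0, 1, 1, 0]
```

So the representational problem disappears once a hidden layer is added. What was missing at the time was a practical way to learn those hidden weights instead of wiring them by hand.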

But here’s the twist. The pessimistic conclusion many readers drew from Minsky’s book, that neural networks were a dead end, was already on shaky ground when the book was released, but this was only widely realized seventeen years later. Backward propagation, or backpropagation, is often thought to have been invented seventeen years after Minsky’s book, yet its core ideas had been worked out even before the book came out. (Backpropagation is a method for adjusting weights to compensate for the error found during learning. It makes multilayer perceptrons practical to train, which removes the main objection raised against Rosenblatt’s model.)
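As a rough illustration of "adjusting weights to compensate for error", here is a tiny 2-2-1 sigmoid network trained on XOR with full-batch gradient descent. The starting weights, learning rate and epoch count are arbitrary assumptions, and from a given starting point a network this small can stall before fully learning XOR; the point of the sketch is only that each backward pass pushes the error down.

```python
import math

DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def loss(params):
    """Half the sum of squared errors over the four XOR examples."""
    return 0.5 * sum((forward(params, x)[1] - t) ** 2 for x, t in DATA)


def train(params, epochs=2000, lr=0.5):
    w_hidden, b_hidden, w_out, b_out = params
    for _ in range(epochs):
        g_wh, g_bh = [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]
        g_wo, g_bo = [0.0, 0.0], 0.0
        for x, t in DATA:
            hidden, output = forward((w_hidden, b_hidden, w_out, b_out), x)
            # Error signal at the output, then propagated back to each hidden unit.
            delta_out = (output - t) * output * (1 - output)
            g_bo += delta_out
            for j in range(2):
                delta_h = delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                g_wo[j] += delta_out * hidden[j]
                g_bh[j] += delta_h
                for i in range(2):
                    g_wh[j][i] += delta_h * x[i]
        # One gradient step using the gradients summed over all examples.
        for j in range(2):
            w_out[j] -= lr * g_wo[j]
            b_hidden[j] -= lr * g_bh[j]
            for i in range(2):
                w_hidden[j][i] -= lr * g_wh[j][i]
        b_out -= lr * g_bo
    return w_hidden, b_hidden, w_out, b_out


# Arbitrary (assumed) starting weights: hidden weights, hidden biases, output weights, output bias.
start = ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], [0.6, -0.7], 0.05)
loss_before = loss(start)
loss_after = loss(train(start))
print(loss_before, loss_after)
```

The key line is `delta_h`: the output error is passed backwards through the output weights, which tells every hidden unit how much it contributed to the mistake.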

ELIZA, The first AI chatbot (1964–1966):

ELIZA, created by Dr. Joseph Weizenbaum at MIT, was the first recorded attempt at an AI chatbot. It certainly is astounding to hear that AI chatbots existed in the ’60s, decades before the internet era. It used simple pattern matching and substitution techniques to answer queries. ELIZA and the chatbots that came along later, including Parry (1972) and Jabberwacky (1988), are usually judged against one main goal: passing the Turing test.
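Pattern matching and substitution sounds abstract, so here is a minimal ELIZA-style sketch in Python. The three rules and the small pronoun table are invented for illustration; they are not taken from Weizenbaum's original script, which was considerably larger.

```python
import re

# Swap first-person words for second-person ones when echoing the user.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical rules: a pattern to look for and a reply template to fill in.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence):
    cleaned = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)  # the pattern must match from the start
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."  # fallback when nothing matches


print(respond("I feel sad about my job."))  # Why do you feel sad about your job?
print(respond("Hello there"))               # Please go on.
```

There is no understanding involved: the program simply echoes part of the input back inside a canned template, which is also why replies from this kind of system can be so hit or miss.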

ELIZA in particular attempted to play the role of a person-centred psychotherapist. I say attempted because ELIZA was certainly hit or miss when it came to answering questions. But we must remember that even Siri was hit or miss just a few years ago, and only recently has it improved substantially. Siri and Google Now fall under what is called query-based AI: they just answer our questions and are not entirely conversational.

Google’s AI chatbot Meena, a conversational AI, is reported to score 79% on Google’s Sensibleness and Specificity Average (SSA), a measure of how sensible and specific its replies are, against 86% for human beings.

The AI Winter:

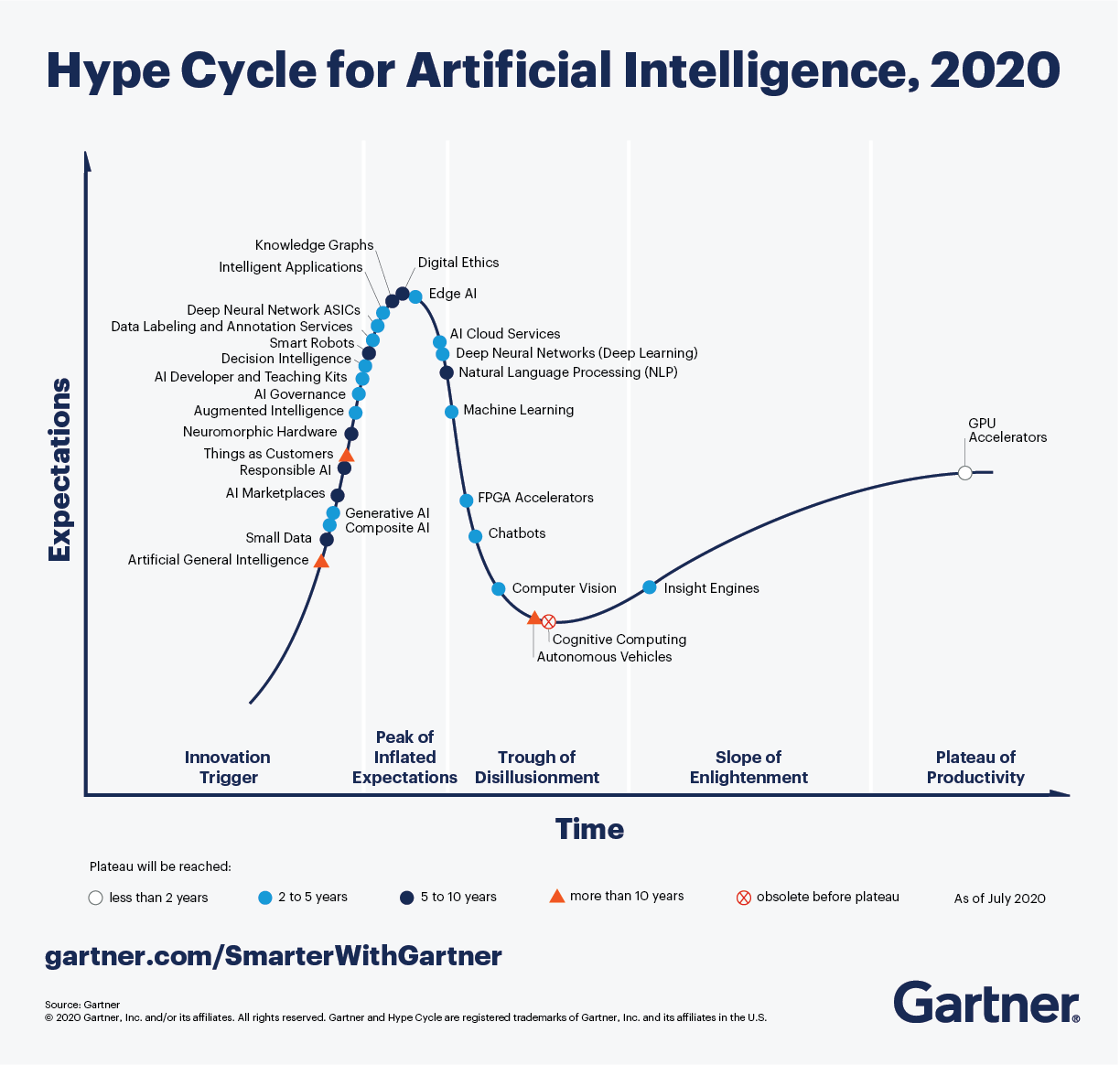

The period from the Dartmouth Conference until 1973 is called the first wave of AI because, for the first time, there was huge interest in the field. Funds flowed in from many organisations, most importantly from DARPA (the Defense Advanced Research Projects Agency).

But once it became clear that the technology of the day was not up to the demands of AI, and after years of little visible progress, interest in the field naturally dropped. That drop in interest caused the funds flowing into AI research labs to plummet.

Again, this drop in funds came mainly from DARPA. With no support from such a huge organisation, the AI community found little support from others either. Research did go on, but the number of people working in the field was low and the pace of work slowed drastically. This period between 1974 and 1980, when the funds were frozen, is called the AI Winter. It was short lived, as new breakthroughs paved the way for the Second Wave of AI.