Abstract

This research introduces a new approach for improving the reliability and practicality of online tool condition monitoring (TCM) based on raw force signals and cutting tool images. A new vision system based on a CCD camera monitors the tool condition by capturing images of the rotating cutting tool during milling, which makes inspection convenient and feasible. First, a wear extraction method based on image processing of the projected rotating tool is investigated. The extracted wear correlates highly with the experimentally measured flank wear, reaching 99.37%. Then, to improve the accuracy of tool wear identification, a tool wear prediction method is developed. It combines a hybrid deep learning model, consisting of a Convolutional Neural Network (CNN) and a Bidirectional Long Short-Term Memory (BiLSTM) network, with the Maximal Overlap Discrete Wavelet Transform (MODWT), a wavelet-based multiresolution analysis used to preprocess the raw signals. Cutting experiments with different tool sizes and cutting parameters were performed on a vertical CNC milling machine. Finally, the identification accuracy of the proposed model was compared with that of other deep learning and machine learning models. The experimental results show that, compared with available TCM methods, the proposed method improves the accuracy of tool wear condition recognition. The proposed model achieved the highest regression coefficient R among the compared prediction methods, 99.5% on average.

1 Introduction

The evolution of tool wear inspection and detection models has proven advantageous for enhancing productivity and efficiency. Accurate tool condition monitoring (TCM) models are essential for improving productivity and the quality of machined parts. For this reason, a significant amount of research on tool condition monitoring systems (TCMs) is being carried out worldwide [1]. Cutting tools are of great importance in machining because of their crucial role in achieving high quality and efficiency. Cutting tool monitoring methods fall into two primary categories: direct and indirect monitoring [2]. Indirect approaches have gained significant popularity because they are more flexible than direct methods. Frequently used indirect monitoring signals include vibration [1, 3, 4], cutting force [5], acoustic emission or sound [6, 7], current [8], temperature [9, 10], and machined surface images [11].

To acquire the vibration signal, an accelerometer is attached to the machine table or the main spindle. The installation position of the vibration sensor can affect the signal, which makes vibration inconvenient to apply in tool condition monitoring. In addition, extra signal processing is required to filter out external vibrations unrelated to the cutting tool's condition. The acoustic emission (AE) signal is acquired with an AE sensor. As with vibration signals, ambient noise can degrade the accuracy of AE data. Interpreting AE data can be complex and may require experience in signal processing. The AE signal may also fluctuate suddenly because of intermittent cutting. Moreover, extracting accurate AE features related to tool wear status can be challenging. Temperature in metal cutting can be measured with a thermocouple installed near the cutting tool [10]. The temperature signal is better suited to specific tools, such as turning tools, and may pose challenges and limitations in other cutting operations. Accurate placement of temperature sensors is also crucial for reliable measurements. A sensor placed too close to the cutting area may give inaccurate readings because of heat dispersed by the cutting chips. On the other hand, a sensor placed too far from the cutting zone may not provide real-time temperature data. Additionally, temperature changes may not reliably indicate tool wear, because temperature is also influenced by factors such as high cutting speeds and the differing properties of the cutting materials.

Cutting force signals can be acquired using a dynamometer during cutting. The dynamometer can be mounted on the machine table, or a rotating dynamometer can be integrated into the tool holder. Despite its high cost, the force sensor provides the most precise signals regarding changes in tool condition and is considered the most accurate indicator of tool status [12]. This study proposes a new method for tool wear extraction based on tool image processing. One of its objectives is to establish a correlation between the sensor signal and the tool wear obtained by image processing. Therefore, the most accurate sensor signal was preferred, regardless of the cost of implementing the sensor system. Force sensors measure the forces acting in the tool-workpiece interaction zone, providing precise data on the condition of the cutting tool. They also provide real-time feedback, allowing a quick response to tool wear or tool breakage.

In the direct approach, the tool condition can be visualized using an imaging system, such as a CCD camera [13]. Visual inspection with imaging systems can provide accurate information on the condition of the tool edge. This study proposes combining sensor signals with a vision system for tool condition monitoring, because the combination can substantially improve the accuracy of tool wear detection. A few recent studies have proposed combining vision systems and sensor signals. Yuan et al. [14] built a tool state classification model using a random forest method based on sensor signals and machined surface image processing. Chen et al. [15] proposed a method that combined an AE signal with a vision system for tool wear monitoring during milling. The authors constructed a mapping between the wear amount extracted by the machine vision system and the AE signal features. They applied classical machine learning algorithms such as support vector machines and back propagation neural networks. The recognition accuracy of their model reached 96.11%. Methods that combine direct and indirect monitoring remain a crucial challenge in TCM development. They require further investigation to improve the efficiency of TCM identification models. Sensor signals often provide real-time measurements that enable prompt monitoring of tool wear. Integrating them with a vision system can improve the accuracy and reliability of tool wear monitoring.

In this study, we propose a deep learning model based on raw force signals and an image processing method for tool wear prediction. The aim is to improve the accuracy of tool wear recognition under different milling parameters. Data-driven TCM problems are growing, new operating conditions must be incorporated, and deep learning offers clear advantages. As a result, deep learning prediction models have become more accurate and more widely used than traditional machine learning algorithms. Furthermore, the growing amount of training data makes traditional machine learning approaches inadequate. Deep learning methods are therefore increasingly used in tool monitoring research. Deep learning and multi-layer neural network approaches have shown improved learning and prediction capabilities as the amount of training data in TCM has increased [16]. Deep learning techniques can perform data processing tasks such as automatic feature extraction, dimensionality reduction, novelty detection, and transfer learning [17]. Deep learning algorithms frequently used for intelligent tool monitoring include convolutional neural networks (CNNs), autoencoders and their variants, recurrent neural networks (RNNs), and deep belief networks (DBNs).

Cao et al. [18] used a deep convolutional neural network to extract features for automatic online tool wear monitoring. Nguyen et al. [19] presented a deep learning network that uses stacked autoencoders and a Softmax classifier to identify tool conditions when cutting cast iron. The authors acquired the vibration signal and extracted frequency-domain features related to the tool state. Their model achieved high classification accuracy and recognized various tool wear states accurately. Ma et al. [20] designed a deep learning model composed of two CNN-based models for tool wear prediction from force signals during titanium alloy milling. The authors used two evaluation criteria, RMSE and MAE, to compare its performance with other prediction models. Their model outperformed the other deep learning models. Xia et al. [21] developed a deep network based on an autoencoder to recognize different fault levels in bearings from raw vibration signals. Wang et al. [1] developed a deep neural network that integrates an LSTM network and a stacked autoencoder to adaptively extract wear characteristics under varying machining parameters. They used three statistical indicators to assess the identification accuracy of their model. Zhao et al. [22] combined deep learning algorithms to extract local signal characteristics from raw data. They first applied a CNN and then used a BiLSTM to encode temporal information for tool life prediction. An et al. [23] used a CNN to extract features and reduce feature dimensions. They then combined unidirectional and bidirectional LSTM layers with the CNN to predict the remaining useful life of the cutting tool during machining. Li et al. [24] applied a deep learning model based on a 1D convolutional neural network (CNN) and long short-term memory (LSTM) to predict tool wear progression from cutting force signals in the milling of CFRP material.

Various TCM studies recommend using a single sensor instead of multiple sensors to avoid the drawbacks of multi-sensor setups. These drawbacks include cost, large data volumes, complex information, installation problems, and manual labeling. Ghosh et al. [25] applied four different sensors and found that the spindle motor current and cutting force signals carried more informative features than the remaining signals. They suggested that sensor fusion improves recognition accuracy only to a limited degree and may even reduce it.

Research on TCM approaches that integrate direct and indirect methods remains insufficient. It does not yet effectively address the challenges of tool monitoring or improve the effectiveness of monitoring models. This work proposes a comprehensive approach that integrates direct and indirect TCM methods to analyze and predict endmill tool wear across different milling conditions. The indirect method relies on force signals. The direct method introduces an innovative technique that identifies tool wear from projected tool images. The contributions of this paper can be summarized as follows:

-

For different endmills, wear features such as the white pixel count are extracted from projected images of the rotating tool acquired by the vision system. The deep learning models are then trained using the sensor signals together with the pixel-based tool wear values.

-

For tool condition prediction, a novel approach is applied that combines MODWT-based multiresolution signal analysis with a hybrid CNN-BiLSTM deep learning model.

-

The proposed three-phase model was validated through a case study involving sensor data collection. Comparative models were used to assess the efficacy and predictive accuracy of the proposed model: Support Vector Regression (SVR), Backpropagation Neural Network (BPNN), Long Short-Term Memory (LSTM), Convolutional Neural Network (CNN), Bidirectional LSTM (BiLSTM), CNN-CNN, and CNN-BiLSTM.

The remainder of this paper is organized as follows. Section 2 provides a detailed overview of the structure of the TCM system and the mathematical basis of the CNN, BiLSTM, and MODWT algorithms. Section 3 presents the experimental methodology, including a brief description of the online tool wear inspection system and the signal processing techniques used. Section 4 presents the formulation and establishment of the tool wear identification model based on the three-phase combination (MODWT-CNN-BiLSTM). Section 5 reports comparative results for other tool wear identification methods. Finally, Sect. 6 summarizes the conclusions and directions for future study.

2 Proposed method and theoretical bases

2.1 Monitoring system

Tool monitoring methods are categorized as direct and indirect. Indirect methods use sensor signals to evaluate the tool state. They rely on machine learning or deep learning to analyze sensor and wear data, enabling predictive maintenance by detecting small changes that indicate tool wear or damage. Indirect methods offer real-time insight into tool performance and are relatively easy to implement in practice. However, they do not always identify tool wear accurately, for several reasons. Sensor signals may not capture minor wear variations, or they may be influenced by hard spots in the material, leading to delayed detection or false negatives. Moreover, interpreting sensor data is challenging. It is affected by factors such as environmental noise, sensor placement, and the complexity of signal analysis. Most indirect TCM systems require offline tool wear measurement and signal feature extraction. In offline measurement, the tool and tool holder are loaded and unloaded repeatedly, which reduces work efficiency and can introduce significant deviations. Selecting features that are highly correlated with the tool state is another challenge for sensor-based systems.

Direct methods, which rely on cutting tool images, aim to overcome the limitations of indirect methods. Acquiring tool images directly enables more accurate corrective actions, such as replacing the tool or stopping the machine, and thereby improves TCM effectiveness. Direct approaches often involve physically monitoring tool wear by visually inspecting tool surface images after a significant cutting distance on the workpiece. This can require interrupting the machining process for tool evaluation, which is time-consuming and stops production. Direct approaches may also face other constraints. For example, they may be unable to detect tool breakage or changes in tool condition while the tool is engaged with the workpiece. In addition, they may not capture the gradual progression of tool wear over time, which makes it difficult to estimate the wear rate accurately.

The current study proposes an integrated TCM system that combines direct and indirect methods to detect tool wear during cutting. The system uses a vision system integrated with the machine tool control unit. This vision system can operate in the machine's automatic mode to measure tool wear without stopping machining. The vision-based tool image projection method inspects rotating endmills from the radial and axial directions simultaneously. This effectively identifies changes in the tool contour caused by wear. At the same time, the force signal is acquired and processed during cutting. The vision and force sensing systems operate together during milling, allowing in-process monitoring without interrupting the cut. By combining sensor-based and vision-based methods, the system gathers comprehensive information about the tool condition. This information includes real-time cutting signals and direct observation of physical wear. This integration can improve the reliability and robustness of tool condition assessment and provides redundancy if one method fails. Combining direct and indirect methods may also allow early detection of tool wear. It can enable predictive maintenance strategies that replace tools before failures occur, minimizing downtime and improving process efficiency. Figure 1 illustrates the two key stages of the monitoring process: establishing the prediction model and conducting online tool wear inspection.

The deep learning model is built from sensor signals and tool wear features extracted from images captured by the vision system. During the training phase, the vision module supplies real-time measurements of tool wear features to the monitoring system. The white pixel count in the projected tool images serves as the wear indicator. Simultaneously, force signal segments are input directly into the deep learning model without feature extraction. The study proposes a three-phase deep learning approach that integrates MODWT with the hybrid CNN-BiLSTM model to process the raw force signals. After training, the model with the lowest prediction error between actual and predicted values is selected. During online tool condition inspection, this optimized model detects the tool wear condition from sensor signal segments obtained during cutting. The vision system then verifies the tool condition to ensure accurate decisions on whether to replace the tool or continue cutting. This improves the accuracy and robustness of the tool wear monitoring model.
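The model-selection step can be illustrated with a short Python sketch. The function names, the toy numbers, and the use of RMSE as the single selection criterion are assumptions for illustration; the text states only that the model with the lowest prediction error is kept. R is computed here as the Pearson correlation between measured and predicted wear.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, and the Pearson correlation coefficient R."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    return {
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAE": float(np.mean(np.abs(err))),
        "R": float(np.corrcoef(y_true, y_pred)[0, 1]),
    }

def select_best_model(predictions, y_true):
    """Return the name of the model with the lowest RMSE, plus all scores.

    predictions: dict mapping a (hypothetical) model name to its predicted wear values.
    """
    scores = {name: regression_metrics(y_true, y_hat) for name, y_hat in predictions.items()}
    best = min(scores, key=lambda name: scores[name]["RMSE"])
    return best, scores

# Toy validation data (made-up flank wear values in mm).
measured = [0.05, 0.10, 0.15, 0.20]
candidates = {
    "model_a": [0.06, 0.11, 0.14, 0.21],
    "model_b": [0.02, 0.15, 0.10, 0.30],
}
best, scores = select_best_model(candidates, measured)
print(best, scores[best])
```

In practice the same scores would be computed on held-out data for every candidate architecture before choosing one.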

Combining vision systems with sensor systems can improve the understanding of wear mechanisms and process stability. Aided by image processing algorithms, vision systems quantify wear features such as wear area and wear progression in real time. This facilitates precise analysis and comparison of tool conditions. The decision to change the tool or continue cutting depends on the wear threshold and other machining criteria. The proposed TCM system offers flexibility in this decision-making. To evaluate the feasibility of the method in TCM applications, the current research investigates the proposed system under different cutting conditions and tool geometries. The following subsections detail the theoretical basis of the proposed hybrid deep learning model.

2.2 Maximal overlap discrete wavelet transform (MODWT)

Grossmann and Morlet developed the mathematical basis for wavelet analysis in 1984. The primary aim of the wavelet transform is to obtain a localized time-scale representation that effectively captures transient phenomena across different time bands. Wavelet analysis can identify both the frequency content of a time-domain signal and how that content varies over time. There are two main types of wavelet transforms: the discrete wavelet transform (DWT) and the continuous wavelet transform (CWT). The DWT is more suitable than the CWT for analyzing discrete multiscale time-domain data.

The DWT, however, restricts the sample size: for a J-level decomposition, the number of samples must be a multiple of \(2^J\). Moreover, the decimation (down-sampling) inherent in the DWT can cause information loss during model training and introduce bias into subsequent estimates. The maximal overlap discrete wavelet transform (MODWT) addresses these shortcomings. It removes the DWT's constraints on sample size, its down-sampling, and the requirement for a dyadic data structure, among other considerations [26]. Both the DWT and the MODWT use a pair of filters to split the frequency band of the input signal into low and high frequencies. The low-frequency outputs are called scaling coefficients, and the high-frequency outputs are called wavelet coefficients. The MODWT is translation (shift) invariant: circularly shifting the signal shifts the coefficients by the same amount without altering their pattern. It can also analyze signals of any sample size. Moreover, at every transformation level, the number of wavelet coefficients and the number of scaling coefficients each equal the number of observations.
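The properties above can be checked numerically with a minimal Haar MODWT written in NumPy. This sketch makes two assumptions. It uses the Haar filter, whereas practical analyses often use longer filters. It also uses circular (periodic) boundary handling. The function name `modwt_haar` is hypothetical. The sketch follows the standard pyramid algorithm: at level j the filter taps are spread apart by \(2^{j-1}\) samples, and the outputs are not down-sampled.

```python
import numpy as np

# Haar MODWT filters: the Haar DWT filters divided by sqrt(2).
H_TILDE = np.array([0.5, -0.5])  # wavelet (high-pass) filter
G_TILDE = np.array([0.5, 0.5])   # scaling (low-pass) filter

def modwt_haar(x, levels):
    """Haar MODWT via the pyramid algorithm with circular boundaries.

    Returns (W, V): the list of wavelet coefficients W_1..W_J and the
    level-J scaling coefficients V_J. Every array has len(x) entries.
    """
    v = np.asarray(x, dtype=float)
    W = []
    for j in range(1, levels + 1):
        step = 2 ** (j - 1)  # filter taps are spread apart; nothing is down-sampled
        w_j = np.zeros_like(v)
        v_j = np.zeros_like(v)
        for l in range(H_TILDE.size):
            shifted = np.roll(v, l * step)  # shifted[t] = v[(t - l*step) mod N]
            w_j += H_TILDE[l] * shifted
            v_j += G_TILDE[l] * shifted
        W.append(w_j)
        v = v_j
    return W, v

x = np.random.default_rng(0).normal(size=101)  # odd length: not a multiple of 2**J
W, V = modwt_haar(x, levels=3)
print([w.size for w in W], V.size)  # → [101, 101, 101] 101

# Energy is preserved across levels.
print(np.isclose(np.sum(x ** 2), sum(np.sum(w ** 2) for w in W) + np.sum(V ** 2)))  # → True

# Circularly shifting the signal shifts the coefficients by the same amount.
W_shift, _ = modwt_haar(np.roll(x, 5), levels=3)
print(all(np.allclose(ws, np.roll(w, 5)) for ws, w in zip(W_shift, W)))  # → True
```

A decimated DWT would need the 101-sample signal to be padded or truncated before a three-level decomposition. The MODWT, by contrast, keeps every sample at every level.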

The MODWT multiresolution decomposition differs from that of the DWT in that it does not include a down-sampling operation, as shown in Fig. 2 [27]. The MODWT computes all coefficients at every level instead of discarding half of them. As a result, the DWT generally has a lower computational load than the MODWT.

In the DWT, the M/2 level-1 wavelet coefficients of an input signal with M samples are obtained by multiplying an M/2 × M transformation matrix by the M × 1 input signal. In contrast, the MODWT computes M wavelet coefficients by multiplying an M × M transformation matrix by the M × 1 input signal [28]. The DWT therefore yields fewer wavelet coefficients than there are samples in the input signal. Furthermore, in real-time applications, a significant portion of the computational resources is devoted to computing the wavelet coefficients, at a rate notably lower than the sampling frequency. The MODWT also works with any sample size and is shift (translation) invariant [29]. These properties are highly valuable in signal analysis and feature extraction. For a discrete signal \(X=\left\{X_t,\ t=0,\dots,M-1\right\}\), the MODWT scaling (V) and wavelet (W) coefficients at level j are given by:
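The standard MODWT definitions take the following form. They are written with the symbols used in this section and assume circular (periodic) boundary handling:

\[ \widetilde{W}_{j,t}=\sum_{l=0}^{L_j-1}\widetilde{h}_{j,l}\,X_{(t-l)\bmod M},\qquad t=0,\dots,M-1 \tag{1} \]

\[ \widetilde{V}_{j,t}=\sum_{l=0}^{L_j-1}\widetilde{g}_{j,l}\,X_{(t-l)\bmod M},\qquad t=0,\dots,M-1 \tag{2} \]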

The symbols \({\widetilde{h}}_{j,l}\) and \({\widetilde{g}}_{j,l}\) represent the wavelet (high-pass) and scaling (low-pass) filters, respectively, for the jth level of the MODWT. Here, j is the decomposition level and l is the index of the filter coefficient. Equations (3) and (4) can be used to determine these filters:
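In the standard formulation, the level-j MODWT filters are the corresponding level-j DWT filters rescaled by \(2^{j/2}\):

\[ \widetilde{h}_{j,l}=\frac{h_{j,l}}{2^{j/2}},\qquad l=0,\dots,L_j-1 \tag{3} \]

\[ \widetilde{g}_{j,l}=\frac{g_{j,l}}{2^{j/2}},\qquad l=0,\dots,L_j-1 \tag{4} \]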

Here, \({h}_{j,l}\) and \({g}_{j,l}\) are the jth-level high-pass and low-pass filters of the DWT. L is the length of the wavelet filter, and \({L}_{j}=\left({2}^{j}-1\right)(L-1)+1\) is the length of the jth-level filters. The MODWT wavelet coefficients at each scale have the same length as the original signal X [30]. In matrix notation, the transform can be written as:
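The standard matrix form treats \(X\) as an \(M\times 1\) column vector and collects the level-j coefficients as:

\[ \widetilde{W}_{j}=\widetilde{\omega}_{j}X \tag{5} \]

\[ \widetilde{V}_{j}=\widetilde{v}_{j}X \tag{6} \]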

where \({\widetilde{\omega }}_{j}\) is an M × M matrix determined by the filter coefficients \(\{{\widetilde{h}}_{j,l}\}\) as follows:
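In the standard construction, \(\widetilde{\omega}_j\) is circulant. Each row is built from the periodized filter \(\widetilde{h}^{\circ}_{j,l}\), which is the level-j filter wrapped to length M. When \(L_j\le M\), this is simply the zero-padded filter. Row t is that filter circularly shifted by t positions, so \((\widetilde{\omega}_j)_{t,s}=\widetilde{h}^{\circ}_{j,(t-s)\bmod M}\):

\[ \widetilde{\omega}_{j}=\begin{bmatrix} \widetilde{h}^{\circ}_{j,0} & \widetilde{h}^{\circ}_{j,M-1} & \cdots & \widetilde{h}^{\circ}_{j,1}\\ \widetilde{h}^{\circ}_{j,1} & \widetilde{h}^{\circ}_{j,0} & \cdots & \widetilde{h}^{\circ}_{j,2}\\ \vdots & \vdots & \ddots & \vdots\\ \widetilde{h}^{\circ}_{j,M-1} & \widetilde{h}^{\circ}_{j,M-2} & \cdots & \widetilde{h}^{\circ}_{j,0} \end{bmatrix} \tag{7} \]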

The matrix \({\widetilde{v}}_{j}\) is constructed in the same way as \({\widetilde{\omega }}_{j}\), with \(\{{\widetilde{h}}_{j,l}\}\) replaced by \(\{{\widetilde{g}}_{j,l}\}\). The original time series X can be reconstructed from its MODWT using the following formula:
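In the standard form, the synthesis combines the transposed filter matrices with the coefficients of levels 1 to J:

\[ X=\sum_{j=1}^{J}\widetilde{\omega}_{j}^{\text{T}}\widetilde{W}_{j}+\widetilde{v}_{J}^{\text{T}}\widetilde{V}_{J} \tag{8} \]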

which constitutes a multiresolution analysis (MRA) of the original signal X. Here, \({\widetilde{D}}_{j}={\widetilde{\omega }}_{j}^{\text{T}}{\widetilde{W}}_{j}\) is the jth-level MODWT detail and \({\widetilde{S}}_{J}={\widetilde{v}}_{J}^{\text{T}}{\widetilde{V}}_{J}\) is the Jth-level MODWT smooth.
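The reconstruction can be verified with a minimal Haar sketch in NumPy. It is an illustration under stated assumptions (Haar filter, circular boundaries, and the hypothetical name `modwt_mra`), not the implementation used in the study. Each detail \(\widetilde{D}_j\) is obtained by pushing \(\widetilde{W}_j\) back through the transposed filters. The smooth \(\widetilde{S}_J\) is obtained the same way from \(\widetilde{V}_J\). Their sum should return the original signal.

```python
import numpy as np

# Haar MODWT filters (Haar DWT filters divided by sqrt(2)).
H_TILDE = np.array([0.5, -0.5])  # wavelet (high-pass)
G_TILDE = np.array([0.5, 0.5])   # scaling (low-pass)

def _filt(v, taps, step):
    """Circular filtering: out[t] = sum_l taps[l] * v[(t - l*step) mod N]."""
    return sum(c * np.roll(v, l * step) for l, c in enumerate(taps))

def _filt_T(v, taps, step):
    """Transpose of _filt: out[t] = sum_l taps[l] * v[(t + l*step) mod N]."""
    return sum(c * np.roll(v, -l * step) for l, c in enumerate(taps))

def modwt_mra(x, levels):
    """Haar MODWT multiresolution analysis: returns ([D_1, ..., D_J], S_J)."""
    x = np.asarray(x, dtype=float)
    W, v = [], x
    for j in range(1, levels + 1):  # forward pyramid
        step = 2 ** (j - 1)
        W.append(_filt(v, H_TILDE, step))
        v = _filt(v, G_TILDE, step)

    def down_to_level_zero(vec, level):
        # Apply the transposed scaling filters of levels `level`, ..., 1.
        for k in range(level, 0, -1):
            vec = _filt_T(vec, G_TILDE, 2 ** (k - 1))
        return vec

    # D_j = w_j^T W_j: transposed wavelet filter at level j, then the scaling filters below it.
    details = [down_to_level_zero(_filt_T(W[j - 1], H_TILDE, 2 ** (j - 1)), j - 1)
               for j in range(1, levels + 1)]
    smooth = down_to_level_zero(v, levels)  # S_J = v_J^T V_J
    return details, smooth

t = np.linspace(0.0, 1.0, 257)
signal = np.sin(2 * np.pi * 5 * t) + 0.2 * np.random.default_rng(1).normal(size=t.size)
D, S = modwt_mra(signal, levels=4)
print(np.allclose(sum(D) + S, signal))  # → True
```

Each \(\widetilde{D}_j\) has the same length as X, so the details and the smooth could be stacked as parallel input channels for a downstream network. The text does not specify how the components are fed to the network, so this stacking is an assumption.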

2.3 Convolutional neural network (CNN)

The CNN was originally developed primarily for image processing. In recent decades, the field has grown enormously and now extends to one-dimensional (1-D) signal processing and time series analysis. Many researchers have analyzed time-domain sensor signals using one-dimensional convolutional neural networks [31,32,33]. The fundamental concept of a CNN is the use of multiple filters in the convolution layer. These filters slide across the input data arranged as a time series, extracting significant features. The data under observation consist of samples X with associated labels Y = {y1, …, ym}. Each sample xi is characterized by n features, {xi1, xi2, xi3, …, xin}. Every input fed into the CNN is an element of the space \(\mathbb{R}^{n\times d}\), where d is the vector dimension and n is the number of features. The primary objective of convolution is to extract high-dimensional features from the given dataset. The following equation (Eq. 9) describes the convolution operation:
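In its standard one-dimensional form, written with the symbols defined in the next paragraph, each filter output is the activated dot product of the filter weights with a window of the input:

\[ y_i=f\left(w_i\cdot x_{i:i+l-1}+b_i\right) \tag{9} \]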

Here, \({x}_{i:i+l-1}\) is a segment of the input time-domain signal of length l, \({w}_{i}\) is the weight matrix, \({b}_{i}\) is the bias, and f(⋅) is the activation function. The feature map obtained from the convolution operation is:
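Consider a filter of length l sliding over an input of length n, assuming a stride of 1 and no padding (choices made here for illustration). The resulting feature map has \(n-l+1\) entries:

\[ \mathbf{y}=\left[y_1,\,y_2,\,\dots,\,y_{n-l+1}\right] \]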

After the convolution layer, the dimension of the feature map is often reduced using a pooling layer. Depending on the situation, this layer applies average or maximum pooling. The dimensionality reduction for average or maximum pooling can be stated as follows:
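Assume non-overlapping pooling windows of size s, a symbol introduced here for illustration. The kth pooled value is then:

\[ p_k^{\max}=\max_{(k-1)s<i\le ks} y_i,\qquad p_k^{\text{avg}}=\frac{1}{s}\sum_{i=(k-1)s+1}^{ks} y_i \]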

Figure 3 illustrates the feature extraction architecture based on two 1D-CNN layers.
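A NumPy sketch of a two-layer 1D-CNN feature extractor shows how convolution and pooling shrink a force segment. Several choices are assumptions: filter counts of 8 and 16, kernel lengths of 5 and 3, a ReLU activation, a pool size of 2, and a 120-sample, three-channel input. The random weights are untrained, so the sketch illustrates only the data flow, not the trained model.

```python
import numpy as np

def conv1d_relu(x, kernels, biases):
    """'Valid' 1-D convolution (cross-correlation, as in CNN libraries) followed by ReLU.

    x: (length, in_channels); kernels: (n_filters, kernel_len, in_channels);
    biases: (n_filters,). Returns an array of shape (length - kernel_len + 1, n_filters).
    """
    n_filters, k, _ = kernels.shape
    out = np.empty((x.shape[0] - k + 1, n_filters))
    for i in range(out.shape[0]):
        window = x[i:i + k]  # the segment x_{i:i+l-1}
        out[i] = np.tensordot(kernels, window, axes=([1, 2], [0, 1])) + biases
    return np.maximum(out, 0.0)  # f(.) = ReLU (an assumed activation)

def max_pool1d(y, size):
    """Non-overlapping max pooling along time; leftover samples are dropped."""
    n = (y.shape[0] // size) * size
    return y[:n].reshape(-1, size, y.shape[1]).max(axis=1)

rng = np.random.default_rng(0)
segment = rng.normal(size=(120, 3))  # hypothetical segment: 120 samples of Fx, Fy, Fz
h1 = max_pool1d(conv1d_relu(segment, rng.normal(size=(8, 5, 3)), np.zeros(8)), 2)
h2 = max_pool1d(conv1d_relu(h1, rng.normal(size=(16, 3, 8)), np.zeros(16)), 2)
print(h1.shape, h2.shape)  # → (58, 8) (28, 16)
```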

2.4 Bidirectional long short-term memory neural network (BiLSTM)

The Bidirectional Long Short-Term Memory (BiLSTM) model combines forward and backward LSTM cells, as depicted in Fig. 4b. BiLSTM models extend conventional LSTM models with bidirectional processing, enabling them to capture dependencies on both preceding and subsequent context. The internal nodes of a BiLSTM are the same as those of an LSTM and contain three main gates: forget, input, and output. Figure 4a shows the core principle of the LSTM. In the figure, i, f, g, and o denote the input gate, forget gate, cell candidate, and output gate, respectively.

The forget gate controls how much of the previous cell state is retained. The input gate selects which information to store from the current input \({x}_{t}\) and the output \({h}_{t-1}\) of the preceding LSTM cell.

The output gate then scales the hyperbolic tangent (tanh) of the cell state to compute the output \({h}_{t}\) of the LSTM cell. The three gates and the cell candidate are expressed mathematically as follows:
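The standard LSTM formulation uses input weights w, recurrent weights R, and biases b for each gate. The gates and the cell candidate are then:

\[ \begin{aligned} i_t&=\sigma\left(w_i x_t+R_i h_{t-1}+b_i\right)\\ f_t&=\sigma\left(w_f x_t+R_f h_{t-1}+b_f\right)\\ g_t&=\tanh\left(w_g x_t+R_g h_{t-1}+b_g\right)\\ o_t&=\sigma\left(w_o x_t+R_o h_{t-1}+b_o\right) \end{aligned} \]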

Here, \({h}_{t-1}\) is the output from the previous time step, and \({x}_{t}\) is the input at the current time step. The learnable network parameters are denoted by w, R, and b, and σ is the activation function. Activation functions give the network non-linearity, enabling it to find intricate relationships in the data. Common choices are the sigmoid, tanh (hyperbolic tangent), and ReLU (Rectified Linear Unit) functions. In the LSTM architecture, the sigmoid function is typically used in the input, forget, and output gates to regulate the flow of information. The tanh function is used for the cell candidate and the cell state values. Through these activations, the network controls the information flow and the memory stored in the cell state. This allows it to capture both short-term and long-term dependencies in sequential data. The short-term memory (\({\text{h}}_{t}\)) and long-term memory (\({\text{c}}_{t}\)) are expressed as follows:
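In the standard form, the cell state and output are updated with element-wise operations as:

\[ \begin{aligned} c_t&=f_t\otimes c_{t-1}\oplus i_t\otimes g_t\\ h_t&=o_t\otimes\tanh\left(c_t\right) \end{aligned} \]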

The symbols ⊗ and \(\oplus\) denote element-wise multiplication and addition, respectively. The BiLSTM model adds a backward (reverse) layer to the forward LSTM layer. The hidden-layer vectors of the forward and backward directions are combined. This accounts more comprehensively for contextual information and improves tool wear monitoring. The network structure is depicted in Fig. 4b, and the corresponding mathematical formulation is presented below.

The variables \({\text{h}}_{t}^{R}\), \({\text{h}}_{t}^{L}\), and \({\text{h}}_{t}\) represent the outputs of the forward, backward, and output layers at time t, respectively. The learnable weight parameters of the BiLSTM model are represented by w. A dense layer with a hyperbolic tangent (tanh) activation follows the BiLSTM layer. It performs the prediction task and outputs the tool wear value.
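The forward computation of a BiLSTM regressor can be sketched in NumPy under the following assumptions. The gate weights are stacked in the order i, f, g, o. The two directions are combined by concatenating the final forward state with the first backward state. Frameworks such as the Keras `Bidirectional` wrapper concatenate by default, whereas the text describes a weighted combination through w. All sizes and weights are hypothetical and untrained.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_pass(xs, w, R, b, hidden):
    """Run one LSTM direction over xs of shape (T, d); returns hidden states (T, hidden).

    w: (4*hidden, d) input weights, R: (4*hidden, hidden) recurrent weights,
    b: (4*hidden,) biases, stacked in gate order i, f, g, o.
    """
    h, c = np.zeros(hidden), np.zeros(hidden)
    states = []
    for x_t in xs:
        z = w @ x_t + R @ h + b
        i = sigmoid(z[:hidden])                # input gate
        f = sigmoid(z[hidden:2 * hidden])      # forget gate
        g = np.tanh(z[2 * hidden:3 * hidden])  # cell candidate
        o = sigmoid(z[3 * hidden:])            # output gate
        c = f * c + i * g                      # long-term memory c_t
        h = o * np.tanh(c)                     # short-term memory h_t
        states.append(h)
    return np.array(states)

def bilstm_wear(xs, fwd_params, bwd_params, dense_w, dense_b, hidden):
    """BiLSTM followed by a tanh dense layer that outputs a (normalized) wear value."""
    h_fwd = lstm_pass(xs, *fwd_params, hidden)              # reads t = 1..T
    h_bwd = lstm_pass(xs[::-1], *bwd_params, hidden)[::-1]  # reads t = T..1, re-aligned
    summary = np.concatenate([h_fwd[-1], h_bwd[0]])         # both directions have seen all T steps
    return np.tanh(dense_w @ summary + dense_b)

rng = np.random.default_rng(0)
T, d, H = 20, 3, 4  # hypothetical: 20 time steps, 3 input features, 4 hidden units

def random_params():
    return (rng.normal(scale=0.1, size=(4 * H, d)),
            rng.normal(scale=0.1, size=(4 * H, H)),
            np.zeros(4 * H))

wear = bilstm_wear(rng.normal(size=(T, d)), random_params(), random_params(),
                   rng.normal(scale=0.1, size=(1, 2 * H)), np.zeros(1), H)
print(wear.shape)  # → (1,)
```

The tanh output lies in (-1, 1), so this layout presumes that the wear targets are normalized to that range.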

3 Experimental setup and data description

3.1 Experimental setup

Milling wear experiments were performed on a CNC vertical milling machine using different cutting parameters to investigate tool wear in an actual manufacturing process. The vertical milling machine has a Haas control and a maximum spindle speed of 6000 rpm. Its maximum travels along the x, y, and z axes are 406, 305, and 254 mm, respectively. The experimental setup and study framework are presented in Fig. 5. Milling under different working conditions generates a dataset suitable for training and testing the deep learning regression model proposed in this work. The milling parameters for the training and testing data sets are listed in Table 1. Carbide endmills of different sizes and geometries are used in this work. The cutting speeds, feeds, and depths of cut were selected based on machining experience.

Several random factors, such as coolant and tool setup, can influence the accuracy of the acquired signal data. To control these factors, the alloy steel block is cut without cutting fluid, and the tool overhang is kept at 30 mm in all cutting tests. At the start of each test, a flat endmill with a diameter of 20 mm is used for a facing operation. This guarantees the flatness of the top surface and a consistent depth of cut in the subsequent operation. Hardened AISI H13 steel was chosen as the workpiece material because of its high-temperature strength and wear resistance. This steel is widely used in die and mold manufacturing, particularly in applications involving impact loads, such as forging dies, hot extrusion dies, and precision forging dies. The H13 steel block measures 150 mm in width, 250 mm in length, and 40 mm in height.

In this work, a force sensor is used to detect how changes in tool condition affect the cutting force signals. The three cutting force components are acquired using a Kistler piezoelectric dynamometer (type 9257B) installed on the machine table, at a sampling rate of 2 kHz. A Kistler charge amplifier (type 5070A) is used with the dynamometer. A DEWE-43 data acquisition card serves as the acquisition hardware and records all force signals during cutting. The data acquisition is managed using DEWEsoft software, version x. The flank wear (VB) of the tool is measured using an industrial optical microscope with a magnification of up to 5X.
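The 2 kHz sampling rate fixes how many force samples fall within each spindle revolution. At 2000 rev/min (test C#4), this gives 2000 × 60 / 2000 = 60 samples per revolution. The sketch below computes this value and cuts a three-component force record into fixed-length segments of the kind fed to the network. The window of 10 revolutions, the 50% overlap, and the function names are hypothetical, because the segment length is not given in this section.

```python
import numpy as np

FS_HZ = 2000  # dynamometer sampling rate given in the text (2 kHz)

def samples_per_revolution(fs_hz, spindle_rpm):
    """Force samples recorded during one spindle revolution."""
    return fs_hz * 60.0 / spindle_rpm

def segment_force_signal(forces, window, step):
    """Cut an (n_samples, 3) Fx/Fy/Fz record into windows of `window` samples.

    Consecutive windows start `step` samples apart; samples that do not fill a
    complete final window are discarded. Returns (n_segments, window, 3).
    """
    forces = np.asarray(forces, dtype=float)
    n_segments = 1 + (forces.shape[0] - window) // step
    starts = step * np.arange(max(n_segments, 0))
    idx = starts[:, None] + np.arange(window)[None, :]
    return forces[idx]

spr = samples_per_revolution(FS_HZ, 2000)  # 2000 rev/min, as in test C#4
print(spr)  # → 60.0
record = np.random.default_rng(0).normal(size=(6000, 3))  # 3 s of synthetic force data
segments = segment_force_signal(record, window=int(10 * spr), step=int(5 * spr))
print(segments.shape)  # → (19, 600, 3)
```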

3.2 Tool wear measurements

Tool wear was measured using two methods: online and offline. Several TCM studies have used optical microscopes for offline wear measurement [6, 34, 35]. In this study, an optical microscope was used to capture and measure the progressive flank wear (VB) according to the ISO 8688 standard. The flank wear width measured in the microscope photographs was used to calibrate the wear values extracted by the vision system. In offline measurement, repeatedly unloading and reloading the tool and tool holder reduces productivity and can cause significant deviations in the machined parts [36, 37]. To overcome this problem, this study proposes a tool wear inspection method based on projected tool images, in which tool wear is detected during the milling operation without removing the tool from the machine's main spindle. The progressively worn area of the rotating end mill is used to reflect the tool condition and to monitor tool life. For the online method, a novel vision system configuration for tool condition monitoring was established. The system aims to improve component quality by extracting tool wear from the projected tool image during cutting, as shown in Fig. 6.

The vision system checks the tool condition automatically without stopping the cutting operation. It consists of an image acquisition unit based on a CCD camera and a control and calculation unit, and it can assess the condition of milling cutters from both radial and axial perspectives. Its components are the CCD camera, a telecentric lens, an LED light source with a diffusing plate, a transmission cable, a jet-air nozzle, and a protective shell. Compressed air is blown through the nozzle onto the rotating tool to remove chips and other deposits from the tool body. The final output of the vision system is a single composite image formed from several images of each tool acquired as the tool rotates between the CCD camera and the backlight. The measurement resolution of the developed vision-based tool inspection device is 1.2 µm/pixel. Figure 7 presents the progressive tool wear obtained with the microscope, the vision system, and the image processing method for the cutting parameters of test C#4 (spindle speed = 2000 rev/min, feed = 300 mm/min, axial depth of cut = 1.2 mm, and radial depth of cut = 2 mm).

The figure shows that the experimental tool wear is strongly correlated with the tool image extracted by the vision system. As the cutting distance increases, tool wear grows and the region of interest in the projected tool images changes. The width of the white gap increases as the cutting edge wears, so the number or percentage of white pixels in the worn area is a strong indicator of tool wear. Based on this principle, our previous work proposed a vision-based method for tool condition classification of small endmills [38]. In the microscopic method, tool wear is quantified by measuring the wear land according to the ISO 8688 standard. The white pixel area is then correlated with the experimental flank wear VB.

The flow chart of the prediction model building, machining procedure, and projected-tool image processing is presented in Fig. 8. After capture, the tool images were further processed to isolate the worn area. First, the original image, with a resolution of 1024 × 1280 pixels, was cropped to a smaller image covering the worn region of interest. The cropped image was then converted to grayscale and binarized using Otsu's thresholding method. Next, the worn tool images were calibrated against the initial image of the fresh tooth. Finally, the percentage of white pixels representing the worn area was computed. Figure 9 depicts the image processing procedure used for tool wear extraction.
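A minimal NumPy sketch of the crop, grayscale, Otsu binarization, and white-pixel-percentage chain follows. The ROI bounds, the luminance weights used for grayscale conversion, and the baseline subtraction used to express the calibration against the fresh tooth are assumptions for illustration; the text does not specify them, and the study's own processing may have used other tooling.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the 8-bit threshold that maximizes Otsu's between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                   # probability of the class "<= t"
    mu = np.cumsum(prob * np.arange(256))     # cumulative first moment
    mu_total = mu[-1]                         # global mean gray level
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b2))


def to_gray_uint8(image):
    """Convert an RGB or already-gray image to 8-bit grayscale (assumed BT.601 weights)."""
    img = np.asarray(image, dtype=float)
    if img.ndim == 3:
        img = 0.299 * img[..., 0] + 0.587 * img[..., 1] + 0.114 * img[..., 2]
    return np.clip(np.rint(img), 0, 255).astype(np.uint8)


def white_pixel_percentage(image, roi):
    """Crop to roi = (row0, row1, col0, col1), binarize with Otsu, return % white pixels."""
    r0, r1, c0, c1 = roi
    gray = to_gray_uint8(np.asarray(image)[r0:r1, c0:c1])
    binary = gray > otsu_threshold(gray)      # white = brighter than the threshold
    return 100.0 * binary.mean()


def wear_indicator(worn_pct, fresh_pct):
    """Hypothetical calibration: white-pixel increase relative to the fresh tooth."""
    return worn_pct - fresh_pct
```

For a 1024 × 1280 frame, `white_pixel_percentage(frame, roi)` would be called with ROI bounds chosen to enclose the worn edge, once for the fresh tooth and once per worn image.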

Flank wear on the endmill teeth was measured using the offline approach. The flank wear increased gradually with cutting time, and cutting continued until the wear reached its maximum values. Following the ISO 8688 criteria, the maximum wear threshold for the cutting teeth was set at 400 µm (Fig. 10), and machining was stopped once the maximum flank wear reached this threshold.

Figure 10 compares the fresh and worn conditions of the tool teeth as seen with the microscope and the vision system. As the cutting distance and machining time increase, the worn region grows, and the white pixel area of the worn tool expands accordingly. Figure 11 shows three categories corresponding to three tool wear states: small, medium, and highly worn areas. As tool wear evolves, the tool contour changes, and it is this change that allows the vision system to monitor the condition of the cutting tool.

A significant advantage of this approach is its real-time capability: it enables in-process tool wear evaluation without interrupting cutting, whereas traditional offline microscopic methods typically require halting the cutting process. Using the white pixel count as a tool wear indicator therefore offers a faster and more efficient way to assess and respond to tool failure, which can improve machining performance and productivity. Figure 12 presents the tool wear, expressed as white pixel values, for the four tests used in this study. The wear plot shows that the white pixel counts vary between tests because of their different cutting conditions.

Figure 13 shows the correlation between pixel count and flank wear (VB) for the four tests. The correlation coefficients are 94.92%, 98.17%, 99.37%, and 94.92% for tests C#1, C#2, C#4, and C#6, respectively. With coefficients ranging from 94.92 to 99.37% across the milling experiments, the white pixel count closely tracks the progression of flank wear, which highlights the potential of image processing techniques for real-time tool wear assessment and process control. For industries that rely on precise machining, this supports the use of image processing methods to enable timely interventions and to improve the efficiency and quality of the manufacturing process.
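The per-test correlation in Fig. 13, together with a pixel-to-VB calibration, can be sketched as follows. The linear form of the calibration is an assumption, and the numbers in the usage lines are synthetic placeholders, not the study's measurements.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))


def fit_linear_calibration(pixel_pct, vb_um):
    """Least-squares line VB = slope * pixel_pct + intercept (assumed linear model)."""
    slope, intercept = np.polyfit(pixel_pct, vb_um, deg=1)
    return float(slope), float(intercept)


def pixels_to_vb(pixel_pct, slope, intercept):
    """Map white-pixel percentages to estimated flank wear in micrometres."""
    return slope * np.asarray(pixel_pct, dtype=float) + intercept


# Synthetic illustration only: white-pixel % and matching microscope VB (µm).
pixel_pct = [1.0, 2.0, 3.0, 4.0]
vb_um = [60.0, 110.0, 160.0, 210.0]
r = pearson_r(pixel_pct, vb_um)
slope, intercept = fit_linear_calibration(pixel_pct, vb_um)
```

Once fitted on microscope measurements, `pixels_to_vb` would convert in-process pixel counts into wear estimates without stopping the machine.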

3.3 Data preprocessing

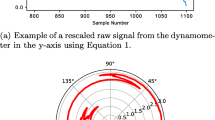

Seven milling experiments were conducted to evaluate the performance of the proposed model. Time series sensor data of different lengths were recorded in each milling test; in test C#1, for instance, the cumulative signal exceeds 400,000 time steps. Feeding such a long sequence to the prediction model is impractical, and aggressive downsampling (e.g., keeping only 1% of the samples) may distort the signal. The long signal is therefore divided into manageable segments, which makes model training more tractable. Segmentation also increases the number of training samples, which deep learning models need in order to learn effectively. All three force components, Fx, Fy, and Fz, were segmented. Before segmentation, the force signals were preprocessed to remove portions that do not reflect the actual state of the cutting pass, such as the tool entry and exit, so that only the steady-cutting portions corresponding to full engagement between the tool and the workpiece were segmented, as shown in Fig. 14.
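A minimal sketch of the trimming and segmentation step is shown below, assuming the start and end indices of the steady full-engagement region (Fig. 14) are already known; how they were located is not specified here, and any trailing samples that do not fill a whole 1000-sample window are simply dropped.

```python
import numpy as np


def steady_state_segments(signal, start, stop, seg_len=1000):
    """Keep samples [start, stop) of a (T,) or (T, channels) force record and split
    them into non-overlapping windows of seg_len samples.

    Returns an array of shape (n_segments, seg_len) or (n_segments, seg_len, channels).
    Samples left over after the last full window are discarded.
    """
    steady = np.asarray(signal)[start:stop]
    n_seg = len(steady) // seg_len
    return steady[: n_seg * seg_len].reshape((n_seg, seg_len) + steady.shape[1:])


# Stand-in for one recorded pass with Fx, Fy, Fz columns; entry/exit indices are hypothetical.
forces = np.random.default_rng(0).normal(size=(12_000, 3))
segs = steady_state_segments(forces, start=500, stop=11_500)   # 11 windows of 1000 samples
```

Non-overlapping windows keep the segments independent; overlapping windows would yield more samples at the cost of correlated training examples.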

Combining the three force signals into a single signal, rather than handling and analyzing three independent signals, makes signal processing more manageable and reduces overall complexity. This reduction in dimensionality can improve processing performance and simplify model training, and a single integrated signal is also easier to visualize and interpret than several signals at once. The force signals were therefore normalized and combined into one signal. In total, 2398 signal segments were extracted from all tests, each consisting of 1000 sample points, and used as model inputs. Tests C#1, C#2, C#3, C#4, C#5, C#6, and C#7 contributed 380, 295, 295, 312, 312, 402, and 402 segments, respectively. C#1, C#2, C#4, and C#6 were used to train the prediction models, whereas C#3, C#5, and C#7 were used as test datasets to evaluate the trained models.
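The text does not state how the three normalized components are merged, so the sketch below assumes one plausible choice: z-score each component with statistics from the training tests only, then take the Euclidean norm of the normalized components at each sample. Other combinations, such as normalizing the resultant force, would fit the description equally well.

```python
import numpy as np


def fit_component_stats(train_forces):
    """Per-component mean and std from training data of shape (..., 3) = Fx, Fy, Fz."""
    flat = np.asarray(train_forces, dtype=float).reshape(-1, 3)
    mean = flat.mean(axis=0)
    std = flat.std(axis=0)
    return mean, np.where(std > 0, std, 1.0)   # guard against constant channels


def combine_forces(forces, mean, std):
    """Z-score each component, then merge them into one signal (assumed: Euclidean norm)."""
    z = (np.asarray(forces, dtype=float) - mean) / std
    return np.sqrt(np.sum(z ** 2, axis=-1))
```

Fitting the statistics on C#1, C#2, C#4, and C#6 only keeps information from the test experiments out of the preprocessing. Applied to segments of shape `(n, 1000, 3)`, `combine_forces` returns `(n, 1000)` single-channel inputs.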

Because the acquired signals differ in length between successive wear measurements, the wear value of each signal segment is estimated by gridded interpolation between the measured wear values, from the lowest to the highest. This study compares the proposed deep learning model with two commonly used machine learning techniques and five deep learning approaches. Support vector regression (SVR) and a back propagation neural network (BPNN) were selected as the machine learning models because of their wide use in manufacturing. Machine learning approaches rely on signal features that are extracted and fed into the model. Fourteen features from the time, frequency, and time–frequency domains were extracted and used to train and test the machine learning models, and all features were normalized before being fed into the SVR and BPNN models. The mathematical expressions of the features are presented in Table 2. The features of test C#2 are plotted in Fig. 15a, and the corresponding correlation coefficients are shown in Fig. 15b. Pearson's correlation coefficient (Rcp) was used in this study; it is calculated as:

\[
R_{cp}=\frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}}
\]

where \(x\) is the signal feature, \(y\) is the tool wear, \(n\) is the number of samples, and \(\bar{x}\) and \(\bar{y}\) are their means. Most of the extracted features in the three domains correlate strongly with tool wear.
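The labeling and feature-screening steps can be sketched as follows, under two assumptions: linear interpolation (`numpy.interp`) stands in for the gridded interpolation, with each segment positioned by its cumulative cutting distance; and the five time-domain features shown are common examples, not the fourteen features of Table 2.

```python
import numpy as np


def label_segments(segment_pos, measured_pos, measured_vb):
    """Assign a wear value to each segment by linear interpolation between measured VB.

    measured_pos must be increasing (e.g. cumulative cutting distance at each inspection).
    """
    return np.interp(segment_pos, measured_pos, measured_vb)


def time_features(seg):
    """A few common time-domain features of one signal segment (illustrative subset)."""
    seg = np.asarray(seg, dtype=float)
    mean, std = seg.mean(), seg.std()
    return {
        "mean": mean,
        "std": std,
        "rms": np.sqrt(np.mean(seg ** 2)),
        "peak_to_peak": seg.max() - seg.min(),
        "kurtosis": np.mean((seg - mean) ** 4) / std ** 4 if std > 0 else 0.0,
    }


def feature_wear_correlation(segments, wear):
    """Pearson correlation (R_cp) between each feature and the segment wear labels."""
    feats = [time_features(s) for s in segments]
    return {
        name: float(np.corrcoef([f[name] for f in feats], wear)[0, 1])
        for name in feats[0]
    }
```

Features whose |R_cp| is close to 1 track wear almost linearly; in practice the feature values would be normalized before being passed to SVR or BPNN, as the text describes.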

4 Establishing a three-phase hybrid model

4.1 Multiresolution based on MODWT layer

This study combines the MODWT approach with the hybrid CNN-BiLSTM deep learning algorithm to analyze raw force signals. The MODWT algorithm decomposes the original cumulative force signal into scaling coefficients and five levels of detail coefficients. Figure 16 presents fresh and worn signals reconstructed with the MODWT method for the milling tests; only three levels of the reconstructed signals are used to train the deep learning model.
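A self-contained sketch of the MODWT pyramid algorithm and its multiresolution analysis (MRA), using circular filtering, is given below. For brevity it uses Haar filters; the study used a Symlet mother wavelet (Sect. 4.1), whose orthonormal scaling filter could be passed as `g` instead. Which three reconstructed components were kept is shown only in Fig. 16, so the selection in the usage lines is a placeholder.

```python
import numpy as np


def qmf(g):
    """Wavelet (high-pass) filter from a scaling filter: h[l] = (-1)**l * g[L-1-l]."""
    g = np.asarray(g, dtype=float)
    return np.array([(-1) ** l * g[len(g) - 1 - l] for l in range(len(g))])


def _filter(x, f, step, sign):
    """Circular filter: y[t] = sum_l f[l] * x[(t - sign*step*l) mod N]."""
    y = np.zeros_like(x)
    for l, c in enumerate(f):
        y += c * np.roll(x, sign * step * l)
    return y


def modwt(x, g, levels):
    """MODWT: returns detail coefficients [W_1, ..., W_J] and scaling coefficients V_J."""
    gt = np.asarray(g, dtype=float) / np.sqrt(2.0)   # MODWT rescaling of the filters
    ht = qmf(g) / np.sqrt(2.0)
    v = np.asarray(x, dtype=float)
    details = []
    for j in range(1, levels + 1):
        step = 2 ** (j - 1)                          # filters upsampled by 2**(j-1)
        details.append(_filter(v, ht, step, +1))
        v = _filter(v, gt, step, +1)
    return details, v


def _inverse_step(w, v, g, step):
    """One level of the inverse MODWT (adjoint of the forward step)."""
    gt = np.asarray(g, dtype=float) / np.sqrt(2.0)
    ht = qmf(g) / np.sqrt(2.0)
    return _filter(w, ht, step, -1) + _filter(v, gt, step, -1)


def modwt_mra(x, g, levels):
    """Additive decomposition x = D_1 + ... + D_J + S_J, returned in that order."""
    details, v = modwt(x, g, levels)
    zeros = np.zeros(len(x))
    parts = []
    for j in range(1, levels + 1):
        y = _inverse_step(details[j - 1], zeros, g, 2 ** (j - 1))
        for k in range(j - 1, 0, -1):
            y = _inverse_step(zeros, y, g, 2 ** (k - 1))
        parts.append(y)
    y = v
    for k in range(levels, 0, -1):
        y = _inverse_step(zeros, y, g, 2 ** (k - 1))
    parts.append(y)
    return parts


HAAR = [1 / np.sqrt(2.0), 1 / np.sqrt(2.0)]

rng = np.random.default_rng(0)
force = rng.normal(size=1000)               # stand-in for one combined force segment
parts = modwt_mra(force, HAAR, levels=5)    # D1..D5 and S5
selected = parts[:3]                        # placeholder choice of three components
```

Unlike the decimated DWT, the MODWT keeps every level at the full signal length, so each reconstructed component stays aligned sample-by-sample with the 1000-point input segment.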

There is no fixed rule for selecting the ideal mother wavelet when constructing the three-phase hybrid model, so a trial-and-error method is applied: candidate wavelets are tested iteratively and evaluated against predefined performance indicators. García Plaza et al. [39] studied a wide range of mother wavelets from the Daubechies, Haar, Coiflet, and Symlet families at different decomposition levels, using force signal analysis to monitor surface finish in cylindrical turning. Aralikatti et al. [40] analyzed vibration and force signals to diagnose cutting tool faults using the Haar mother wavelet. Because these studies did not identify a single best mother wavelet, this study used Symlet mother wavelets, which are commonly employed in tool condition monitoring applications.

4.2 CNN-BiLSTM model design

This paper presents a deep learning model integrated with a vision system to improve the stability and effectiveness of tool wear identification. The model combines a MODWT layer for multiresolution signal analysis with CNN and BiLSTM networks. The main goals are to automate the extraction of features relevant to tool wear and to optimize tool wear monitoring. To enhance the performance of the CNN-BiLSTM model, a normalization layer is used together with the pooling and ReLU activation layers. The convolutional layers are followed by a flatten layer that collapses the spatial dimensions of the input into the channel dimension. Next, two stacked BiLSTM layers extract deeper bidirectional temporal features. A dropout layer with a probability of 20% is used to prevent overfitting. A dense layer placed after the BiLSTM layers further processes the information they extract and maps it to the tool wear prediction output. The hyperparameters were selected by trial and error based on model performance. During training, the optimizer minimizes the loss value and thereby improves the model's performance; stochastic gradient descent with momentum (SGDM), Adam (Adaptive Moment Estimation), and RMSprop (Root Mean Square Propagation) were evaluated on the proposed networks. The training behavior of the CNN-BiLSTM model is compared with that of the proposed model (MODWT-CNN-BiLSTM). Figure 17a and b show the effect of the optimizer with all other parameters kept constant.
The optimizer has a significant impact on network performance, and SGDM performed poorly for the hybrid deep learning models. Based on the results in Fig. 17, the Adam optimizer offers several advantages over the other optimization algorithms, making it a good choice for these deep learning models.

The proposed model trained with the Adam optimizer performed better than with the other optimizers. Adam is widely used for deep learning models in the TCM literature because of its robustness and efficiency. It combines adaptive learning rates with momentum: for each parameter, it adjusts the step size based on running estimates of the first and second moments of the gradients, controlled by their decay rates. Compared with optimization algorithms that use a fixed learning rate, this allows the model to converge faster and more reliably while learning from the training data and reducing the prediction error.
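The three update rules compared in Fig. 17 can be written in a few lines each. This NumPy sketch applies them to a badly scaled toy quadratic rather than to the network; the learning rates and decay constants are common textbook defaults, not the values in Table 3, and the toy only illustrates the mechanics, not which optimizer wins on force data.

```python
import numpy as np


def sgdm_step(w, g, state, lr=0.005, momentum=0.9):
    """SGD with momentum: v <- momentum*v - lr*g; w <- w + v."""
    v = momentum * state.get("v", np.zeros_like(w)) - lr * g
    state["v"] = v
    return w + v


def rmsprop_step(w, g, state, lr=0.01, rho=0.9, eps=1e-8):
    """RMSprop: divide the step by a running RMS of the gradient."""
    s = rho * state.get("s", np.zeros_like(w)) + (1 - rho) * g ** 2
    state["s"] = s
    return w - lr * g / (np.sqrt(s) + eps)


def adam_step(w, g, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: momentum (first moment) plus a per-parameter adaptive step (second moment)."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", np.zeros_like(w)) + (1 - beta1) * g
    v = beta2 * state.get("v", np.zeros_like(w)) + (1 - beta2) * g ** 2
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1 ** t)   # bias correction for the zero-initialized moments
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)


# Toy loss 0.5 * sum(A * w**2) whose curvatures differ by a factor of 100.
A = np.array([1.0, 100.0])


def loss(w):
    return 0.5 * float(np.sum(A * w ** 2))


def run(step_fn, n_steps=500):
    w, state = np.array([1.0, 1.0]), {}
    for _ in range(n_steps):
        w = step_fn(w, A * w, state)   # gradient of the toy loss is A * w
    return loss(w)
```

Because RMSprop and Adam normalize by the gradient magnitude, both coordinates move at a similar pace despite the 100:1 curvature ratio, which is the per-parameter adaptivity described above.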

The improved model’s prediction accuracy and feasibility are verified through milling experiments. The model’s performance is compared to that of commonly used machine and deep learning algorithms. The proposed model network structure is depicted in Fig. 18, and the corresponding hyperparameters are illustrated in Table 3.

4.3 Comparative models design

To evaluate the accuracy and effectiveness of the proposed MODWT hybrid deep learning model, five common deep learning models were developed for comparison: Long Short-Term Memory (LSTM), Convolutional Neural Network (CNN), Bidirectional LSTM (BiLSTM), CNN-CNN, and CNN-BiLSTM. Two machine learning models, Support Vector Regression (SVR) and Backpropagation Neural Network (BPNN), were also included in the comparison. The models were constructed using Deep Network Designer and MATLAB code. The parameters of the TCM algorithms, which were selected based on knowledge and experience, are summarized below:

SVR is effective at detecting tool states and is widely used in tool identification systems. The SVR model was trained with the following parameters: a Radial Basis Function (RBF) kernel; a Box Constraint searched on a log scale within [1e-3, 1e3]; a Kernel Scale searched over positive values on a log scale within [1e-3, 1e3]; and an Epsilon parameter searched over positive values on a log scale within [1e-3, 1e2]. The interquartile range of the response Y was divided by 1.349, and this calculation was performed after standardizing the data.
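The search space above can be sketched as follows. Random log-uniform sampling is an assumption made for illustration (the text does not say how the ranges were searched), and the helper names are hypothetical. The IQR/1.349 quantity is a robust estimate of the standard deviation, because the interquartile range of a Gaussian distribution is about 1.349σ.

```python
import numpy as np


def log_uniform(rng, low, high, size):
    """Sample values whose logarithm is uniform on [log(low), log(high)]."""
    return np.exp(rng.uniform(np.log(low), np.log(high), size))


def iqr_scale(y, divisor=1.349):
    """Interquartile range divided by `divisor`; IQR/1.349 estimates the std of Gaussian data."""
    q75, q25 = np.percentile(y, [75, 25])
    return (q75 - q25) / divisor


def sample_svr_search_space(n, seed=0):
    """Random log-scaled candidates over the ranges stated in the text."""
    rng = np.random.default_rng(seed)
    return {
        "box_constraint": log_uniform(rng, 1e-3, 1e3, n),
        "kernel_scale": log_uniform(rng, 1e-3, 1e3, n),
        "epsilon": log_uniform(rng, 1e-3, 1e2, n),
    }
```

Sampling on a log scale gives each decade of the range equal weight, which matters when the plausible values span six orders of magnitude.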

BPNN, a feed-forward network trained by backpropagation, is widely used in industrial applications, and the multilayer perceptron (MLP) is its most common form. An MLP consists of an input layer, one or more hidden layers, and an output layer. The network was trained using the Levenberg–Marquardt (LM) learning algorithm with a learning rate of 0.01, and increment and decrement factors of 0.5 and 10, respectively, were used in training the artificial neural network (ANN) model. The tansig transfer function was used both between the input and hidden layer neurons and between the hidden and output layers.
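A compact NumPy sketch of Levenberg–Marquardt training for a one-hidden-layer tansig MLP follows. The network size, the finite-difference Jacobian, the initial damping `mu`, and the damping factors (0.1 after an accepted step, 10 after a rejected one, which are common LM defaults) are assumptions and do not reproduce the study's settings. Note that tansig(n) = 2/(1 + exp(-2n)) - 1 is mathematically identical to tanh(n).

```python
import numpy as np


def mlp_forward(theta, x, n_hidden):
    """One input, n_hidden tansig hidden units, one tansig output; theta packs W1, b1, W2, b2."""
    w1 = theta[:n_hidden]
    b1 = theta[n_hidden:2 * n_hidden]
    w2 = theta[2 * n_hidden:3 * n_hidden]
    b2 = theta[3 * n_hidden]
    hidden = np.tanh(np.outer(x, w1) + b1)     # (n_samples, n_hidden)
    return np.tanh(hidden @ w2 + b2)           # (n_samples,)


def numerical_jacobian(f, theta, eps=1e-6):
    """Forward-difference Jacobian of the residual vector f(theta)."""
    r0 = f(theta)
    jac = np.empty((r0.size, theta.size))
    for k in range(theta.size):
        shifted = theta.copy()
        shifted[k] += eps
        jac[:, k] = (f(shifted) - r0) / eps
    return jac


def train_lm(x, y, n_hidden=5, epochs=50, mu=1e-3, mu_dec=0.1, mu_inc=10.0, seed=0):
    """Levenberg-Marquardt on the sum of squared errors; returns (theta, loss history)."""
    rng = np.random.default_rng(seed)
    theta = rng.normal(0.0, 0.5, 3 * n_hidden + 1)
    residual = lambda th: mlp_forward(th, x, n_hidden) - y
    loss = float(np.sum(residual(theta) ** 2))
    history = [loss]
    for _ in range(epochs):
        jac = numerical_jacobian(residual, theta)
        grad = jac.T @ residual(theta)
        hess = jac.T @ jac                      # Gauss-Newton approximation
        while mu < 1e10:
            step = np.linalg.solve(hess + mu * np.eye(theta.size), -grad)
            new_loss = float(np.sum(residual(theta + step) ** 2))
            if new_loss < loss:                 # accept: lean toward Gauss-Newton
                theta, loss, mu = theta + step, new_loss, max(mu * mu_dec, 1e-10)
                break
            mu *= mu_inc                        # reject: lean toward gradient descent
        history.append(loss)
    return theta, history


x = np.linspace(-2.0, 2.0, 40)
y = 0.5 * np.sin(x)                             # toy target inside tansig's (-1, 1) range
theta, history = train_lm(x, y)
```

Because a step is only accepted when it lowers the error, the loss history is non-increasing; the damping `mu` is what lets LM blend between fast Gauss–Newton steps and cautious gradient steps.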

LSTM

The LSTM layer contains 100 hidden units. The dense layer has a single neuron that outputs the predicted tool wear, and a dropout probability of 20% is applied. The maximum number of epochs is 300, the validation frequency is 20, and the initial learning rate is 0.001. The BiLSTM model has the same structure as the LSTM model, except that the LSTM layer is replaced by a BiLSTM layer.
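The difference between the LSTM and BiLSTM models can be seen in a forward pass. The NumPy sketch below uses random, untrained weights, an assumed gate ordering (input, forget, candidate, output), and omits dropout because it is inactive at inference. Summarizing the sequence by the final forward state and the final backward state (which sits at t = 0) is also an assumption made for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm(rng, n_in, n_hidden, scale=0.1):
    """Random weights W (4H, n_in), U (4H, H), bias b (4H,); gate order i, f, g, o assumed."""
    return (rng.normal(0.0, scale, (4 * n_hidden, n_in)),
            rng.normal(0.0, scale, (4 * n_hidden, n_hidden)),
            np.zeros(4 * n_hidden))


def lstm_forward(x, W, U, b):
    """Run one LSTM over x of shape (T, n_in); return hidden states of shape (T, H)."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    out = np.empty((x.shape[0], H))
    for t in range(x.shape[0]):
        z = W @ x[t] + U @ h + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])          # input and forget gates
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])  # candidate and output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        out[t] = h
    return out


def bilstm_forward(x, fwd_params, bwd_params):
    """Concatenate a forward pass and a time-reversed backward pass: shape (T, 2H)."""
    h_fwd = lstm_forward(x, *fwd_params)
    h_bwd = lstm_forward(x[::-1], *bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)


rng = np.random.default_rng(0)
H = 100                                               # 100 hidden units, as in the text
segment = rng.normal(size=(1000, 1))                  # one 1000-sample input segment
states = bilstm_forward(segment, init_lstm(rng, 1, H), init_lstm(rng, 1, H))
summary = np.concatenate([states[-1, :H], states[0, H:]])
w_dense, b_dense = rng.normal(0.0, 0.1, 2 * H), 0.0   # single-neuron dense layer
wear_pred = float(summary @ w_dense + b_dense)
```

The BiLSTM doubles the feature width (2H) because every time step is described both by what precedes it and by what follows it in the segment.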

CNN

The Conv1D layer has 32 filters of size 3, with a Rectified Linear Unit (ReLU) activation function and causal padding. It is followed by a batch normalization layer and a 1-D global average pooling layer for downsampling. The dense layer has a single neuron. The maximum number of epochs is 300, the batch size is 150, the validation frequency is 20, the initial learning rate is 0.001, and the dropout probability is 20%.

CNN-CNN

The model has the same structure as the CNN model but with two Conv1D layers; the second Conv1D layer has 64 filters.

CNN-BiLSTM

The model consists of a Conv1D layer with 32 filters of size 3, ReLU activation, and causal padding, followed by a max pooling layer of size 5 with same padding and a batch normalization layer. A second Conv1D layer with 64 filters of size 3, ReLU activation, and causal padding is followed by a batch normalization layer and a flatten layer. The BiLSTM layer has 100 hidden units, and the dense layer has a single neuron. The maximum number of epochs is 300, the batch size is 150, the validation frequency is 20, and the initial learning rate is 0.001.
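The convolutional front end described above can be sketched in NumPy as follows. The pooling stride is not stated, so stride 1 is assumed (which keeps the sequence length unchanged); batch normalization is shown in its inference form with placeholder statistics; and the flatten and BiLSTM stages are left out because how the feature maps are reshaped for the recurrent layer depends on the framework.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def causal_conv1d(x, w, b):
    """x: (T, C_in), w: (K, C_in, C_out), b: (C_out,).
    Left-pads K-1 zeros so output t depends only on inputs t-K+1..t."""
    K, T = w.shape[0], x.shape[0]
    xp = np.vstack([np.zeros((K - 1, x.shape[1])), x])
    return b + sum(xp[k:k + T] @ w[k] for k in range(K))


def relu(x):
    return np.maximum(x, 0.0)


def max_pool_same(x, size=5):
    """Stride-1 max pooling with 'same' padding (output length equals input length)."""
    left = (size - 1) // 2
    xp = np.pad(x, ((left, size - 1 - left), (0, 0)), constant_values=-np.inf)
    return sliding_window_view(xp, size, axis=0).max(axis=-1)


def batch_norm_inference(x, mean, var, gamma, beta, eps=1e-5):
    """Per-channel batch normalization using stored (running) statistics."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def cnn_front_end(x, p):
    """Conv(32, k=3, causal)-ReLU-MaxPool(5, same)-BN-Conv(64, k=3, causal)-ReLU-BN."""
    y = max_pool_same(relu(causal_conv1d(x, p["w1"], p["b1"])), size=5)
    y = batch_norm_inference(y, *p["bn1"])
    y = relu(causal_conv1d(y, p["w2"], p["b2"]))
    return batch_norm_inference(y, *p["bn2"])


def bn_identity(c):
    """Placeholder statistics (mean 0, var 1, gamma 1, beta 0)."""
    return np.zeros(c), np.ones(c), np.ones(c), np.zeros(c)


rng = np.random.default_rng(0)
params = {
    "w1": rng.normal(0.0, 0.1, (3, 1, 32)), "b1": np.zeros(32), "bn1": bn_identity(32),
    "w2": rng.normal(0.0, 0.1, (3, 32, 64)), "b2": np.zeros(64), "bn2": bn_identity(64),
}
segment = rng.normal(size=(1000, 1))
features = cnn_front_end(segment, params)    # (1000, 64) feature maps
```

Causal padding means the convolution at time t never looks at later samples, so the feature sequence handed to the BiLSTM preserves the temporal ordering of the force signal.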

MODWT-CNN-BiLSTM

The structure of the proposed model is described in Sect. 4.2: Fig. 18 shows its architecture, and Table 3 lists its hyperparameters, which were selected by trial and error based on model performance.

4.4 Performance indicators

The current study examines data sets with different cutting parameters from end-milling tool wear tests, as outlined in Table 1. Each experiment consists of tool wear samples corresponding to force signal segments. Cross-validation is used to demonstrate the effectiveness and generalizability of the proposed model: the training data are randomly partitioned, with 90% of the samples used for training and the remaining 10% held out for cross-validation of the prediction model. The training dataset consists of C#1, C#2, C#4, and C#6, while the testing dataset includes C#3, C#5, and C#7. To compare the proposed TCM model with the other networks, three performance indicators are used: mean absolute error (MAE), root mean squared error (RMSE), and correlation coefficient (R), defined as:

\[
\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i}-{\widehat{y}}_{i}\right| \tag{17}
\]

\[
\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left({y}_{i}-{\widehat{y}}_{i}\right)^{2}} \tag{18}
\]

\[
R=\frac{\sum_{i=1}^{n}({y}_{i}-\bar{y})({\widehat{y}}_{i}-\bar{\widehat{y}})}{\sqrt{\sum_{i=1}^{n}({y}_{i}-\bar{y})^{2}}\,\sqrt{\sum_{i=1}^{n}({\widehat{y}}_{i}-\bar{\widehat{y}})^{2}}} \tag{19}
\]

where \({y}_{i}\) and \({\widehat{y}}_{i}\) are the actual and predicted wear, respectively, \(n\) is the number of samples, and \(\bar{y}\) and \(\bar{\widehat{y}}\) are their means.
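The three indicators and the random 90/10 partition can be sketched as follows. The seeded permutation and the rounding rule are assumptions, since the text states only the proportions.

```python
import numpy as np


def mae(y, y_hat):
    """Mean absolute error, Eq. (17)."""
    return float(np.mean(np.abs(np.asarray(y, float) - np.asarray(y_hat, float))))


def rmse(y, y_hat):
    """Root mean squared error, Eq. (18)."""
    return float(np.sqrt(np.mean((np.asarray(y, float) - np.asarray(y_hat, float)) ** 2)))


def corr_r(y, y_hat):
    """Correlation coefficient R between actual and predicted wear, Eq. (19)."""
    return float(np.corrcoef(y, y_hat)[0, 1])


def random_split(n_samples, train_frac=0.9, seed=0):
    """Random train/validation index split (seed and rounding are assumptions)."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    return idx[:n_train], idx[n_train:]


# Training tests C#1, C#2, C#4, C#6 contribute 380 + 295 + 312 + 402 = 1389 segments.
train_idx, val_idx = random_split(380 + 295 + 312 + 402)
```

With this rounding the 1389 training segments split into 1250 training and 139 validation samples. Because RMSE squares the errors, it is always at least as large as MAE and penalizes occasional large wear errors more heavily.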

5 Results and discussion

The wear prediction curves of the proposed model and of the common machine learning models for the training and testing data sets are shown in Figs. 19 and 20, respectively. The figures show that the predictions of the proposed model correlate more strongly with the true tool wear values and that deep learning predicts tool wear more accurately than the two machine learning algorithms. SVR is widely applied in TCM, particularly for small to medium-sized datasets; however, it may perform poorly on complex, high-dimensional data, and its accuracy can be limited when the relationships in tool wear prediction are intricate. BPNN is a traditional artificial neural network that has been used in various TCM applications. It is flexible and can learn complex patterns, but it is prone to problems such as vanishing gradients and overfitting, which may require careful hyperparameter tuning to mitigate. Adaptability and robustness are also important: SVR and BPNN are sensitive to their hyperparameters and may need extensive fine-tuning to deliver optimal results. Because machine learning is not the focus of this study, hyperparameter optimization was not applied to the machine learning models.

In contrast, the proposed model, which integrates the three algorithms, can capture complex relationships between the raw force signals and tool wear. Unlike SVR and BPNN, the proposed model extracts relevant features automatically, which reduces the time spent on data preprocessing and the need for manual feature engineering, allowing it to outperform the machine learning methods. The wear prediction curves of the proposed model and of the common deep learning models for the training and testing data sets are shown in Figs. 21 and 22, respectively. Although the proposed model was compared with five deep learning models (LSTM, BiLSTM, CNN, CNN-CNN, and CNN-BiLSTM), only LSTM and CNN-BiLSTM are shown in Figs. 21 and 22 owing to space limitations.

The proposed model also achieved higher accuracy than all the other deep learning models, and its predictions correlate more strongly with the true tool wear values. The MAE and RMSE indicators calculated from Eqs. (17) and (18) are shown in Figs. 23 and 24 for the training and testing data, respectively, and the correlation coefficients calculated from Eq. (19) are listed in Table 4, where the highest R values are in bold. The R values show that the proposed model achieves the highest prediction accuracy, with an average R of 99.5%, compared with average values of 95.4%, 96.5%, 97.1%, 95.2%, 96.9%, 97.3%, and 97.5% for SVR, BPNN, LSTM, CNN, BiLSTM, CNN-CNN, and CNN-BiLSTM, respectively.

For test C#1, the deep learning models generally achieved better results than the machine learning tools. SVR and BPNN yielded root mean square errors (RMSE) of 6.72 and 7.47, respectively, whereas the deep learning models LSTM, CNN, BiLSTM, CNN-CNN, and CNN-BiLSTM achieved RMSE values of 5.31, 4.87, 5.04, 5.07, and 4.45, respectively. A lower RMSE indicates better prediction performance. Deep learning models, known for their ability to automatically learn and extract intricate patterns from data, therefore showed promising results in this study.

Compared with the machine learning models, the deep learning models reduce RMSE notably in C#1, showing the potential of deep learning for tool condition monitoring. However, this advantage does not hold in every test: in some tests, SVR and BPNN achieved lower MAE and RMSE values than some of the deep learning models. For instance, in test C#2, the BPNN model achieved MAE and RMSE values of 5.81 and 7.5, respectively, which are lower than the errors of CNN and BiLSTM in the same test; CNN yielded an MAE of 6.62 and an RMSE of 7.76. Furthermore, SVR performed well compared with the CNN model on the C#7 test data set.

The hybrid models, CNN-CNN and CNN-BiLSTM, generally outperformed the single deep learning models. The CNN-BiLSTM model is designed to leverage the strengths of both CNN and BiLSTM: CNNs excel at extracting features from grid-structured data, which makes them well suited to signal-based tool wear prediction, while BiLSTMs model sequential dependencies, so the combination captures both local and temporal patterns. For instance, in test C#2, CNN-CNN achieved an RMSE of 4.96, compared with 10.08 for CNN, an improvement of 50.79% for the hybrid model. The CNN-BiLSTM model shows a similar improvement. However, although hybrid algorithms can improve the capability and efficiency of the prediction model, their results in tests C#6 and C#7 were less accurate than those of a single deep learning model.
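The 50.79% improvement quoted for test C#2 is the relative RMSE reduction of CNN-CNN with respect to CNN:

\[
\frac{\mathrm{RMSE}_{\text{CNN}}-\mathrm{RMSE}_{\text{CNN-CNN}}}{\mathrm{RMSE}_{\text{CNN}}}\times 100\%=\frac{10.08-4.96}{10.08}\times 100\%=\frac{5.12}{10.08}\times 100\%\approx 50.79\%
\]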

Among all prediction models, the proposed model, which combines the maximal overlap discrete wavelet transform layer with the hybrid CNN-BiLSTM deep learning tools, performed best in all experimental tests. Compared with the two common machine learning regression models, the MAE of the proposed method, calculated according to Eq. (17), is reduced on average by 80.4%, 84.6%, 81.54%, 77.62%, 86.57%, 87.54%, and 81.35% for the data sets of tests C#1, C#2, C#3, C#4, C#5, C#6, and C#7, respectively. Compared with the five deep learning methods, the proposed model achieved the lowest MAE and RMSE values, reducing the MAE on average by 44.33%, 64.07%, 55.21%, 33.65%, 34.85%, 71.45%, and 62.46% for tests C#1, C#2, C#3, C#4, C#5, C#6, and C#7, respectively.

Figure 25 shows the progression of the training loss and of the validation RMSE for the compared prediction methods. For all models, the loss decreases as the number of training iterations increases and eventually drops to a much lower value. During training, the proposed MODWT-CNN-BiLSTM model shows a marked decrease in loss and converges, indicating that it learns from the training dataset and improves its predictive capacity. As the iterations progress, the loss reaches a stable value, indicating convergence to a robust and reliable model.

In conclusion, the comparison between commonly used regression methods and the proposed deep learning method, which integrates the MODWT layer with CNN and BiLSTM for tool wear prediction, highlights the advantages of each approach. The proposed model stands out by exploiting the wavelet-based multiresolution analysis provided by the MODWT layer, making it well suited to diverse tool wear prediction scenarios based on sensor signals. Its combination of spatial and temporal modeling, together with its ability to adapt to varying data sizes and complexities, makes it a robust and versatile solution for tool monitoring and prediction in industrial settings.

6 Conclusion

This study presents new methods for detecting tool wear in milling operations. Tool wear can be identified using a vision system that applies image processing techniques to extract pixel counts, which serve as a reliable indicator of tool condition during cutting. In addition, a novel deep learning model for predicting tool wear is proposed, which integrates the maximal overlap discrete wavelet transform with a hybrid combination of CNN and BiLSTM. The prediction accuracy of the proposed model is assessed through cutting operations with various milling parameters, and its performance is compared with prediction methods commonly used in TCM applications. The key conclusions of the paper are as follows:

-

This study proposes an online and in-process tool wear extraction technique based on projected tool images. Using different rotating cutting tools, a strong correlation between the flank wear (VB) and the pixel method is observed. In various milling experiments, correlation coefficients range from 94.92% to 99.37%, indicating that white pixel counts accurately reflect tool wear progression. This finding significantly affects precise machining and can improve the overall efficiency and quality of the manufacturing process.

-

A novel prediction method is proposed by utilizing the MODWT method for signal analysis combined with a hybrid deep learning algorithm, CNN-BiLSTM, for tool wear recognition. Applying the MODWT technique significantly enhances efficiency and prediction accuracy. The proposed model has the highest regression coefficient R compared with common prediction methods, equal to 99.5% on average.

- To benchmark the prediction accuracy of the proposed model, comparative models (SVR, BPNN, LSTM, CNN, BiLSTM, CNN-CNN, and CNN-BiLSTM) were applied to segmented raw force signals and multi-domain features. Compared with SVR, BPNN, LSTM, CNN, BiLSTM, CNN-CNN, and CNN-BiLSTM, the proposed model reduces the cumulative RMSE by 66.4%, 61.1%, 55.8%, 60.5%, 54.2%, 46.7%, and 50%, respectively. The proposed model achieves the lowest cumulative RMSE and MAE for all experimental data, improving prediction accuracy.
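The RMSE and MAE comparison above rests on simple error metrics and a relative reduction. The sketch below illustrates them under stated assumptions; it is not the authors' evaluation code, and the function names are hypothetical. The aggregation behind "cumulative RMSE" is not specified here, so the sketch treats each reported percentage as (baseline - proposed) / baseline of the aggregated RMSE. Under that reading, a 66.4% reduction relative to SVR means the proposed model's RMSE is 0.336 times that of SVR.

```python
"""Sketch of the error metrics behind the RMSE/MAE model comparison."""
import math


def _check(y_true, y_pred):
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted wear values."""
    _check(y_true, y_pred)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error between measured and predicted wear values."""
    _check(y_true, y_pred)
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def percent_reduction(baseline, proposed):
    """Relative decrease of an error metric versus a baseline model, in percent."""
    return 100.0 * (baseline - proposed) / baseline


# Reductions reported in the text; a reduction r (%) implies proposed/baseline = 1 - r/100
REPORTED_RMSE_REDUCTION = {
    "SVR": 66.4, "BPNN": 61.1, "LSTM": 55.8, "CNN": 60.5,
    "BiLSTM": 54.2, "CNN-CNN": 46.7, "CNN-BiLSTM": 50.0,
}

if __name__ == "__main__":
    for name, r in REPORTED_RMSE_REDUCTION.items():
        print(f"vs {name}: proposed RMSE = {1 - r / 100:.3f} x baseline RMSE")
```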

Identifying tool wear states is of significant importance in intelligent manufacturing. The proposed methodology addresses the problem of tool wear detection by combining direct and indirect methods. This research presents an efficient and reliable method for monitoring tool wear that combines machine vision and force signals. Using machine vision data together with hybrid deep learning tools streamlines the acquisition of tool wear images and enables accurate labeling of force signals, which simplifies the model and improves its efficiency. The high recognition accuracy further demonstrates the effectiveness and adaptability of the proposed integrated tool wear monitoring model. By leveraging advanced imaging technology and integrating it with CNC control systems, manufacturers and researchers can significantly improve efficiency, quality, and cost-effectiveness. This approach highlights the potential of Industry 4.0 technologies to automate manufacturing processes and to provide a competitive advantage in both the global marketplace and academic research. Future research should focus on improving the identification accuracy of the tool monitoring model by optimizing its parameters. Future work will also incorporate other sensor signals, such as vibration and acoustic emission.
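The pixel-count wear indicator and its correlation with VB, summarized in the key points above, can be sketched as follows. This is a simplified illustration, not the authors' image processing pipeline. It makes several assumptions:

- a grayscale image already cropped to the projected tool region;
- a fixed global threshold, where the value 128 is hypothetical;
- the Pearson coefficient as the correlation measure.

The names `white_pixel_count` and `pearson_r` are also hypothetical. On this scale, the reported correlations of 94.92% to 99.37% correspond to r = 0.9492 to 0.9937. How the white region relates physically to the worn edge depends on the imaging setup, which this sketch does not model.

```python
"""Sketch: white-pixel count from a thresholded tool image and its
Pearson correlation with measured flank wear VB (illustrative only)."""
import math


def white_pixel_count(gray, threshold=128):
    """Count pixels at or above `threshold` in a 2-D grayscale image,
    given as a list of rows of 0-255 intensities (hypothetical threshold)."""
    return sum(1 for row in gray for p in row if p >= threshold)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical 3x4 grayscale patch (0 = black, 255 = white)
    patch = [[0, 40, 200, 255], [10, 130, 250, 90], [0, 0, 180, 60]]
    print(white_pixel_count(patch))  # prints 5
    # Hypothetical pixel counts and VB values (mm), for illustration only
    counts = [1200, 1350, 1500, 1710, 1900]
    vb = [0.05, 0.08, 0.11, 0.15, 0.19]
    print(f"r = {pearson_r(counts, vb):.4f}")
```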

Data availability

The data supporting this study's findings are available from the corresponding author upon reasonable request.

References

Wang M, Zhou J, Gao J, Li Z, Li E (2020) Milling tool wear prediction method based on deep learning under variable working conditions. IEEE Access XX:1–9. https://doi.org/10.1109/ACCESS.2020.3010378

Zheng H, Lin J (2019) A deep learning approach for high speed machining tool wear monitoring. Proc 2019 3rd IEEE Int Conf Robot Autom Sci ICRAS 2019. https://doi.org/10.1109/ICRAS.2019.8809070

Mohanraj T, Yerchuru J, Krishnan H, Nithin Aravind RS, Yameni R (2021) Development of tool condition monitoring system in end milling process using wavelet features and Hoelder’s exponent with machine learning algorithms. Meas J Int Meas Confed 173:108671. https://doi.org/10.1016/j.measurement.2020.108671

Bai L, Liu H, Zhang J, Zhao W (2023) Real-time tool breakage monitoring based on dimensionless indicators under time-varying cutting conditions. Robot Comput Integr Manuf 81:102502. https://doi.org/10.1016/j.rcim.2022.102502

Wang G, Guo Z, Yang Y (2013) Force sensor based online tool wear monitoring using distributed Gaussian ARTMAP network. Sensors Actuators, A Phys 192:111–118. https://doi.org/10.1016/j.sna.2012.12.029

Madhusudana CK, Kumar H, Narendranath S (2017) Face milling tool condition monitoring using sound signal. Int J Syst Assur Eng Manag 8:1643–1653. https://doi.org/10.1007/s13198-017-0637-1

Anahid MJ, Heydarnia H, Niknam SA, Mehmanparast H (2020) Evaluating the sensitivity of acoustic emission signal features to the variation of cutting parameters in milling aluminum alloys: part A: frequency domain analysis. Proc Inst Mech Eng Part B J Eng Manuf. https://doi.org/10.1177/0954405420949127

Zhou Y, Sun W (2020) Tool wear condition monitoring in milling process based on current sensors. IEEE Access 8:95491–95502. https://doi.org/10.1109/ACCESS.2020.2995586

He Z, Shi T, Xuan J, Li T (2021) Research on tool wear prediction based on temperature signals and deep learning. Wear 478–479:203902. https://doi.org/10.1016/j.wear.2021.203902

Cao K, Han J, Xu L, Shi T, Liao G, Liu Z (2022) Real-time tool condition monitoring method based on in situ temperature measurement and artificial neural network in turning. Front Mech Eng 17:1–15. https://doi.org/10.1007/s11465-021-0661-3

Mannan MA, Mian Z, Kassim AA (2004) Tool wear monitoring using a fast Hough transform of images of machined surfaces. Mach Vis Appl 15:156–163. https://doi.org/10.1007/s00138-004-0137-6

Vaishnav S, Agarwal A (2020) Machine learning-based instantaneous cutting force model for end milling operation. J Intell Manuf 31:1353–1366. https://doi.org/10.1007/s10845-019-01514-8

You Z, Gao H, Guo L, Liu Y, Li J (2020) On-line milling cutter wear monitoring in a wide field-of-view camera. Wear 460–461. https://doi.org/10.1016/j.wear.2020.203479

Yuan J, Liu L, Yang Z, Bo J, Zhang Y (2021) Tool wear condition monitoring by combining spindle motor current signal analysis and machined surface image processing. Int J Adv Manuf Technol 116:2697–2709. https://doi.org/10.1007/s00170-021-07366-y

Chen M, Li M, Zhao L, Liu J (2023) Tool wear monitoring based on the combination of machine vision and acoustic emission. Int J Adv Manuf Technol 125:3881–3897. https://doi.org/10.1007/s00170-023-11017-9

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. The MIT Press

Tercan H, Meisen T (2022) Machine learning and deep learning based predictive quality in manufacturing: a systematic review. J Intell Manuf 33:1879–1905. https://doi.org/10.1007/s10845-022-01963-8

Cao D, Sun H, Zhang J, Mo R (2020) In-process tool condition monitoring based on convolution neural network. Jisuanji Jicheng Zhizao Xitong/Computer Integr Manuf Syst CIMS 26. https://doi.org/10.13196/j.cims.2020.01.008

Nguyen VT, Pham VH (2020) Deep stacked auto-encoder network based tool wear monitoring in the face milling process. J Mech Eng 66:227–234. https://doi.org/10.5545/sv-jme.2019.6285

Ma J, Luo D, Liao X, Zhang Z, Huang Y, Lu J (2021) Tool wear mechanism and prediction in milling TC18 titanium alloy using deep learning. Measurement 173:108554. https://doi.org/10.1016/j.measurement.2020.108554

Xia M, Li T, Shu T, Wan J, Silva C, Wang Z (2019) A two-stage approach for the remaining useful life prediction of bearings using deep neural networks. IEEE Trans Ind Informatics 15:3703–3711

Zhao R, Yan R, Wang J, Mao K (2017) Learning to monitor machine health with convolutional bi-directional LSTM networks. Sensors (Switzerland) 17:273. https://doi.org/10.3390/s17020273

An Q, Tao Z, Xu X, El Mansori M, Chen M (2020) A data-driven model for milling tool remaining useful life prediction with convolutional and stacked LSTM network. Meas J Int Meas Confed 154:107461. https://doi.org/10.1016/j.measurement.2019.107461

Li B, Lu Z, Jin X, Zhao L (2023) Tool wear prediction in milling CFRP with different fiber orientations based on multi-channel 1DCNN-LSTM. J Intell Manuf. https://doi.org/10.1007/s10845-023-02164-7

Ghosh N, Ravi YB, Patra A, Mukhopadhyay S, Paul S, Mohanty AR, Chattopadhyay AB (2007) Estimation of tool wear during CNC milling using neural network-based sensor fusion. Mech Syst Signal Process 21:466–479. https://doi.org/10.1016/j.ymssp.2005.10.010

Patnaik B, Mishra M, Bansal RC, Jena RK (2021) MODWT-XGBoost based smart energy solution for fault detection and classification in a smart microgrid. Appl Energy 285:116457. https://doi.org/10.1016/j.apenergy.2021.116457

Costa FB, Neto CMS, Carolino SF, Ribeiro RLA, Barreto RL, Rocha TOA, Pott P (2012) Comparison between two versions of the discrete wavelet transform for real-time transient detection on synchronous machine terminals. 2012 10th IEEE/IAS Int Conf Ind Appl INDUSCON 2012 1–5. https://doi.org/10.1109/INDUSCON.2012.6453533

Liu D, Dysko A, Hong Q, Tzelepis D, Booth C (2022) Transient wavelet energy-based protection scheme for inverter-dominated microgrid. IEEE Trans Smart Grid 13:2533–2546. https://doi.org/10.1109/TSG.2022.3163669

Ashok V, Yadav A (2020) A real-time fault detection and classification algorithm for transmission line faults based on MODWT during power swing. Int Trans Electr Energy Syst 30:1–27. https://doi.org/10.1002/2050-7038.12164

Zhu L, Wang Y, Fan Q (2014) MODWT-ARMA model for time series prediction. Appl Math Model 38:1859–1865. https://doi.org/10.1016/j.apm.2013.10.002

Chen HY, Lee CH (2021) Deep learning approach for vibration signals applications. Sensors 21. https://doi.org/10.3390/s21113929

Hong CW, Lee K, Ko MS, Kim JK, Oh K, Hur K (2020) Multivariate time series forecasting for remaining useful life of turbofan engine using deep-stacked neural network and correlation analysis. Proc - 2020 IEEE Int Conf Big Data Smart Comput BigComp 2020 63–70. https://doi.org/10.1109/BigComp48618.2020.00-98

Wu C, Jiang P, Ding C, Feng C, Chen T (2019) Intelligent fault diagnosis of rotating machinery based on one-dimensional convolutional neural network. Comput Ind 108:53–61. https://doi.org/10.1016/j.compind.2018.12.001

Javed K, Gouriveau R, Li X, Zerhouni N (2018) Tool wear monitoring and prognostics challenges: a comparison of connectionist methods toward an adaptive ensemble model. J Intell Manuf 29:1873–1890. https://doi.org/10.1007/s10845-016-1221-2

Tang L, Sun Y, Li B, Shen J, Meng G (2019) Wear performance and mechanisms of PCBN tool in dry hard turning of AISI D2 hardened steel. Tribol Int. https://doi.org/10.1016/j.triboint.2018.12.026

Sun XG, Sun L, Wang EH (2014) Study on joint surface parameter identification method of shaft-toolholder and toolholder-tool for vertical CNC milling machine. Mach Tool Hydraul 42:106–109

Wang B, Sun W, Wen B (2012) The finite element modeling of high-speed spindle system dynamics with spindle-holder-tool joints. Jixie Gongcheng Xuebao (Chinese J Mech Eng) 48:83–89

Abdeltawab A, Zhang X, Zhang L (2023) Tool wear classification based on maximal overlap discrete wavelet transform and hybrid deep learning model. Int J Adv Manuf Technol. https://doi.org/10.1007/s00170-023-12797-w

García Plaza E, Núñez López PJ (2018) Analysis of cutting force signals by wavelet packet transform for surface roughness monitoring in CNC turning. Mech Syst Signal Process 98:634–651. https://doi.org/10.1016/j.ymssp.2017.05.006

Aralikatti SS, Ravikumar KN, Kumar H, Shivananda NH, Sugumaran V (2020) Comparative study on tool fault diagnosis methods using vibration signals and cutting force signals by machine learning technique. SDHM Struct Durab Heal Monit 14:127–145. https://doi.org/10.32604/SDHM.2020.07595

Acknowledgements

Thanks to Shanghai WPT Company for providing all the hardware to support this study. Thanks to the Chinese government scholarship (CSC) for financial support.

Funding

This study was funded by the Chinese government scholarship (CSC).

Author information


Contributions

Ahmed Abdeltawab: writing (original draft), writing (review and editing), idea, experimental work, signal and image processing, CNC machining and programming, data analysis, investigation, methodology, validation.

Zhang Xi: project administration, idea, review and editing, experimental resource support, methodology, validation, and supervision.

Zhang Longjia: vision system application, experimental work, data analysis, investigation, methodology, validation.


Ethics declarations

Consent to participate

Not applicable

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Abdeltawab, A., Xi, Z. & Longjia, Z. Enhanced tool condition monitoring using wavelet transform-based hybrid deep learning based on sensor signal and vision system. Int J Adv Manuf Technol (2024). https://doi.org/10.1007/s00170-024-13680-y


DOI: https://doi.org/10.1007/s00170-024-13680-y