Abstract

Convolutional neural networks, recurrent neural networks, and transformers have excelled in representation learning for large-scale multi-label text classification. However, there have been very few works that have incorporated topic information in the process of encoding textual sequential semantics, partly because the text’s topic needs to be modeled separately. To address this, we introduce the latent topic-aware encoder (TAE), designed for large-scale multi-label text classification. The TAE features two key components: a latent topic attention module that correlates latent topic vectors with word hidden vectors and a topic-fused channel attention module that processes topic-specific text representations to produce a refined final text representation. Our experiments demonstrate that TAE seamlessly integrates with existing deep models, significantly enhancing their classification accuracy and convergence speed across various datasets.


1 Introduction

The information age has brought about a surge in text data, turning it into a valuable asset. However, the inherently unstructured nature of text poses significant challenges for organizing and using it. Text classification has become essential in areas such as sentiment analysis, information retrieval, dialogue systems, and text-driven recommendation systems [1], and accurate classification is a prerequisite for much downstream processing. Traditional multi-class text classification assigns a single label to each text sample. In contrast, multi-label text classification (MLTC) tags each text with multiple relevant labels from a predefined label set, reflecting the fact that a text can be associated with several labels at once [2]. In large-scale multi-label classification, the label space can include thousands or even tens of thousands of labels. The sparsity of text-label associations, together with the skewed distribution of label frequencies, poses notable difficulties in such scenarios. Consequently, improving multi-label text classification approaches is a focus of research in both academia and industry.

High-quality text representation is essential for multi-label classification; among the available approaches, topic models and word embedding methods stand out as notable techniques for text modeling. The topic model, exemplified by Latent Dirichlet Allocation (LDA) [3], is a widely used text analysis tool in machine learning, natural language processing, and data mining [4, 5]. It extracts themes from a corpus based on word associations within documents, revealing semantic links between words and producing coherent topics composed of semantically related terms. Despite its effectiveness, traditional topic modeling treats each document as an unordered collection of words and therefore ignores word order. Conversely, word embedding methods, as used in deep learning architectures including convolutional neural networks (CNN) [6], recurrent neural networks (RNN) [7], and the Transformer [8], concentrate on word-level relationships by representing words as continuous vectors in a low-dimensional space that capture their similarities and connections. These neural network models have proven highly adept at semantic text representation through word vectors. In text classification, many deep learning models focus on extracting features from text and on the binary association between a text and each label. By aligning word representations with labels, they strive to enhance classification accuracy, as demonstrated by models like TextCNN [6], XML-CNN [9], and AttentionXML [10].

Merging topic models and word embeddings has emerged as an effective strategy for building richer text representations, as evidenced by recent studies [11, 12]. The combination retains the distinct advantages of both methods in multi-label text classification, where a text's topic may correspond to multiple labels. For instance, a text labeled "rock" is likely also related to the "band" and "music" labels, all within the broader music category. High-frequency words often define the topics of a text: in texts tagged with "music", terms like "guitar" and "band" highlight the musical theme. Word embeddings, in contrast, focus on the contextual relationships between words, offering better representations of related terms and thereby improving sentence-level and document-level representations. Models such as Topic-bert [5], which integrates topic models with pre-training, and MLC-LWL [13], which employs labeled topic models for word-level tagging, demonstrate the utility of topic information in classification. Moreover, [14] shows the effectiveness of incorporating topic-related information in a multimodal classification model. However, these models typically rely on separately constructed traditional topic models to extract topics, a practice that may limit how well topic information can be exploited to improve classification with modern deep learning models.

Accordingly, this paper introduces a lightweight module named TAE that enables deep learning architectures to assimilate latent topic information directly within the text sequence modeling process, eliminating the need for a separate traditional topic model. As a standalone module, TAE uses latent topic vectors to gauge each word's relevance to various topics, thereby capturing the text's feature representations under different latent topics. After these features are reweighted and combined, the module yields a text representation enriched with latent topic information. The TAE module can be incorporated into deep learning frameworks such as CNNs, RNNs, and Transformers to enhance their classification capabilities. Empirical studies on three datasets show that TAE significantly boosts the performance of various foundational network architectures.

The main contributions of this paper are as follows:

- We propose TAE, which extracts latent topic information without an additional topic model while preserving the semantic order of the text. By introducing latent topic vectors, it efficiently captures the latent topics of the text.
- TAE can be easily integrated into existing neural network architectures for text classification. Specifically, the base network produces the hidden vectors of the text's words, which are then fed into TAE.
- TAE has been evaluated across three datasets and several neural networks, demonstrating its adaptability and efficacy. Notably, it improves performance and accelerates convergence in large-scale multi-label classification problems.

The remainder of this paper is organized as follows: Section 2 reviews the related literature. Section 3 describes the proposed model, including the latent topic attention and topic-fused channel attention modules. Section 4 presents the TAE-enhanced variants built on different backbone networks. Section 5 evaluates the proposed model and analyzes the results. Finally, the last section concludes the paper.

2 Related works

Our research concentrates on multi-label text classification, and in particular on two families of methods: those that model the binary relevance between texts and individual labels, and those that leverage label embeddings.

2.1 Binary-relevance based methods

TextCNN [6], a seminal work, employed convolutional neural networks to extract features from text for classification, surpassing previous methods when using pre-trained word embeddings. DEP-CNN [15] proposes a dynamic embedding projection with a gated convolutional neural network, using gate units and shortcut connections to process and preserve word information while integrating context into the embedding matrix. Long short-term memory (LSTM) and gated recurrent units (GRU), two RNN variants, use gating mechanisms to selectively manage information over time and have yielded impressive results in text classification [7]. Bidirectional RNNs with self-attention have also performed strongly on these tasks [16]. GVN introduces a gating mechanism to refine the deep value network and mitigate confounding effects in the input data [17]. CRL [16] first factorizes a text-label log matrix to obtain a preliminary document representation and then refines the deep text representation. ML-Reason [18] enhances binary classification by iteratively inferring inter-label relations, mitigating sensitivity to label order. LACO [19] integrates text and label embeddings within a multi-task learning framework, introducing a label co-occurrence prediction task to support the main classification objective. The Neural Expectation Maximisation framework (nEM) [20] combines neural networks with probabilistic modeling for multi-label classification. LSFA [21] mitigates the long-tail problem by transferring second-order statistics of documents in the high-level latent space, after document representation learning, to enhance the features of tail labels.

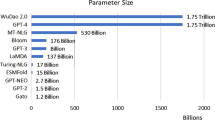

To tackle text classification with very large label sets, researchers have improved both the encoding of text and the partitioning of the label space. The XML-CNN model [9] employs a convolutional neural network with a bottleneck layer to keep the large number of classification parameters manageable. In contrast, hierarchical classification schemes, such as those analyzed in [22], combine pre-trained word vectors with machine learning methods. AttentionXML [10] applies an attention mechanism on top of a bidirectional LSTM to better associate text with labels. Models like Bidirectional Encoder Representations from Transformers (BERT) [8] and Generative Pre-trained Transformer (GPT) [23] offer contextualized text representations and work well with limited data, yet they struggle with the long-tailed label distribution and the computational demands of large-scale classification. LP-ICP [24] uses inductive conformal prediction to address large-scale label prediction.

These binary-relevance techniques exploit the relationship between a text and each label, typically using neural networks such as CNNs, RNNs, and Transformers to convert sparse text into dense vectors and then applying multiple per-label classifiers.

2.2 Embedding-based methods

Several approaches integrate labels into a latent semantic space for text classification. NATT [25] combines advanced text feature extraction with a label embedding that relies on label proximity for prediction. Similarly, [26] uses label-conditional frequencies to refine multi-label text feature selection. LEAM [27] casts all labels into a shared embedding space and learns their association with the text through an attention mechanism. GILE [28] provides a versatile label embedding framework for document classification. CORE [29] applies attention to jointly learn word and label embeddings, capturing both label relevance and context. LightXML [30] introduces a two-stage training method that uses BERT for text and label encoding. BGNN-XML [31] performs graph convolution for attributed graph clustering with low-pass graph filters to model label dependencies and label features in extreme multi-label classification. HTTN [32] transfers knowledge from frequent to infrequent labels, using a hierarchical attention network for text encoding. Although these methods probe the interplay between text words and labels during embedding, they neglect the relationships among labels, which limits prediction accuracy. To address this, LMSD [33] maps both texts and labels into the same space for metric learning and employs deep neural networks to discriminate labels through a distillation process.

Embedding-based text classification approaches emphasize the interaction between label embeddings and text features. This incurs the additional overhead of a label embedding space that must represent label semantics effectively while also accounting for text-label correlations.

TAE consists of two main parts: (1) a latent topic attention module, which takes a sequence of hidden vectors as input and uses a topic attention mechanism to relate latent topic vectors to word hidden vectors; and (2) a topic-fused channel attention module, which takes the topic-specific text features generated by the first module, reweights them, and combines them into a comprehensive topic-fused text representation.

3 Methodology

This section presents the implementation details of TAE. As depicted in Fig. 1, TAE consists of two main modules: the latent topic attention module and the topic-fused channel attention module.

3.1 Latent topic attention

Born in Seattle, Washington, Hendrix began playing guitar at the age of 15. In 1961, he enlisted in the US Army but was discharged the following year. Soon afterward, he moved to Clarksville then Nashville...... The world’s highest-paid performer, he headlined the Woodstock Festival in 1969 and the Isle of Wight Festival in 1970 before his accidental death in London from barbiturate-related asphyxia on September 18, 1970.

The example text above (Footnote 1) outlines a musician's life: his origins, moves, musical triumphs, and unexpected death. When building text representations, a reasonable deep learning model should capture such multiple topics while preserving sequential semantics, so that it reflects events such as the artist's birthplace, related bands, albums, honors, and untimely death.

Unlike traditional topic models, which rely on dedicated architectures to identify text topics, TAE aims to discover latent topics while preserving the semantic sequence extracted by the neural network. To this end, trainable latent topic vectors \(\textbf{T}=[\textbf{t}_1,\textbf{t}_2,...,\textbf{t}_n]\) are introduced into TAE to discover the latent topics underlying different words. Specifically, an attention mechanism assigns weights to the word hidden vectors with respect to each topic, and a topic-aware text representation is formed as their weighted sum. As shown in Fig. 1, the word hidden vectors \(\textbf{H}=[\textbf{h}_1,\textbf{h}_2,...,\textbf{h}_l]\) are generated by the base neural network and used to compute the weight \(\alpha_{ij}\) of word j with respect to latent topic i, where \(\textbf{W}_1\), \(\textbf{W}_2\) and \(\textbf{W}_3\) are the attention weights.

The corresponding topic-aware text representation for topic i is the attention-weighted sum of the word hidden vectors:

\[\textbf{c}_i = \sum_{j=1}^{l} \alpha_{ij}\,\textbf{h}_j\]

Note that a single shared attention distribution is inadequate: when attending to different topics, the distribution of attention weights over the words should differ and depend on the corresponding topic. Therefore, each topic corresponds to its own set of attention weights, so n sets of attention weights are learned, one per topic. Applying these n sets of weights to \(\textbf{H}\) yields a set of topic-specific text representations \(\textbf{C} = [\textbf{c}_1, \textbf{c}_2,...,\textbf{c}_n]\).
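The extracted text does not preserve the scoring function that turns \(\textbf{W}_1\), \(\textbf{W}_2\) and \(\textbf{W}_3\) into \(\alpha_{ij}\). The Python sketch below fills that gap with an assumed additive (Bahdanau-style) score, \(e_{ij} = \textbf{w}_3^\top \tanh(\textbf{W}_1\textbf{t}_i + \textbf{W}_2\textbf{h}_j)\), normalized with a softmax over the l words; only the weighted sum \(\textbf{c}_i = \sum_j \alpha_{ij}\textbf{h}_j\) follows directly from the text. Function names and the toy sizes are hypothetical. Because every score is conditioned on \(\textbf{t}_i\), each topic obtains its own attention distribution even though the projection matrices are shared in this sketch.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix given as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def latent_topic_attention(T, H, W1, W2, w3):
    """Return (alpha, C) for n topic vectors T and l word hidden vectors H.

    ASSUMED score (not taken from the paper): e_ij = w3 . tanh(W1 t_i + W2 h_j).
    W1, W2: k1 x k matrices; w3: length-k1 vector; vectors in T and H have length k.
    """
    k = len(H[0])
    projected_words = [matvec(W2, h) for h in H]  # W2 h_j, shared by every topic
    alpha, C = [], []
    for t in T:
        projected_topic = matvec(W1, t)
        scores = [
            sum(w * math.tanh(a + b) for w, a, b in zip(w3, projected_topic, pw))
            for pw in projected_words
        ]
        a_i = softmax(scores)  # attention of topic i over the l words
        alpha.append(a_i)
        # c_i = sum_j alpha_ij * h_j
        C.append([sum(a_ij * h[d] for a_ij, h in zip(a_i, H)) for d in range(k)])
    return alpha, C


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Toy sizes: n topics, seq_len words, hidden size k, k1 attention hidden units.
rng = random.Random(0)
n, seq_len, k, k1 = 3, 5, 4, 6
T = random_matrix(n, k, rng)
H = random_matrix(seq_len, k, rng)
W1, W2 = random_matrix(k1, k, rng), random_matrix(k1, k, rng)
w3 = random_matrix(1, k1, rng)[0]
alpha, C = latent_topic_attention(T, H, W1, W2, w3)
```

Each row of `alpha` is a probability distribution over the words, and `C` holds one k-dimensional topic-aware representation per topic, ready for the channel attention described next.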

3.2 Topic fused channel attention

Intuitively, different topics contribute differently to the overall semantics of a text. Inspired by the success of the squeeze-and-excitation network in computer vision [34], TAE explicitly models the importance of the different topic representations. Specifically, TAE builds a topic-fused channel attention that dynamically allocates weights among the different topic representations, as shown in Fig. 1.

First, "summary statistics" are computed for each topic feature. Specifically, a pooling operation such as max or mean pooling compresses the topic features \(\textbf{C} = [\textbf{c}_1, \textbf{c}_2,...,\textbf{c}_n]\) into a vector \(\textbf{z}=[z_1, z_2,...,z_n]\), where the scalar \(z_i\) summarizes the global information of the i-th topic representation. With global mean pooling, \(z_i\) is calculated as:

\[z_i = \frac{1}{m}\sum_{d=1}^{m} c_{i,d}\]

where m is the dimension of the topic features and \(c_{i,d}\) is the d-th element of \(\textbf{c}_i\).

Then, the weight of each topic feature is obtained from the statistics vector \(\textbf{z}\) using two fully connected layers. The first layer, with parameters \(\textbf{W}_4\), reduces the dimensionality by a reduction ratio r (a hyperparameter) and uses ReLU as its nonlinearity. The second layer, with parameters \(\textbf{W}_5\), restores the original dimensionality and uses a sigmoid activation. Formally, the weights of the topic features \(\textbf{s}=[s_1, s_2,...,s_n]\) are computed as:

\[\textbf{s} = \sigma\left(\delta(\textbf{z}\textbf{W}_4)\,\textbf{W}_5\right)\]

where \(\delta\) is the ReLU function, \(\sigma\) is the sigmoid function, \(\textbf{W}_4 \in \mathbb {R}^{n \times n/r}\), and \(\textbf{W}_5 \in \mathbb {R}^{n/r \times n}\).

Next, the obtained weights are used to rescale the topic features: each original topic feature \(\textbf{c}_i\) is multiplied by its weight \(s_i\), producing a new set of features \(\textbf{V} = [\textbf{v}_1, \textbf{v}_2,..., \textbf{v}_n]\):

\[\textbf{v}_i = s_i\,\textbf{c}_i, \quad i = 1, \dots, n\]

Finally, the reweighted features are combined into the final text representation \(\textbf{x}\), which incorporates the different latent topics; here \(\textbf{v}_i\) is the reweighted feature of the i-th topic.
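A minimal Python sketch of the topic-fused channel attention as described: mean-pool each \(\textbf{c}_i\) into \(z_i\), apply a ReLU reduction layer and a sigmoid expansion layer to obtain \(\textbf{s}\), rescale \(\textbf{v}_i = s_i\textbf{c}_i\), and combine. The text does not specify how the \(\textbf{v}_i\) are combined into \(\textbf{x}\), so the sketch assumes concatenation (summation would be an equally plausible reading). Biases are omitted, the weight matrices are stored as lists of rows and applied as `W @ z` (a column-vector convention), and all names are hypothetical.

```python
import math


def matvec(W, x):
    """Multiply a matrix given as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def topic_channel_attention(C, W4, W5):
    """Squeeze-and-excitation over n topic features, each of dimension m (no biases).

    W4: (n // r) rows of length n (reduction); W5: n rows of length n // r (expansion).
    Returns (z, s, x). Combining the reweighted features by concatenation is an ASSUMPTION.
    """
    z = [sum(c) / len(c) for c in C]                                # squeeze: global mean pooling
    hidden = [max(0.0, u) for u in matvec(W4, z)]                   # reduction layer + ReLU
    s = [1.0 / (1.0 + math.exp(-u)) for u in matvec(W5, hidden)]    # expansion + sigmoid
    V = [[s_i * value for value in c_i] for s_i, c_i in zip(s, C)]  # v_i = s_i * c_i
    x = [value for v_i in V for value in v_i]                       # combine (assumed: concatenation)
    return z, s, x


# n = 4 topics of dimension m = 2, reduction ratio r = 2.
C = [[1.0, 3.0], [0.0, 0.0], [2.0, 2.0], [4.0, 0.0]]
W4 = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
W5 = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
z, s, x = topic_channel_attention(C, W4, W5)
```

With these toy weights, topics 1 and 3 receive weights near 0.88, topic 2 a neutral 0.5, and topic 4 is damped to about 0.12, illustrating how the sigmoid gate emphasizes some topic representations and suppresses others.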

According to [35], trained models tend to overfit to objects that co-occur in common contexts and therefore struggle to generalize to scenes where the same objects appear in unseen contexts. To alleviate the negative impact of such dataset bias, we combine latent topic attention with topic-fused channel attention so that the encoder can associate co-occurring objects with topics other than the objects themselves.

3.3 Complexity analysis

TAE is a modular component that can be combined with other neural networks; to quantify its complexity, we evaluate the module on its own. With n topics and a text sequence of length l, the latent topic attention and channel attention are executed n times on each training batch, and the time complexity of the module is \(O(nl^2)\). For the space complexity, let k be the dimension of the input hidden vectors, \(k_1\) the number of hidden units in the latent topic attention, n the number of latent topics, and l the input sequence length; the total number of parameters is \(nk+(n+k+1)k_1+2nk\).
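The stated parameter count is easy to evaluate for concrete settings. The helper below simply encodes the formula \(nk+(n+k+1)k_1+2nk\) from the text; the attention width \(k_1 = 128\) used in the example is a hypothetical choice, since its value is not given in this section, while n = 30 and k = 300 or 512 match the settings reported in Section 5.

```python
def tae_param_count(n: int, k: int, k1: int) -> int:
    """Parameter count of a TAE module as stated in the text: nk + (n + k + 1)k1 + 2nk.

    n: number of latent topics, k: input hidden-vector dimension,
    k1: number of hidden units in the latent topic attention.
    The sequence length l does not appear, so the count is independent of text length.
    """
    return n * k + (n + k + 1) * k1 + 2 * n * k


# n and k follow Section 5; k1 = 128 is a hypothetical choice.
print(tae_param_count(n=30, k=300, k1=128))  # 69368  (300-d input, e.g. Vanilla-TAE)
print(tae_param_count(n=30, k=512, k1=128))  # 115584 (512-d input, e.g. TAE-BiLSTM)
```

Both totals are small relative to a classification layer over tens of thousands of labels, which is consistent with TAE being described as a lightweight add-on.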

4 TAE Instantiations

TAE operates only on the hidden sequences that the base network produces from the original text. Hence, neural networks can be integrated as backbone models: TAE captures latent topic information while the backbone preserves the semantic integrity of the text sequence. To show this, we present TAE-enhanced models that combine the TAE module with various established deep neural networks, detailed below.

TAE-XMLCNN

In the TAE-XMLCNN model, TAE augments a convolutional neural network, as shown in Fig. 2a. Specifically, the convolutional network of XML-CNN [9] serves as the backbone: one-dimensional convolutional filters are applied to the pre-trained word embeddings to generate the feature vectors that TAE takes as input. As Fig. 2a shows, filters with a window size of 2 capture distinctive semantic feature sequences, which are then processed by TAE modules. The topic features gathered by TAE are then fed into a fully connected layer for classification.
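To make the backbone-to-TAE interface concrete, here is a minimal pure-Python sketch of a one-dimensional convolution with window size 2 over word embeddings. Each output position becomes one hidden vector for TAE, with one entry per filter, so 128 filters would yield 128-dimensional hidden vectors. The ReLU activation and the absence of biases are simplifying assumptions, and the function name is hypothetical.

```python
def conv1d_valid(embeddings, filters, window=2):
    """'Valid' 1-D convolution over a sequence of word vectors (no bias terms).

    embeddings: list of l word vectors, each of dimension d.
    filters: list of kernels; each kernel is a list of `window` vectors of dimension d.
    Returns l - window + 1 hidden vectors, each with one (ReLU-activated) entry per filter.
    """
    hidden = []
    for start in range(len(embeddings) - window + 1):
        span = embeddings[start:start + window]
        features = []
        for kernel in filters:
            # Dot product between the kernel and the current window of word vectors.
            activation = sum(
                w * e
                for kernel_row, word in zip(kernel, span)
                for w, e in zip(kernel_row, word)
            )
            features.append(max(0.0, activation))  # ReLU (assumed activation)
        hidden.append(features)
    return hidden


embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # l = 3 words, d = 2
filters = [
    [[1.0, 0.0], [0.0, 1.0]],   # fires on "dim 0 followed by dim 1"
    [[-1.0, 0.0], [0.0, 0.0]],  # negative response, zeroed by ReLU
]
H = conv1d_valid(embeddings, filters)
print(H)  # [[2.0, 0.0], [1.0, 0.0]]
```

The resulting list `H` plays the role of the word hidden vectors \(\textbf{H}\) in Section 3.1; with several window sizes, each size would produce its own sequence and its own TAE module.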

TAE-BiLSTM

For the TAE-BiLSTM model, an RNN with LSTM units serves as the backbone, as shown in Fig. 2b. The bidirectional LSTM extracts both forward and backward semantic features from the text, and the number of LSTM units determines the dimension of the resulting hidden vectors. These vectors are then fed into the TAE module, followed by a fully connected layer for classification.

TAE-BertXML

The TAE-BertXML model combines TAE with the Transformer architecture. Because TAE focuses on capturing topic information while modeling sequence semantics, no pre-trained model is used, to ensure a fair comparison. As shown in Fig. 2c, BertXML [36], a variant of BERT, serves as the backbone. It addresses the excessive parameter count by reducing the dimensionality of the hidden layers and adds extra CLS tokens to handle large-scale classification. The token sequence produced by BertXML is fed into TAE, and prediction is made by a fully connected layer.
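All three instantiations share one interface: a backbone maps the input word vectors to a sequence of hidden vectors, TAE turns them into a topic-aware representation, and a fully connected layer scores every label. The Python sketch below shows that composition with a deliberately simplified TAE (dot-product topic scores, no channel attention, concatenated topic features) and an independent sigmoid per label, the usual multi-label output; these simplifications and all names are assumptions for illustration only. Passing the identity function as the backbone corresponds to Vanilla-TAE, which feeds word vectors straight into TAE.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def simple_tae(H, T):
    """Simplified TAE (ASSUMPTION): dot-product attention per topic, then concatenation."""
    features = []
    for t in T:
        alpha = softmax([sum(a * b for a, b in zip(t, h)) for h in H])
        c = [sum(a_j * h[d] for a_j, h in zip(alpha, H)) for d in range(len(H[0]))]
        features.extend(c)
    return features


def predict(word_vectors, backbone, T, W_out, b_out):
    """backbone -> TAE -> fully connected layer -> per-label sigmoid scores (assumed output)."""
    H = backbone(word_vectors)  # any network producing a sequence of hidden vectors
    x = simple_tae(H, T)
    logits = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(W_out, b_out)]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def identity(word_vectors):
    """Vanilla-TAE: no backbone, word vectors go straight into TAE."""
    return word_vectors


def neighbour_mean(word_vectors):
    """Toy stand-in for a CNN, RNN, or Transformer: average adjacent word vectors."""
    return [[(a + b) / 2 for a, b in zip(u, v)] for u, v in zip(word_vectors, word_vectors[1:])]


words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # l = 3 words, dimension 2
T = [[1.0, 0.0], [0.0, 1.0]]                   # n = 2 latent topic vectors
W_out = [[0.5, -0.5, 0.5, -0.5], [0.1, 0.2, 0.3, 0.4], [0.0, 0.0, 0.0, 0.0]]  # 3 labels x (n * 2)
b_out = [0.0, 0.0, 0.0]
print(predict(words, identity, T, W_out, b_out))
print(predict(words, neighbour_mean, T, W_out, b_out))
```

Swapping `identity` for `neighbour_mean` changes nothing else in the pipeline, which is the property the TAE-enhanced variants rely on.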

5 Experiments and analysis

In this section, TAE is evaluated through a series of experiments that address the following research questions:

1. RQ1: Does the Vanilla-TAE model perform competitively with state-of-the-art methods?
2. RQ2: Does the TAE-augmented model improve the original base model?
3. RQ3: How does the TAE model perform in terms of computation time and model size?
4. RQ4: Does the TAE model speed up the convergence during training compared to other methods?
5. RQ5: How do critical parameters affect the performance of the TAE model?

5.1 Datasets

The experiments were conducted on three benchmarks for large-scale MLTC [16, 37, 38]: EUR-Lex, a dataset of EU law texts; Wiki10-31K, an online Wikipedia dataset (Footnote 2); and CiteULike-t, which contains academic papers and their manual tags (Footnote 3). Table 1 presents a detailed overview of the three datasets.

5.2 Evaluation metrics

Four metrics were used for a comprehensive evaluation: precision at top-k (P@k), normalized discounted cumulative gain at top-k (NDCG@k), propensity-scored precision at top-k (PSP@k), and propensity-scored normalized discounted cumulative gain at top-k (PSnDCG@k). P@k and NDCG@k are the two most commonly used measures for evaluating MLTC models, whereas PSP@k and PSnDCG@k are designed to assess performance on tail labels. Specifically, P@k measures the proportion of true labels among the top-k predicted labels:

\[P@k = \frac{1}{k}\sum_{r=1}^{k} rel(i_r)\]

where \(i_r\) is the label ranked at position r by predicted score.

Here, the binary relevance score \(rel(i)\) of label i for a given document is 1 if the label is a true label and 0 otherwise. NDCG@k, commonly used to evaluate information retrieval systems, is defined as:

\[DCG@k = \sum_{r=1}^{k} \frac{rel(i_r)}{\log_2(r+1)}, \qquad NDCG@k = \frac{DCG@k}{IDCG@k}\]

with \(i_r\) the label at rank r.

DCG aggregates the relevance scores of the items in a ranked list, applying a discount to items at lower ranks; it is accumulated from the head to the tail of the predicted list. IDCG@k denotes the ideal DCG at position k, i.e., the DCG of a perfect ranking. In addition, the propensity-scored variants of P@k and NDCG@k, namely PSP@k and PSnDCG@k, were employed to examine performance on tail labels. The propensity score \(p_i\) of label i is defined as \(p_{i} \propto 1 /\left( 1+e^{-\log \left( k_{i}\right) }\right) \), where \(k_i\) is the number of positive training instances associated with label i. This formulation ensures that \(p_i \approx 1\) for head labels and \(p_i \ll 1\) for tail labels. The two propensity-scored metrics divide each gain by the propensity of the corresponding label:

\[PSP@k = \frac{1}{k}\sum_{r=1}^{k} \frac{rel(i_r)}{p_{i_r}}\]

with \(i_r\) the label at rank r; PSnDCG@k is obtained analogously from NDCG@k by replacing each gain \(rel(i_r)\) with \(rel(i_r)/p_{i_r}\) before normalization.
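The four metrics can be computed in a few lines of Python. This sketch follows the textual definitions above with the common \(\log_2(r+1)\) discount and the propensity \(p_i = 1/(1+e^{-\log k_i}) = k_i/(k_i+1)\), taking the proportionality constant as 1 for illustration. Normalizers for PSnDCG@k differ across toolkits; here it reuses the unweighted ideal DCG, which is an assumption. Function names and the toy scores are hypothetical.

```python
import math


def top_k(scores, k):
    """Indices of the k highest-scoring labels (ties broken by label index)."""
    return sorted(range(len(scores)), key=lambda i: (-scores[i], i))[:k]


def propensity(num_positive):
    """p_i = 1 / (1 + exp(-log k_i)) = k_i / (k_i + 1); proportionality constant taken as 1."""
    return 1.0 / (1.0 + math.exp(-math.log(num_positive)))


def _gain(label, p):
    """Unit gain for P@k / nDCG@k, inverse-propensity gain for the PS variants."""
    return 1.0 if p is None else 1.0 / p[label]


def precision_at_k(scores, relevant, k, p=None):
    """P@k, or PSP@k when a list of per-label propensities p is given."""
    return sum(_gain(i, p) for i in top_k(scores, k) if i in relevant) / k


def ndcg_at_k(scores, relevant, k, p=None):
    """nDCG@k, or PSnDCG@k (normalized by the unweighted ideal DCG, assumed) when p is given."""
    dcg = sum(
        _gain(i, p) / math.log2(rank + 2)  # rank 0 -> discount log2(2) = 1
        for rank, i in enumerate(top_k(scores, k))
        if i in relevant
    )
    ideal = sum(1.0 / math.log2(rank + 2) for rank in range(min(k, len(relevant))))
    return dcg / ideal


scores = [0.9, 0.1, 0.8, 0.3]                   # predicted scores for 4 labels
relevant = {0, 3}                               # true labels of the document
p = [propensity(c) for c in [100, 5, 50, 1]]    # label 3 is a tail label (one positive)
print(precision_at_k(scores, relevant, 2))      # 0.5
print(ndcg_at_k(scores, relevant, 2))
print(precision_at_k(scores, relevant, 3, p))   # the tail hit at rank 3 is up-weighted
```

The tail label (one positive instance, \(p = 0.5\)) contributes a gain of 2 to PSP@3 while the head label contributes about 1.01, which is how the propensity-scored metrics reward correct tail predictions.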

5.3 Evaluation methods

Baselines. To cover a variety of approaches, four representative methods are chosen, namely XML-CNN, BiLSTM-ATT, AttentionXML, and BertXML, whose backbones are, respectively, a convolutional neural network, a recurrent neural network, a label tree with an attention-aware neural network, and a Transformer.

- XML-CNN [9]: It employs CNNs to tackle extreme multi-label classification; we follow the original paper's parameter settings, with convolution window sizes of [2, 4, 8] and 128 convolution kernels. XML-CNN is one of the most-cited XML classifiers and the first tailored to CNNs, outperforming FastXML, FastText, Bow-CNN, and CNN-Kim [9].
- BiLSTM-ATT [16]: A text encoder that uses a bidirectional LSTM or GRU with an attention mechanism, configured with 256 LSTM units. BiLSTM-ATT is one of the most widely used sequential encoders and remained competitive until the Transformer was proposed [16].
- AttentionXML [10]: It encodes the text with a bidirectional LSTM and applies an attention mechanism that captures the relationships between textual features and labels. The AttentionXML-1 model is used in the experiments. AttentionXML is also one of the most-cited XML methods and the first label tree-based, attention-aware deep classifier for the XML task; it outperforms most representative XML methods, including AnnexML, DiSMEC, MLC2Seq, XML-CNN, PfastreXML, Parabel, XT and Bonsai [10].
- BertXML [36]: It adapts BERT by reducing the hidden layers' dimensionality to avoid excessive parameters and adds extra CLS tokens for large-scale label classification. The configuration is two BERT layers, each with two attention heads and an embedding dimension of 300, without pre-training. BertXML is one of the most representative Transformer-based classifiers for the XML task [36].

TAE and variations. We evaluate the vanilla TAE model and its variants that augment several prototypical neural networks. The number of topics is set to 30, and the latent topic vector dimension equals that of the hidden vectors generated by the base network.

- Vanilla-TAE: The basic TAE model. It takes pre-trained word vectors as input, and the latent topic vector dimension equals the word vector dimension, 300. Specifically, the pre-trained word vectors are fed directly into the TAE module without any base neural network, followed by a fully connected layer for prediction.
- TAE-XMLCNN: The CNN part is the same as in XML-CNN, and the latent topic vector dimension is 128. The sequence features extracted with each window size are fed into a separate TAE module, and the outputs of the three TAE modules are concatenated and passed to the fully connected layer for prediction.
- TAE-BiLSTM: The recurrent part is the same as in BiLSTM-ATT, and the latent topic vector dimension is set to 512. The sequence produced by the recurrent network is fed into the TAE module, followed by a fully connected layer for prediction.
- TAE-BertXML: The Transformer base model is the BertXML baseline with the same settings, and the latent topic vector dimension is 300.
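Collecting the reported settings in one place makes the rule "the latent topic vector dimension equals the backbone's hidden dimension" easy to check. The dataclass below is only an illustrative sketch with hypothetical names; reading the 256 BiLSTM units as per direction (hence 2 × 256 = 512) is an inference from the 512-dimensional topic vectors, not a statement in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TAEVariantConfig:
    """Settings reported in Sections 5.3-5.4 (the class itself is hypothetical)."""
    name: str
    hidden_dim: int          # dimension of the hidden vectors the backbone feeds into TAE
    topic_vector_dim: int    # latent topic vector dimension listed above
    num_topics: int = 30
    learning_rate: float = 1e-3


VARIANTS = [
    TAEVariantConfig("Vanilla-TAE", hidden_dim=300, topic_vector_dim=300),     # 300-d GloVe input
    TAEVariantConfig("TAE-XMLCNN", hidden_dim=128, topic_vector_dim=128),      # 128 kernels per window
    TAEVariantConfig("TAE-BiLSTM", hidden_dim=2 * 256, topic_vector_dim=512),  # 256 units x 2 directions (inferred)
    TAEVariantConfig("TAE-BertXML", hidden_dim=300, topic_vector_dim=300),     # embedding dimension 300
]

for cfg in VARIANTS:
    # The text states that topic vectors share the base network's hidden dimension.
    assert cfg.topic_vector_dim == cfg.hidden_dim, cfg.name
```

Freezing the dataclass keeps each configuration immutable once created, which suits a table of fixed experimental settings.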

5.4 Experimental settings

For each dataset, the vocabulary size was fixed at 40,000, and the document length was set to 500 for EUR-Lex and Wiki10-31K and to 256 for CiteULike-t. Shorter document word sequences were padded to this length. For fairness, all methods use the same 300-dimensional GloVe word vectors (Footnote 4) for input initialization, which remain frozen during training. The number of topics in the TAE module is set to 30, and the models are trained with the Adam optimizer at a learning rate of 0.001. All methods are implemented in PyTorch.
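The preprocessing described above (a 40,000-word vocabulary and fixed document lengths of 500 or 256 tokens) can be sketched as follows. The reserved padding and unknown-word ids, counting those two ids within the 40,000 budget, and truncating longer documents are assumptions made for illustration; the function names are hypothetical.

```python
from collections import Counter

PAD_ID, UNK_ID = 0, 1  # hypothetical reserved ids
MAX_LEN = {"EUR-Lex": 500, "Wiki10-31K": 500, "CiteULike-t": 256}


def build_vocab(tokenized_docs, max_size=40_000):
    """Map the most frequent tokens to ids, reserving ids for padding and unknown words."""
    counts = Counter(tok for doc in tokenized_docs for tok in doc)
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID}
    for tok, _ in counts.most_common(max_size - len(vocab)):
        vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab, max_len):
    """Convert tokens to ids, truncating to max_len (assumed) and right-padding with PAD_ID."""
    ids = [vocab.get(tok, UNK_ID) for tok in tokens[:max_len]]
    return ids + [PAD_ID] * (max_len - len(ids))


docs = [["law", "court", "law"], ["guitar"]]
vocab = build_vocab(docs, max_size=3)  # tiny budget: only "law" fits
print(encode(["law", "guitar", "band"], vocab, max_len=5))  # [2, 1, 1, 0, 0]
```

Fixed-length id sequences like these can then be looked up in the frozen 300-dimensional embedding table before reaching the backbone or, for Vanilla-TAE, TAE directly.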

5.5 Performance comparison (RQ1, RQ2)

This section compares the TAE models with the baseline models on three datasets, with results detailed in Tables 2, 3, 4, and 5. Four baselines based on CNNs, RNNs, and Transformers are used for comparison: XML-CNN, BiLSTM-ATT, AttentionXML, and BertXML, alongside TAE-augmented models sharing the same network architectures. XML-CNN, a classic CNN-based model, uses a CNN for feature extraction and a bottleneck layer to handle large-scale labels. TAE-XMLCNN, built on the same CNN structure, shows significant improvements, particularly in P@3 and NDCG@3: as shown in Tables 2 and 3, it outperforms XML-CNN by 1.8% and 2.0%, respectively, on EUR-Lex, by 1.8% and 1.6% on Wiki10-31K, and by 3.3% and 3.6% on CiteULike-t. The results for BiLSTM-ATT, a classical RNN model combining a bidirectional LSTM with attention, and AttentionXML, which employs label attention for extreme multi-label classification, show that RNN-based methods surpass CNN-based ones across datasets, with AttentionXML being the strongest baseline owing to its label attention mechanism. TAE-BiLSTM, built on the same bidirectional LSTM, surpasses AttentionXML by 0.3% and 0.5% on EUR-Lex, 0.8% and 0.8% on Wiki10-31K, and 3.0% and 2.7% on CiteULike-t. With the Transformer architecture, TAE-BertXML improves on its base model across all datasets, with gains of 2.6% and 2.0% on EUR-Lex, 2.2% and 2.0% on Wiki10-31K, and 2.4% and 2.8% on CiteULike-t. Remarkably, the Vanilla-TAE model achieves highly competitive performance without a deep backbone network, rivaling the strongest baseline, AttentionXML, and outperforming the other deep models.

Tail-label prediction performance is assessed in Tables 4 and 5, where TAE consistently outperforms the baseline models. For example, on EUR-Lex TAE-BiLSTM improves on AttentionXML by 1.7% in PSP@3 and 0.4% in PSnDCG@3, with similar gains on Wiki10-31K and CiteULike-t. Notably, Vanilla-TAE excels at predicting tail labels, underlining TAE's ability to predict tail labels accurately and diversely. A significant factor in this performance is the model's ability to learn latent topics correlated with tail labels, even from limited samples. Moreover, the gains of TAE over its base model grow as the number of labels and label sparsity increase.

Overall, the improvement that TAE brings to the backbone classifier can be attributed to the latent topic attention mechanism, which helps learn a variety of latent topics in the text, thereby broadening label coverage and improving the accuracy and diversity of label predictions. The original backbone network directly learns the mapping from words to label probabilities. Because this mapping is coarse-grained and the dataset contains the bias patterns discussed in Section 3.2, ambiguous words are easily mapped to incorrect labels. Adding a topic layer between words and labels creates a two-level (word-topic-label) mapping, which reduces the error probability of the one-level mapping.

5.6 Computation time and model size comparison (RQ3)

This section compares the time and space overhead of the models on the three datasets. Table 6 reports the per-batch training and testing time of each model and its number of parameters. A model's overhead varies across datasets because of differences in text length and number of labels. The number of labels matters most for models with a classification layer, since more labels mean a higher-dimensional classification layer and more parameters to process. Compared with XML-CNN, which uses the same CNN structure, TAE-XMLCNN operates directly on the sequences produced by the convolutions without a bottleneck layer, and therefore has fewer parameters and shorter training and testing times. Among the LSTM-based models, AttentionXML computes an attention weight for each label and obtains good results at a considerable computational cost. TAE-BiLSTM has more parameters, but its time overhead is lower than that of AttentionXML, the strongest baseline. BertXML, based on the Transformer, has a larger space overhead than the CNN and LSTM models on all three datasets. TAE-BertXML acts directly on the word hidden vectors produced by the Transformer, and both its parameter count and its training and testing overheads are much smaller than those of the original BertXML. Benefiting from the parallel computation of the attention mechanism, TAE delivers an effective boost with minimal computational overhead.

Fig. 3 Convergence comparison in terms of P@5 and NDCG@5 on three datasets. Convergence is evaluated from the model's performance on the validation set; a model is generally considered to have converged when its accuracy remains stable within a range of one percent. (a) P@5 on EUR-Lex. (b) P@5 on Wiki10-31K. (c) P@5 on CiteULike-t. (d) NDCG@5 on EUR-Lex. (e) NDCG@5 on Wiki10-31K. (f) NDCG@5 on CiteULike-t

5.7 Convergence comparison (RQ4)

This section studies the effect of TAE on model convergence. Figure 3 shows the training progress of the models on each dataset under a uniform learning rate. The x-axis gives the training steps. The y-axis gives the precision at 5 (P@5) and the normalized discounted cumulative gain at 5 (NDCG@5) on the validation set. Dashed lines denote the baseline models and solid lines the TAE-augmented models. Models with the same architecture, such as TAE-BiLSTM and BiLSTM-ATT, share a color to ease comparison.

TAE notably accelerates the convergence of all backbone models and raises their P@5 scores on the validation set.

- On EUR-Lex (Fig. 3a and d), the standard BiLSTM-ATT model converges more slowly than the others owing to the inherent characteristics of LSTMs. TAE-BiLSTM converges markedly faster and surpasses AttentionXML.
- On Wiki10-31K (Fig. 3b and e), all models improve steadily after an initial rapid increase, and the TAE-enhanced models converge notably faster.
- On CiteULike-t (Fig. 3c and f), BertXML fluctuates considerably in the middle of training, whereas TAE-BertXML converges faster and more stably.
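The convergence criterion used for this comparison (validation accuracy stable within a range of one percent) can be made operational in several ways. The sketch below is one hypothetical reading. It returns the earliest validation checkpoint after which all remaining scores stay within a band of width `tolerance`. Scores are assumed to lie in [0, 1], so 0.01 corresponds to one percentage point. The `min_window` parameter is our own addition; it prevents the function from declaring convergence based on only the last one or two checkpoints.

```python
from typing import Optional, Sequence

def convergence_step(scores: Sequence[float],
                     tolerance: float = 0.01,
                     min_window: int = 3) -> Optional[int]:
    """Return the earliest index i such that max(scores[i:]) - min(scores[i:])
    <= tolerance and the tail scores[i:] has at least min_window points.
    Returns None if no such index exists."""
    tail_max = float("-inf")
    tail_min = float("inf")
    first_stable = None
    # Scan from the end: the range of scores[i:] can only grow as i decreases,
    # so the first violation ends the search.
    for i in range(len(scores) - 1, -1, -1):
        tail_max = max(tail_max, scores[i])
        tail_min = min(tail_min, scores[i])
        if tail_max - tail_min > tolerance:
            break
        first_stable = i
    if first_stable is None or len(scores) - first_stable < min_window:
        return None
    return first_stable

# Hypothetical validation P@5 curve, one value per evaluation checkpoint.
curve = [0.10, 0.30, 0.45, 0.50, 0.505, 0.498, 0.502]
print(convergence_step(curve))  # → 3
```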

5.8 Impact of topic numbers (RQ5)

This section examines how the number of topics affects model performance on the three datasets. We use the best-performing model, TAE-BiLSTM, and vary the number of topics from 10 to 50. Figure 4 reports the results in terms of P@k and NDCG@k. Performance improves as the number of topics grows from 10 to 30, which we attribute to the richer text representation obtained from a broader range of extracted topics. In contrast, performance declines slightly when the number of topics reaches 50. This is likely because the additional model parameters increase the risk of overfitting.
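For reference, the sketch below computes P@k and NDCG@k for a single test sample, using the definitions common in the extreme multi-label classification literature; we assume the experiments follow these standard definitions. Reported scores are the average over test samples. The example scores and labels are hypothetical.

```python
import numpy as np

def _discounts(n: int) -> np.ndarray:
    # Position-based discounts 1 / log2(rank + 1) for ranks 1..n.
    return 1.0 / np.log2(np.arange(2, n + 2))

def precision_at_k(scores: np.ndarray, labels: np.ndarray, k: int) -> float:
    """P@k = (1/k) * number of relevant labels among the k highest-scored labels."""
    top_k = np.argsort(-scores)[:k]
    return float(labels[top_k].sum()) / k

def ndcg_at_k(scores: np.ndarray, labels: np.ndarray, k: int) -> float:
    """NDCG@k = DCG@k / ideal DCG@k, where the ideal ranking places
    min(k, number of relevant labels) relevant labels at the top."""
    top_k = np.argsort(-scores)[:k]
    dcg = float((labels[top_k] * _discounts(len(top_k))).sum())
    n_relevant = min(k, int(labels.sum()))
    idcg = float(_discounts(n_relevant).sum())
    return dcg / idcg if idcg > 0 else 0.0

# Hypothetical sample: 5 candidate labels, binary ground truth.
scores = np.array([0.9, 0.8, 0.1, 0.7, 0.2])
labels = np.array([1, 0, 0, 1, 1])
print(precision_at_k(scores, labels, 3), ndcg_at_k(scores, labels, 3))
```

Because NDCG@k is a ratio, the base of the logarithm cancels out; base 2 is used here by convention. `np.argsort` breaks ties in `scores` arbitrarily.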

5.9 Case study

This section presents a case study of TAE's ability to extract latent topic information from raw text. Because of text length constraints, samples are taken from the test sets of EUR-Lex and CiteULike-t. Using Vanilla-TAE and TAE-BiLSTM, we examine the distribution of the attention weights \(\alpha \) in the topic attention mechanism, as depicted in Figs. 5 and 6. Color intensity reflects the magnitude of \(\alpha \): darker colors indicate higher topic attention to a word.

Vanilla-TAE operates on pre-trained word embeddings (Figs. 5a and 6a). It identifies topic-relevant words through its different latent topic vectors, which enriches the semantics of the text features. This ability to capture a wider range of semantic nuances contributes to TAE's improved predictive performance. For TAE-BiLSTM (Figs. 5b and 6b), the LSTM substantially changes the encoding of the pre-trained word vectors. Even so, the topic vectors in TAE remain essential for extracting thematic information from the hidden word vectors.
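The attention weights \(\alpha \) discussed above can be illustrated with a minimal sketch of a latent topic attention layer. For illustration only, we assume the following: each of K learnable topic vectors scores every word hidden vector with a scaled dot product; a softmax over the words turns these scores into \(\alpha \); and each topic-specific text representation is the \(\alpha \)-weighted sum of the hidden vectors. The exact scoring function and projections in TAE may differ, and all sizes are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def latent_topic_attention(hidden: np.ndarray, topics: np.ndarray):
    """hidden: (n, d) word hidden vectors; topics: (K, d) latent topic vectors.
    Returns alpha (K, n), where each row is a distribution over the n words,
    and the topic-specific text representations (K, d)."""
    # Scaled dot-product scores between every topic and every word (assumed form).
    scores = topics @ hidden.T / np.sqrt(hidden.shape[1])
    alpha = softmax(scores, axis=1)   # normalize over words, per topic
    topic_reprs = alpha @ hidden      # alpha-weighted sum of word vectors
    return alpha, topic_reprs

rng = np.random.default_rng(0)
hidden = rng.normal(size=(6, 8))  # 6 words, hidden size 8 (hypothetical)
topics = rng.normal(size=(3, 8))  # K = 3 latent topics (hypothetical)
alpha, topic_reprs = latent_topic_attention(hidden, topics)
print(alpha.shape, topic_reprs.shape)  # → (3, 6) (3, 8)
```

Each row of `alpha` corresponds to one latent topic and is the kind of per-word weight vector visualized as color intensity in Figs. 5 and 6.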

5.10 Sensitivity analysis

Here we focus on sensitivity to the input samples. Perturbed samples are created by randomly dropping words from each sample text at a given ratio, and experiments are then run on these perturbed samples. To make the differences between samples more pronounced, we use CiteULike-t, whose texts are relatively short compared with the other two datasets. The drop ratios are set to 0.1, 0.3, 0.5, and 0.7. Table 7 shows the experimental results.

As the drop ratio increases, the text semantics shift substantially and the performance of both models decreases significantly. Nevertheless, the model with TAE keeps a large advantage over the baseline model. The latent topics introduced by TAE remain effective on semantically ambiguous samples, and TAE yields more consistent predictions than the original model.
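A minimal sketch of the perturbation follows. We assume that "dropping words according to a ratio" means removing round(ratio * n) randomly chosen tokens from an n-token text while keeping the remaining tokens in their original order. The paper may instead drop each word independently with probability equal to the ratio. Whitespace tokenization and the example sentence are also assumptions.

```python
import random
from typing import List, Sequence

def drop_words(tokens: Sequence[str], ratio: float, seed: int = 0) -> List[str]:
    """Remove round(ratio * len(tokens)) randomly chosen tokens,
    preserving the order of the tokens that remain."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    rng = random.Random(seed)
    n_drop = round(ratio * len(tokens))
    # Sample distinct positions to delete, then keep everything else in order.
    dropped = set(rng.sample(range(len(tokens)), n_drop))
    return [tok for i, tok in enumerate(tokens) if i not in dropped]

text = "deep learning for extreme multi label text classification with topics"
for ratio in (0.1, 0.3, 0.5, 0.7):
    print(ratio, " ".join(drop_words(text.split(), ratio, seed=42)))
```

Fixing `seed` makes each perturbed copy reproducible, so the baseline and the TAE model can be evaluated on identical perturbed samples.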

6 Further discussion

Uncertainty

Aleatoric uncertainty typically refers to data uncertainty, which arises from the inherent randomness or variability of the data itself [39]. Epistemic uncertainty, in contrast, refers to model (or cognitive) uncertainty, which stems from a lack of sufficient information, such as limited training data [40].

Chen et al. [40] note that aleatoric uncertainty depends not on the size of the training set but on the dimensionality of the word embeddings. Lower dimensionality results in higher aleatoric uncertainty, while increasing the embedding dimensionality can improve classification performance. Epistemic uncertainty, by contrast, depends on both the size of the training set and the dimensionality of the word embeddings: a smaller training set and lower dimensionality lead to greater epistemic uncertainty.

These relationships also apply to TAE-based classifiers. TAE is commonly used as a layer within an instantiated network, so the dimensionality of its input is determined by the backbone network. For the same backbone network, higher-dimensional intermediate representations yield better classification performance.
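One widely used estimator makes the distinction between the two kinds of uncertainty concrete. We swap it in for illustration; it is not necessarily the method of [39] or [40]. The procedure is as follows:

1. Run S stochastic forward passes, for example with Monte Carlo dropout.
2. Treat each label's sigmoid output as a Bernoulli probability.
3. Split the entropy of the averaged prediction (total uncertainty) into two parts: the average per-pass entropy (aleatoric) and the remainder, which is the mutual information between the prediction and the model parameters (epistemic).

The example probabilities are hypothetical.

```python
import numpy as np

def binary_entropy(p: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Entropy (in nats) of Bernoulli variables with success probability p."""
    p = np.clip(p, eps, 1.0 - eps)
    return -(p * np.log(p) + (1.0 - p) * np.log(1.0 - p))

def decompose_uncertainty(prob_samples: np.ndarray):
    """prob_samples: (S, L) per-label probabilities from S stochastic passes.
    Returns per-label arrays (total, aleatoric, epistemic)."""
    mean_p = prob_samples.mean(axis=0)
    total = binary_entropy(mean_p)                         # entropy of the mean
    aleatoric = binary_entropy(prob_samples).mean(axis=0)  # mean of the entropies
    epistemic = total - aleatoric                          # mutual information, >= 0
    return total, aleatoric, epistemic

# Hypothetical outputs of 4 passes for 2 labels: label 0 is predicted
# consistently; label 1 flips between passes (model disagreement).
samples = np.array([[0.95, 0.10],
                    [0.94, 0.90],
                    [0.96, 0.15],
                    [0.95, 0.85]])
total, aleatoric, epistemic = decompose_uncertainty(samples)
print(epistemic)
```

Disagreement between passes shows up as epistemic uncertainty (label 1). Consistently uncertain probabilities near 0.5 would instead show up as aleatoric uncertainty.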

Limitations of TAE

Although TAE is effective, the additional parameters it introduces increase the computational overhead. Moreover, TAE is designed for networks that model sequential text semantics, so not every backbone neural network can accommodate the TAE component. If the backbone network's encoding capability is already strong, the relative performance improvement from adding TAE may not be significant.

7 Conclusion

Latent topic information can improve text encoding features; however, current approaches require topic information to be modeled separately. We proposed TAE, a topic-aware encoder that improves model performance by integrating topic information into the encoding of sequential semantics. TAE combines readily with conventional neural models as a modular add-on component. Experiments confirm that TAE effectively enhances the capabilities of various neural networks and speeds up training convergence. Notably, prior work has explored the potential benefit of topic information for pre-trained language models [41]. A promising direction is to integrate TAE into the pre-training stage of language models to improve classification performance in few-shot settings.

Data Availability

The datasets used in this study are publicly available; links to them are given in the manuscript.

Code Availability

Our source code is available at https://github.com/qsw-code/TAE.

References

[1] Wu H, Duan Y, Yue K et al (2022) Mashup-oriented Web API recommendation via multi-model fusion and multi-task learning. IEEE Trans Serv Comput 15(6):3330–3343

[2] Liu W, Wang H, Shen X et al (2022) The emerging trends of multi-label learning. IEEE Trans Pattern Anal Mach Intell 44(11):7955–7974

[3] Blei DM, Ng AY, Jordan MI (2003) Latent Dirichlet allocation. J Mach Learn Res 3:993–1022

[4] Burkhardt S, Kramer S (2019) A survey of multi-label topic models. SIGKDD Explor 21(2):61–79

[5] Zhou Y, Liao L, Gao Y et al (2023) TopicBERT: A topic-enhanced neural language model fine-tuned for sentiment classification. IEEE Trans Neural Networks Learn Syst 34(1):380–393

[6] Kim Y (2014) Convolutional neural networks for sentence classification. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pp 1746–1751

[7] Zhao Y, Shen Y, Yao J (2019) Recurrent neural network for text classification with hierarchical multiscale dense connections. In: Kraus S (ed) Proceedings of the twenty-eighth international joint conference on artificial intelligence, IJCAI 2019, Macao, China, August 10-16, 2019. ijcai.org, pp 5450–5456

[8] Devlin J, Chang MW, Lee K et al (2019) BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 conference of the North American chapter of the association for computational linguistics: human language technologies, pp 4171–4186

[9] Liu J, Chang WC, Wu Y et al (2017) Deep learning for extreme multi-label text classification. In: Proceedings of the 40th International ACM SIGIR conference on research and development in information retrieval (SIGIR), ACM, pp 115–124

[10] You R, Zhang Z, Wang Z et al (2019) AttentionXML: Label tree-based attention-aware deep model for high-performance extreme multi-label text classification. In: Advances in neural information processing systems 32: annual conference on neural information processing systems 2019, pp 5812–5822

[11] Zhang P, Wang S, Li D et al (2020) Combine topic modeling with semantic embedding: embedding enhanced topic model. IEEE Trans Knowl Data Eng 32(12):2322–2335

[12] Pita M, Nunes M, Pappa GL (2022) Probabilistic topic modeling for short text based on word embedding networks. Appl Intell 52(15):17829–17844

[13] Chen Z, Ren J (2021) Multi-label text classification with latent word-wise label information. Appl Intell 51(2):966–979

[14] Qiu S, Sekhar N, Singhal P (2023) Topic and style-aware transformer for multimodal emotion recognition. In: Rogers A, Boyd-Graber JL, Okazaki N (eds) Findings of the Association for Computational Linguistics: ACL 2023, Toronto, Canada, July 9-14, 2023. Association for Computational Linguistics, pp 2074–2082

[15] Tan Z, Chen J, Kang Q et al (2022) Dynamic embedding projection-gated convolutional neural networks for text classification. IEEE Trans Neural Networks Learn Syst 33(3):973–982

[16] Wu H, Qin S, Nie R et al (2022) Effective collaborative representation learning for multilabel text categorization. IEEE Trans Neural Networks Learn Syst 33(10):5200–5214

[17] Hou Y, Wan S, Bao F et al (2021) Gated value network for multilabel classification. IEEE Trans Neural Networks Learn Syst 32(10):4748–4754

[18] Wang R, Ridley R, Su X et al (2021) A novel reasoning mechanism for multi-label text classification. Inf Process Manag 58(2):102441

[19] Zhang X, Zhang Q, Yan Z et al (2021) Enhancing label correlation feedback in multi-label text classification via multi-task learning. In: Findings of the association for computational linguistics: ACL/IJCNLP, pp 1190–1200

[20] Chen J, Zhang R, Xu J et al (2023) A neural expectation-maximization framework for noisy multi-label text classification. IEEE Trans Knowl Data Eng 35(11):10992–11003

[21] Xu P, Xiao L, Liu B et al (2023) Label-specific feature augmentation for long-tailed multi-label text classification. In: Thirty-Seventh AAAI conference on artificial intelligence, AAAI 2023. AAAI Press, pp 10602–10610

[22] Stein RA, Jaques PA, Valiati JF (2019) An analysis of hierarchical text classification using word embeddings. Inf Sci 471:216–232

[23] Radford A, Wu J, Child R et al (2019) Language models are unsupervised multitask learners. OpenAI blog 1(8):9

[24] Maltoudoglou L, Paisios A, Lenc L et al (2022) Well-calibrated confidence measures for multi-label text classification with a large number of labels. Pattern Recognit 122:108271

[25] Qin S, Wu H, Nie R et al (2020) Deep model with neighborhood-awareness for text tagging. Knowl Based Syst 196:105750

[26] Lee J, Yu I, Park J et al (2019) Memetic feature selection for multilabel text categorization using label frequency difference. Inf Sci 485:263–280

[27] Wang G, Li C, Wang W et al (2018) Joint embedding of words and labels for text classification. In: Proceedings of the 56th annual meeting of the association for computational linguistics (ACL), pp 2321–2331

[28] Pappas N, Henderson J (2019) GILE: A generalized input-label embedding for text classification. Trans Assoc Comput Linguist 7:139–155

[29] Zhang Q, Zhang X, Yan Z et al (2021) Correlation-guided representation for multi-label text classification. In: Proceedings of the Thirtieth international joint conference on artificial intelligence (IJCAI), pp 3363–3369

[30] Jiang T, Wang D, Sun L et al (2021) LightXML: Transformer with dynamic negative sampling for high-performance extreme multi-label text classification. In: Proceedings of Thirty-Fifth AAAI conference on artificial intelligence, pp 7987–7994

[31] Zong D, Sun S (2023) BGNN-XML: bilateral graph neural networks for extreme multi-label text classification. IEEE Trans Knowl Data Eng 35(7):6698–6709

[32] Xiao L, Zhang X, Jing L et al (2021) Does head label help for long-tailed multi-label text classification. In: Thirty-Fifth AAAI conference on artificial intelligence, pp 14103–14111

[33] Qin S, Wu H, Zhou L et al (2023) Learning metric space with distillation for large-scale multi-label text classification. Neural Comput Appl 35(15):11445–11458

[34] Hu J, Shen L, Albanie S et al (2020) Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell 42(8):2011–2023

[35] Hosseini SA, Shahri AA, Asheghi R (2022) Prediction of bedload transport rate using a block combined network structure. Hydrol Sci J 67(1):117–128

[36] Xun G, Jha K, Sun J et al (2020) Correlation networks for extreme multi-label text classification. In: Gupta R, Liu Y, Tang J et al (eds) KDD ’20: The 26th ACM SIGKDD conference on knowledge discovery and data mining, Virtual Event, CA, USA, August 23-27, 2020. ACM, pp 1074–1082

[37] Loza Mencía E, Fürnkranz J (2008) An evaluation of efficient multilabel classification algorithms for large-scale problems in the legal domain. In: Proceedings of the LREC 2008 workshop on semantic processing of legal texts, Marrakech, Morocco, pp 23–32

[38] Zubiaga A (2009) Enhancing navigation on Wikipedia with social tags. In: Wikimania 2009, Wikimedia Foundation

[39] Zhang D, Sensoy M, Makrehchi M et al (2023) Uncertainty quantification for text classification. In: Proceedings of the 46th International ACM SIGIR conference on research and development in information retrieval, SIGIR 2023, Taipei, Taiwan, July 23-27, 2023. ACM, pp 3426–3429. https://doi.org/10.1145/3539618.3594243

[40] Chen W, Zhang B, Lu M (2020) Uncertainty quantification for multilabel text classification. WIREs Data Mining Knowl Discov. https://doi.org/10.1002/WIDM.1384

[41] Peinelt N, Nguyen D, Liakata M (2020) tBERT: Topic models and BERT joining forces for semantic similarity detection. In: Jurafsky D, Chai J, Schluter N et al (eds) Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, July 5-10, 2020. Association for Computational Linguistics, pp 7047–7055

Acknowledgements

This work is supported by the National Natural Science Foundation of China (62062066, 61962061, 62362069), partially supported by the Key Program of Basic Research of Yunnan Province (202101AS070056, 202201AS070015), the Yunnan Provincial Foundation for Leaders of Disciplines in Science and Technology (202005AC160005), Yunnan High-Level Talent Training Support Plan: Young Top Talent Special Project (YNWR-QNBJ-2019-188), Program of Yunnan Key Laboratory of Intelligent Systems and Computing (202205AG070003).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qin, S., Wu, H., Zhou, L. et al. TAE: Topic-aware encoder for large-scale multi-label text classification. Appl Intell (2024). https://doi.org/10.1007/s10489-024-05485-z

DOI: https://doi.org/10.1007/s10489-024-05485-z