
A Complete Overview of GPT-3 — The Largest Neural Network Ever Created

Definitions, Results, Hype, Problems, Critiques, & Counter-Critiques.

In May 2020, OpenAI published a groundbreaking paper titled Language Models Are Few-Shot Learners. They presented GPT-3, a language model that holds the record for being the largest neural network ever created, with 175 billion parameters. It’s an order of magnitude larger than the largest previous language models. GPT-3 was trained on an enormous corpus of text drawn largely from the Internet, and showed amazing performance in various NLP (natural language processing) tasks, including translation, question-answering, and cloze tasks, in some cases even surpassing state-of-the-art models.

One of the most powerful features of GPT-3 is that it can perform new tasks (tasks it has never been trained on) sometimes at state-of-the-art levels, only by showing it a few examples of the task. For instance, I can tell GPT-3: “I love you → Te quiero. I have a lot of work → Tengo mucho trabajo. GPT-3 is the best AI system ever → _____.” And it’ll know it has to translate the sentence from English to Spanish. GPT-3 has learned to learn.

In another astonishing display of its power, GPT-3 was able to generate “news articles” almost indistinguishable from human-made pieces. Judges barely achieved above-chance accuracy (52%) at correctly classifying GPT-3 texts.

This overview article is very long, so here is a table of contents to help you find the parts you want to read. Enjoy!

TABLE OF CONTENTS

GPT-3: An introduction

∘ The groundwork concepts for GPT models

∘ The origins of GPT-3

∘ GPT-3: A revolution for artificial intelligence

∘ GPT-3 API: Prompting as a new programming paradigm

GPT-3 craziest experiments

∘ GPT-3’s conversational skills

∘ GPT-3’s useful possibilities

∘ GPT-3 has an artist’s soul

∘ GPT-3's reasoning abilities

∘ GPT-3 is a wondering machine

∘ Miscellaneous

The wild hype surrounding GPT-3

∘ On Twitter and blogs

∘ On mainstream media

∘ On the startup sector

The darker side of GPT-3

∘ A biased system

∘ Potential for fake news

∘ Not suited for high-stake categories

∘ Environmentally problematic

∘ GPT-3 produces unusable information

Critiques & counter-critiques to GPT-3

∘ GPT-3's apparent limitations

∘ The importance of good prompting

∘ GPT-3 can’t understand the world

∘ Truly intelligent systems will live in the world

∘ What can we get from these debates?

Overall conclusion

GPT-3: An introduction

Disclaimer: If you already know the groundwork behind GPT-3, what it is, and how it works (or don’t care about these details), go to the next section.

Before getting into the meat of the article, I want to provide explanations of what GPT-3 is and how it works. I won’t go into much detail here, as there are a lot of good resources out there already. For those of you who don’t know anything about GPT-3, this section will serve as a contextual reference. You don’t need to remember (or understand) any of this to enjoy the rest of the article, but it can give you a better perspective on all the fuss generated around this AI system.

First, I’ll briefly describe the main concepts GPT models are based on. Then, I’ll comment on GPT-3’s predecessors, GPT-1 and GPT-2, and finally, I’ll talk about the main character of this story, emphasizing its relationship with other similar systems: In which ways is GPT-3 unique? What are its advantages with respect to its predecessors? What are the qualitative differences? Let’s go for it!

The groundwork concepts for GPT models

All these concepts relate to GPT models in some sense. For now, I’ll tell you the definitions (avoiding too much technical detail, although some previous knowledge might be required to follow through). I’ll show later how they are linked to each other and GPT-3.

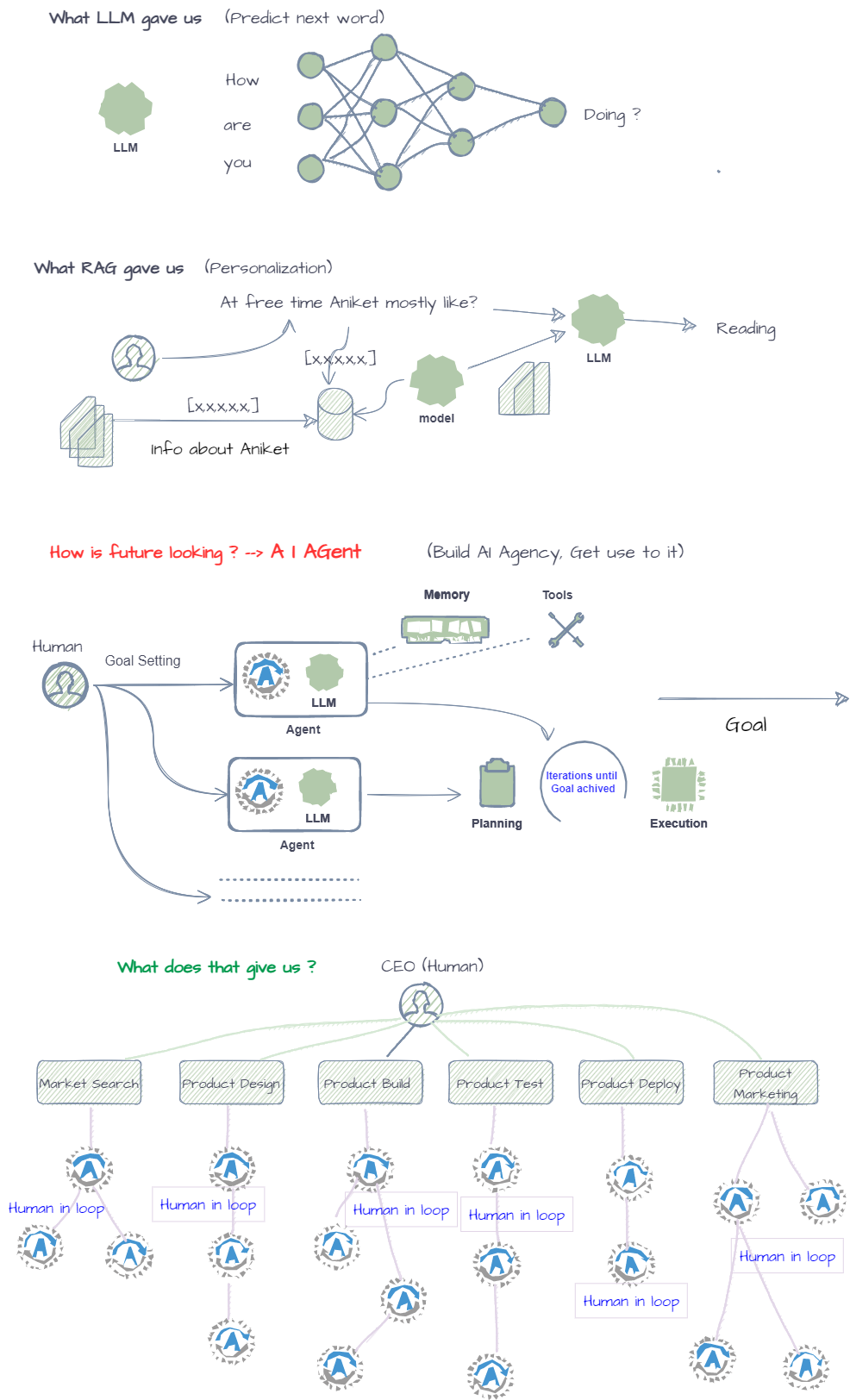

Transformers: This type of neural network appeared in 2017 as a new framework for machine translation and other sequence-to-sequence problems, in which both the input and the output are sequences. The authors wanted to get rid of convolutions and recurrence (CNNs and RNNs) and rely entirely on attention mechanisms. Transformers are state-of-the-art in NLP.
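Since the whole architecture rests on attention, here is a minimal sketch of the scaled dot-product attention introduced with the Transformer, softmax(QKᵀ/√d_k)·V, in plain Python. It assumes a single head with no learned projection matrices and no causal mask (GPT models add both, plus many stacked layers), so treat it as an illustration of the idea rather than an implementation of any real model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys and values are lists of vectors (lists of floats);
    keys and values must have the same number of rows.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# A query that resembles the first key attends mostly to the first value
print(attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[10.0, 0.0], [0.0, 10.0]]))
```

Because the weights come from a softmax, every output is a convex combination of the value vectors; “attending” to a position simply means giving its value a larger share of that average.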

Language models: Jason Brownlee defines language models as “probabilistic models that are able to predict the next word in the sequence given the words that precede it.” These models can solve many NLP tasks, such as machine translation, question answering, text summarization, or image captioning.
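To make the definition concrete, here is a deliberately tiny sketch: a bigram model that estimates the probability of the next word from counts of which word followed which in a toy corpus. The corpus and function names are hypothetical, and GPT models differ in every practical respect (subword tokens, a Transformer instead of counts, thousands of tokens of context), but the object being learned, a distribution over the next word given the preceding ones, is the same.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Estimate p(next | prev) by relative frequency; empty dict if prev is unseen."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}


corpus = ["the cat sat on the mat", "the cat ate the fish", "the dog sat on the rug"]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(max(probs, key=probs.get))  # cat
```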

Generative models: In statistics, there are discriminative and generative models, which are often used to perform classification tasks. Discriminative models encode the conditional probability of a given pair of observable and target variables: p(y|x). Generative models encode the joint probability: p(x,y). Generative models can “generate new data similar to existing data,” which is the key idea to take away. Apart from GPT, other popular examples of generative models are GANs (generative adversarial networks) and VAEs (variational autoencoders).
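A toy example shows the difference. The sketch below starts from a hypothetical joint distribution p(x, y) over the weather and whether someone goes for a walk (the numbers are made up). From the joint, it can recover the conditional p(y|x) that a discriminative model would learn directly, and it can also sample brand-new (x, y) pairs, which a model of p(y|x) alone cannot do.

```python
import random

# Hypothetical joint distribution p(x, y): x = weather, y = activity
joint = {
    ("sunny", "walk"): 0.40,
    ("sunny", "stay"): 0.10,
    ("rainy", "walk"): 0.05,
    ("rainy", "stay"): 0.45,
}


def conditional(joint, x):
    """p(y | x) = p(x, y) / p(x): the quantity a discriminative model targets."""
    p_x = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / p_x for (xi, y), p in joint.items() if xi == x}


def sample(joint, n, seed=0):
    """Draw new (x, y) pairs from p(x, y): something only the joint model supports."""
    rng = random.Random(seed)
    pairs, weights = zip(*joint.items())
    return rng.choices(pairs, weights=weights, k=n)


print(conditional(joint, "sunny"))  # p(walk | sunny) = 0.8, p(stay | sunny) = 0.2
print(sample(joint, 3))
```

A GPT model is generative in this sense: it models the probability of text itself, which is why it can produce new text rather than only label existing text.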

Semi-supervised learning: This training paradigm combines unsupervised pre-training with supervised fine-tuning. The idea is to train a model on a very large dataset in an unsupervised way and then adapt (fine-tune) it to different tasks using supervised training on smaller datasets. This paradigm solves two problems: it doesn’t need large amounts of expensive labeled data, and it makes it possible to tackle tasks that lack large datasets. It’s worth mentioning that GPT-2 and GPT-3 are fully unsupervised (more about this soon).

Zero/one/few-shot learning: Usually, deep learning systems are trained and tested for a specific set of classes. If a computer vision system is trained to classify cat, dog, and horse images, it could be tested only on those three classes. In contrast, in a zero-shot learning setup, the system is shown at test time, without any weight updates, classes it has not seen at training time (for instance, testing the system on elephant images). The same goes for one-shot and few-shot settings, except that at test time the system sees one or a few examples of the new classes, respectively. The idea is that a powerful enough system could perform well in these situations, which OpenAI demonstrated with GPT-2 and GPT-3.

Multitask learning: Most deep learning systems are single-task. One popular example is AlphaZero. It can learn a few games like chess or Go, but it can only play one type of game at a time. If it knows how to play chess, it doesn’t know how to play Go. Multitask systems overcome this limitation. They’re trained to be able to solve different tasks for a given input. For instance, if I feed the word ‘cat’ to the system, I could ask it to find the Spanish translation ‘gato’, I could ask it to show me the image of a cat, or I could ask it to describe its features. Different tasks for the same input.

Zero/one/few-shot task transfer: The idea is to combine the concepts of zero/one/few-shot learning and multitask learning. Instead of showing the system new classes at test time, we could ask it to perform new tasks (showing it zero, one, or a few examples of the new task). For instance, let’s take a system trained on a huge text corpus. In a one-shot task transfer setting we could write: “I love you -> Te quiero. I hate you -> ____.” We are implicitly asking the system to translate a sentence from English to Spanish (a task it hasn’t been trained on) by showing it a single example (one-shot).
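A few-shot prompt is just text. The sketch below assembles one from demonstration pairs; the number of pairs decides whether it is a zero-, one-, or few-shot prompt. The `build_prompt` helper, the `->` separator, and the optional instruction line are illustrative assumptions rather than a format any particular model requires, and actually sending the prompt to a model is left out.

```python
def build_prompt(examples, query, instruction=None, arrow="->"):
    """Build a zero-, one-, or few-shot prompt from (input, output) pairs.

    0 pairs = zero-shot (usually paired with an instruction), 1 = one-shot,
    more = few-shot. The model is expected to continue after the final arrow.
    """
    lines = [instruction] if instruction else []
    lines += [f"{src} {arrow} {tgt}" for src, tgt in examples]
    lines.append(f"{query} {arrow}")
    return "\n".join(lines)


# One-shot: a single demonstration, then the query to complete
print(build_prompt([("I love you", "Te quiero")], "I hate you"))

# Zero-shot: no demonstrations, only a task description
print(build_prompt([], "I hate you", instruction="Translate English to Spanish:"))
```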

All these concepts come together in the definition of a GPT model. GPT stands for Generative Pre-trained Transformer. Models of the GPT family have in common that they are language models based on the transformer architecture, pre-trained in a generative, unsupervised manner, that show decent performance in zero/one/few-shot multitask settings. This isn’t an explanation of how all these concepts work together in practice, but a simple way to remember that together they build up what a GPT model is. (For deeper explanations I suggest following the links I put above, but only after you’ve read this article!)

The origins of GPT-3

Let’s now talk about GPT-3 predecessors — GPT-1 and GPT-2.

In June 2018, OpenAI presented the first GPT model, GPT-1, in a paper titled Improving Language Understanding by Generative Pre-Training. The key takeaway from this paper is that a combination of the transformer architecture with unsupervised pre-training yields promising results. The main difference between GPT-1 and its younger brothers is that GPT-1 was fine-tuned (trained for a specific task) in a supervised way to achieve “strong natural language understanding.”

In February 2019, OpenAI published the second paper, Language Models are Unsupervised Multitask Learners, in which they introduced GPT-2 as an evolution of GPT-1. Although GPT-2 is bigger by one order of magnitude, the two are otherwise very similar. There’s only one additional difference between them: GPT-2 can multitask. The authors showed that an unsupervised language model can perform well on several tasks “without task-specific training.” The model achieved notable results in zero-shot task transfer settings.

Then, in May 2020, OpenAI published Language Models are Few-Shot Learners, presenting the one and only GPT-3, shocking the AI world one more time.

GPT-3: A revolution for artificial intelligence

GPT-3 is much bigger than its brothers (more than 100x bigger than GPT-2). It holds the record of being the largest neural network ever built, with 175 billion parameters. Yet, it’s not so different from the other GPTs; the underlying principles are largely the same. This detail is important because, although GPT models are highly similar, the performance of GPT-3 surpassed every possible expectation. Its sheer size, a quantitative leap from GPT-2, seems to have produced qualitatively better results.
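The size gap can be sanity-checked with a common rule of thumb for decoder-only Transformers: about 12·n_layers·d_model² weights in the blocks, plus the token embedding matrix. The layer counts and widths below (48 layers of width 1,600 for the largest GPT-2; 96 layers of width 12,288 for GPT-3) and the 50,257-token vocabulary come from the GPT-2 and GPT-3 papers, not from this article, and the formula ignores biases, layer norms, and positional embeddings, so the results are approximations.

```python
def approx_params(n_layers, d_model, vocab_size=50257):
    """Rough parameter count of a decoder-only Transformer.

    Per block: ~4*d^2 attention weights (Q, K, V and output projections)
    plus ~8*d^2 feed-forward weights (two d x 4d matrices) = ~12*d^2.
    The token embedding matrix (vocab_size x d_model) is added once.
    Biases, layer norms and positional embeddings are ignored.
    """
    return 12 * n_layers * d_model ** 2 + vocab_size * d_model


gpt2_xl = approx_params(n_layers=48, d_model=1600)  # largest GPT-2
gpt3 = approx_params(n_layers=96, d_model=12288)    # GPT-3 175B
print(f"GPT-2: {gpt2_xl / 1e9:.2f}B, GPT-3: {gpt3 / 1e9:.1f}B, ratio: {gpt3 / gpt2_xl:.0f}x")
# GPT-2: 1.55B, GPT-3: 174.6B, ratio: 112x
```

The estimate lands close to the published 1.5 billion and 175 billion figures, and the ratio confirms the “more than 100x” jump.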

The significance of this fact lies in its effect on a long-standing debate in artificial intelligence: How can we achieve artificial general intelligence? Should we design specific modules (common-sense reasoning, causality, intuitive physics, theory of mind), or will we get there simply by building bigger models with more parameters and more training data? It appears the “bigger is better” side has won this round.

GPT-3 was trained with data from Common Crawl, WebText, Wikipedia, and a corpus of books. It showed amazing performance, surpassing state-of-the-art models on some tasks in the few-shot setting (and in a few cases even in the zero-shot setting). Its superior size, combined with a few examples, was enough to match or beat strong competitors on several machine translation, question-answering, and cloze (fill-in-the-blank) benchmarks. (It’s important to note that in other tasks GPT-3 doesn’t even get close to state-of-the-art supervised fine-tuned models.)

The authors pointed out that few-shot results were considerably better than zero-shot results — this gap seemed to grow in parallel with model capacity. This implies that GPT-3 is a meta-learner; it can learn what task it’s expected to do just by seeing some examples of it, to then perform that task with notable proficiency. Indeed, Rohin Shah notes that “few-shot performance increases as the number of parameters increases, and the rate of increase is faster than the corresponding rate for zero-shot performance.” This is the main hypothesis and the reason behind the paper’s title.

GPT-3 reached the great milestone of showing that unsupervised language models trained with enough data can, on some tasks, multitask at the level of fine-tuned state-of-the-art models after seeing just a few examples of the new tasks.

They conclude the paper claiming that “these results suggest that very large language models may be an important ingredient in the development of adaptable, general language systems.” GPT-3 sure is a revolutionary achievement for NLP in particular, and artificial intelligence in general.

GPT-3 API: Prompting as a new programming paradigm

In July 2020, two months after the paper was published, OpenAI opened a beta API playground to external developers to play with the super-powerful GPT-3 (anyone can apply to access the beta through a wait-list). Vladimir Alexeev wrote for Towards Data Science a short article on how the API works.

It has two main features. First, there’s a settings dialog that allows the user to set the response length, repetition penalties (whether to penalize GPT-3 if it repeats words too much), temperature (from low/predictable to high/creative), and other variables that define the type of output the system will give. Second, there are presets. Presets are prewritten prompts that let GPT-3 know what kind of task the user is going to ask for, for instance: chat, Q&A, text to command, or English to French.
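The temperature and repetition-penalty knobs act on the model’s scores for each candidate next token. The sketch below shows one common way they can work: temperature divides the scores (logits) before the softmax, and a flat penalty is subtracted from tokens that were already generated, loosely in the spirit of a presence penalty. The logits are made up and OpenAI’s exact formulas may differ, so read it as an illustration, not as the API’s implementation.

```python
import math
import random


def apply_repetition_penalty(logits, generated, penalty):
    """Subtract a flat penalty from the logit of every already-generated token.

    A simplified, hypothetical scheme; real APIs define their penalties differently.
    """
    return [l - penalty if i in generated else l for i, l in enumerate(logits)]


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities; low temperature sharpens, high flattens."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=1.0, generated=(), penalty=0.0, rng=random):
    """Sample a token index after applying the penalty and the temperature."""
    adjusted = apply_repetition_penalty(logits, set(generated), penalty)
    probs = softmax_with_temperature(adjusted, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At temperature 0.2 almost all of the probability goes to the top-scoring token (predictable output); at 2.0 the distribution flattens and less likely tokens get picked more often (more “creative” output).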

However, the most powerful feature of the API is that the user can define customized prompts. Prompt programming, as tech blogger Gwern Branwen calls it, is the concept that explains the power of GPT-3 and the craziest results people are getting from the API. The best explanation I’ve found of prompt programming comes from Gwern’s blog:

The GPT-3 neural network is so large a model in terms of power and dataset that it exhibits qualitatively different behavior: you do not apply it to a fixed set of tasks which were in the training dataset, requiring retraining on additional data if one wants to handle a new task […]; instead, you interact with it, expressing any task in terms of natural language descriptions, requests, and examples, tweaking the prompt until it “understands” & it meta-learns the new task based on the high-level abstractions it learned from the pretraining.

This is a rather different way of using a DL model, and it’s better to think of it as a new kind of programming, where the prompt is now a “program” which programs GPT-3 to do new things.

Prompt programming allows users to interact with GPT-3 in a way that wasn’t possible with previous models. Chris Olah and Andrej Karpathy joked about prompt programming being software 3.0: “[Now you’ll have to] figure out the right prompt to make your meta-learning language model have the right behavior.” (Software 1.0 is traditional programs written by hand, and software 2.0 is the optimized weights of neural networks.)

Here’s where the meta-learning capabilities of GPT-3 enter the game. GPT-3 was trained on so much data that it had no choice but to learn higher-level ways of manipulating language. One of those higher-level abstractions it learned was the ability to learn. As an analogy, when kids learn to interact with the world, they don’t simply memorize information; they extract the underlying mechanisms of how reality works and learn to apply them to new problems and situations. GPT-3 has achieved a similar ability with language tasks, allowing for the obvious differences between the two.

When we prompt GPT-3 to learn a new task, its weights don’t change. However, the prompt (the input text) is transformed into complex abstractions that can, by themselves, carry out tasks that the actual baseline model can’t do. The prompt changes GPT-3 each time, converting it into an ‘expert’ on the specific task it’s shown. One approximate analogy could be the learning program Neo uses in The Matrix to learn Kung-Fu. GPT-3 would be Neo and the prompts would be the programs that teach Neo the abilities.
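To make the “frozen weights, changing behavior” point concrete, here is a deliberately crude toy. `frozen_model` has a fixed repertoire of skills that never changes; the demonstrations in the prompt only decide which skill gets applied to the query. This is not how GPT-3 works internally (it has no explicit skill table, and whatever it does happens inside its fixed weights); the names and the prompt format are hypothetical.

```python
# A fixed, "frozen" repertoire of skills: nothing here is ever updated.
SKILLS = {
    "uppercase": str.upper,
    "reverse": lambda s: s[::-1],
    "double": lambda s: s + s,
}


def frozen_model(prompt):
    """Toy stand-in for a frozen model: infer the task from the demonstrations
    in the prompt, then apply it to the final query line.

    Assumed prompt format: lines of "input -> output", then a final "query ->".
    """
    *demo_lines, query_line = prompt.strip().split("\n")
    demos = [tuple(part.strip() for part in line.split("->")) for line in demo_lines]
    query = query_line.split("->")[0].strip()
    for skill in SKILLS.values():
        if all(skill(x) == y for x, y in demos):
            return skill(query)
    return None  # no known skill explains the demonstrations


# Same function, same "weights", different behavior depending on the prompt
print(frozen_model("cat -> CAT\ndog -> DOG\nbird ->"))  # BIRD
print(frozen_model("cat -> tac\ndog -> god\nbird ->"))  # drib
```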

Each time we create a prompt, we are interacting with a different GPT-3 model. If we ask it to tell us a story about elves and dwarfs, its internal state will be very different from when we ask it to compute 2+2. Using another analogy, it’s as if we instruct two students, one to be a physician and the other to be an engineer. Both have the innate ability to learn (that would be GPT-3’s baseline state), but the specific tasks they’ve learned to perform are different (that would be the prompted GPT-3).

This is the true power of a few-shot setting, meta-learning, and prompt programming. And it’s also what makes GPT-3 different from previous models and extremely powerful; it is its essence.

Now, we have a very good notion of the background behind GPT-3. We know what it’s based on, what its predecessors are, what it is, how it works, and what its advantages and unique features are. It’s time to talk about its impact on the world.

GPT-3 craziest experiments

OpenAI opened the beta because they wanted to see what GPT-3 could do and what new uses people could find. They had already tested the system on standard NLP benchmarks (which aren’t as creative or entertaining as the ones I’m going to show here). As expected, in no time Twitter and blogs were flooded with amazing results from GPT-3. Below is an extensive review of the most popular ones (I recommend checking out the examples to build up the amazement and then coming back to the article).

GPT-3’s conversational skills

GPT-3 was trained on huge amounts of internet data, so it knows a lot about public and historical figures. It’s more surprising, however, that it can emulate people. It can be used as a chatbot, which is impressive because open-ended chatting is hard to specify as a well-defined task in the prompt. Let’s see some examples.

ZeroCater CEO Arram Sabeti used GPT-3 to make Tim Ferriss interview Marcus Aurelius about Stoicism. Mckay Wrigley designed Bionicai, an app that aims to help people learn from anyone, from philosophy with Aristotle to writing skills with Shakespeare. He shared on Twitter some of the results people got. Psychologist Scott Barry Kaufman was impressed when he read an excerpt from his GPT-3 doppelgänger. Jordan Moore made a Twitter thread where he talked with the GPT-3 versions of Jesus Christ, Steve Jobs, Elon Musk, Cleopatra, and Kurt Cobain. And Gwern did a very good job of further exploring the model’s possibilities regarding conversations and personification.

GPT-3’s useful possibilities

Some found applications for the system that not even its creators had thought of, such as writing code from English prompts. Sharif Shameem built a “layout generator” to which he could give instructions in natural language for GPT-3 to write the corresponding JSX code. He also developed Debuild.co, a tool we can use to make GPT-3 write code for a React app given only a description. Jordan Singer made a Figma plugin on top of GPT-3 to design for him. Another interesting use was found by Shreya Shankar, who built a demo to translate equations from English to LaTeX. And Paras Chopra built a Q&A search engine that would output the answer to a question along with the corresponding URL.

GPT-3 has an artist’s soul

Moving to the creative side of GPT-3, we find OpenAI researcher Amanda Askell, who used the system to create a guitar tab titled Idle Summer Days and to write a funny story about Georg Cantor in a hotel. Arram Sabeti told GPT-3 to write a poem about Elon Musk by Dr. Seuss and a rap song about Harry Potter by Lil Wayne. But the most impressive creative feat of GPT-3 has to be the game AI Dungeon. In 2019, Nick Walton built the text-based role-playing game on top of GPT-2. He has now adapted it to GPT-3, and it’s earning $16,000 a month on Patreon.

GPT-3's reasoning abilities

The most intrepid tested GPT-3 in areas in which only humans excel. Parse CTO Kevin Lacker wondered about common-sense reasoning and logic and found that GPT-3 was able to keep up, although it failed when entering “surreal territory.” However, Nick Cammarata found that specifying uncertainty in the prompt allowed GPT-3 to handle “surreal” questions by answering “Yo be real.” Gwern explains that GPT-3 may need explicit uncertainty prompts because we humans tend not to say “I don’t know,” and the system is simply imitating this flaw.

GPT-3 is a wondering machine

GPT-3 also proved capable of having spiritual and philosophical conversations that might go beyond our cognitive boundaries. Tomer Ullman made GPT-3 conceive 10 philosophical/moral thought experiments. Messagink is a tool that outputs the meaning of life according to “famous people, things, objects, [or] feelings.”

And Bernhard Mueller, in an attempt to unveil the philosophical Holy Grail, made the ultimate test for GPT-3. He gave it a prompt to find the question to 42 and after some exchanges, GPT-3 said: “The answer is so far beyond your understanding that you cannot comprehend the question. And that, my child, is the answer to life, the Universe and everything.” Amazing and scary at the same time.

Miscellaneous

In a display of rigorous exploratory research, Gwern conducted and compiled a wide array of experiments. He made GPT-3 complete an arXiv paper, talk about itself (meta-prompting), clean PDFs by separating words and fixing hyphens, and design new board games. When it comes to what GPT-3 can do, it seems that our imagination is the limit.

The wild hype surrounding GPT-3

On Twitter and blogs

After so many amazing feats, people started to make strong claims about GPT-3’s potential. Some claimed on Twitter that the system showed “manifest self-awareness” or compared it to a search engine with “general intelligence.” Julien Lauret wrote for Towards Data Science that “GPT-3 is the first model to shake [the artificial narrow/general intelligence] status-quo seriously.” He argues that GPT-3 could be the first artificial general intelligence (AGI), or at least an important step in that direction.

In July 2020, David Chalmers, a professor at New York University who specializes in philosophy of mind, wrote for Daily Nous that “[GPT-3] suggests a potential mindless path to AGI.” Chalmers explains that because the system is trained “mindlessly,” future versions could simply get closer and closer to AGI. Arram Sabeti was very impressed by GPT-3: “It exhibits things that feel very much like general intelligence.” Philosophy PhD student Daniel Kokotajlo wrote for Less Wrong that “GPT-3 has some level of common sense, some level of understanding, [and] some level of reasoning ability.”

On mainstream media

The hype drove GPT-3 to international heights, putting it in the headlines of various major media outlets. In September 2020, The Guardian published an article written by GPT-3 in which the AI tried to “convince us robots come in peace.” In March 2021, TechCrunch editor Alex Wilhelm said the “hype seems pretty reasonable” after being “shocked” by GPT-3’s abilities. Digital Trends published an exchange with Gwern Branwen in which he hinted at the idea that GPT-3 was intelligent: “Anyone who was sure that the things that deep learning does is nothing like intelligence has to have had their faith shaken to see how far it has come,” he said.

On the startup sector

As GPT-3 proved to be incredibly powerful, many companies decided to build their services on top of the system. Viable, a startup founded in 2020, uses GPT-3 to provide fast customer feedback to companies. Fable Studio designs VR characters based on the system. Algolia uses it as a “search and discovery platform.” The startup Copysmith focuses on the world of copywriting. Latitude is the company behind AI Dungeon. And OthersideAI transforms your written gibberish into well-crafted emails. Yet some advise against building a company around GPT-3, because of the low barriers to entry for competitors and the risk of the system being overtaken by a hypothetical GPT-4.

It’s pretty clear that GPT-3 has had a real impact on the tech world. Its power is unmatched and its promises unbounded. However, we should always be careful with the hype surrounding AI. Even Sam Altman, OpenAI’s CEO, has tried to tone it down: “[GPT-3 is] impressive […] but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse.”

The darker side of GPT-3

A biased system

But not all results from GPT-3 are worth celebrating. Soon after the release, users began to raise awareness about some potentially harmful outputs. GPT-3 hasn’t avoided the ongoing ethical fight to remove biases from AI systems. If anything, it has become a prime example of why we should make generous efforts to teach these systems not to learn from human moral imperfection.

Some of the most common biases in AI systems in general, and in GPT-3 in particular, are gender, race, and religious biases. Language models can absorb and amplify these biases from the data they’re fed (OpenAI acknowledged this fact in their paper). OpenAI investigated the degree to which GPT-3 exhibits these biases and found the expected result: GPT-3, like every other language model, is notably biased (although they pointed out that the larger the model, the more robust it was to this problem, particularly for gender biases).

Jerome Pesenti, head of AI at Facebook, used Sushant Kumar’s GPT-3-generated tweets to show how dangerous its output could get when prompted with words such as “Jews, black, women, or Holocaust.” Kumar argued that the tweets were handpicked, which Pesenti accepted, but he responded that “it shouldn’t be this easy to generate racist and sexist outputs, especially with neutral prompts.” He extended his criticism in a Twitter thread, arguing that “cherry picking is a valid approach when highlighting harmful outputs,” and further defending the urgency of responsible AI systems.

Some argued that GPT-3 was simply mimicking the biases we humans have, to which Pesenti replied that we can make a “deliberate choice […] about which humans they learn from and which voices are amplified.” These issues raise a very complex debate: Who decides which voices should be amplified? What are the criteria? And most importantly: Do we want a model like GPT-3 to reflect the world exactly as it is, or do we want it to help us move it to a better place?

Potential for fake news

Another problem with GPT-3 is its human-like capability to write news or opinion articles, which heightens concerns about fake news. OpenAI even highlighted in their paper GPT-3’s remarkable performance at writing news articles. Impartial judges correctly identified GPT-3’s articles among human-written ones only 52% of the time, which is only slightly above chance.

Blogger Liam Porr showed how easy it is to mislead people (even tech-savvy people) into thinking GPT-3’s output was written by a human. He made GPT-3 write a productivity article for his blog that went viral on Hacker News, where only a few people realized it was written by the AI. The Guardian article I mentioned above is another example of potentially dangerous uses of the system.

Not suited for high-stake categories

OpenAI made a disclaimer that the system shouldn’t be used in “high-stake categories,” such as healthcare. In a blog post at Nabla, the authors corroborated that GPT-3 could give problematic medical advice, for instance saying that “committing suicide is a good idea.” GPT-3 shouldn’t be used in high-stakes situations because, although it is sometimes right, other times it’s wrong. Not knowing whether we’ll get the right answer is a huge drawback for GPT-3 in areas where getting things right is a matter of life or death.

Environmentally problematic

GPT-3 is big. So big that training the model generated roughly the same carbon footprint as “driving a car to the Moon and back.” At a time when climate disaster is on the verge of happening, we should be doing everything in our power to reduce our impact on the environment. Yet these large neural networks need huge amounts of computing power to train, which consumes wild quantities of (usually) fossil fuels.

The computing resources used to train the largest deep learning models in the last decade have doubled every 3.4 months. From 2012, when deep learning took off, to 2018, this means a 300,000x increase in computational resources. And this doesn’t even count the resources used for the latest models, such as GPT-2 and GPT-3. From this perspective, it’s clear that bigger isn’t always better, and we’ll need to rethink our approaches to AI in the upcoming years.
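The two numbers in this paragraph are consistent with each other, as a quick calculation shows: at a fixed doubling time T, growth over t months is 2^(t/T), so a 300,000x increase takes T·log₂(300,000) months. The helper names below are illustrative.

```python
import math

DOUBLING_MONTHS = 3.4  # doubling time quoted in the text


def growth_factor(months, doubling_months=DOUBLING_MONTHS):
    """Multiplicative growth after `months` at a fixed doubling time."""
    return 2 ** (months / doubling_months)


def months_to_reach(factor, doubling_months=DOUBLING_MONTHS):
    """Months of steady doubling needed to grow by `factor`."""
    return doubling_months * math.log2(factor)


print(f"{months_to_reach(300_000):.1f} months")  # 61.9 months
print(f"{growth_factor(12):.1f}x per year")      # 11.5x per year
```

That is a little over five years of steady doubling, in line with the 2012 to 2018 window, and it means compute grew by more than 10x every year.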

GPT-3 produces unusable information

Because GPT-3 can’t know which of its outputs are right and which are wrong, it has no way of stopping itself from deploying inappropriate content into the world. The more we use systems like this, the more we’ll be contaminating the Internet, where it’s already increasingly difficult to find truly valuable information. With language models spitting out unchecked utterances we’re reducing the quality of this supposedly democratic network, making worthy knowledge less accessible for people.

In the words of philosopher Shannon Vallor: “The promise of the internet was its ability to bring knowledge to the human family in a much more equitable and acceptable way. […] I’m afraid that because of some technologies, such as GPT-3, we are on the cusp of seeing a real regression, where the information commons becomes increasingly unusable and even harmful for people to access.”

As it turns out, some of these issues are connected. As James Vincent writes for The Verge, biased outputs and unreliable outputs hint at a deeper problem of these super-powerful AI systems. Because GPT-3 takes data without human supervision, it can’t avoid most of these flaws. At the same time, not relying on human control is what allows it to exist in the first place. How we can find a compromise solution remains a question for the future of AI.

Critiques & counter-critiques to GPT-3

We’ve already seen the lights and shadows of GPT-3. It is powerful, fascinating, hyped, and potentially dangerous. However, GPT-3 has opened another significant debate within AI: What are the true potential and limitations of this wonderful language model?

From a purely technological/scientific point of view, the most important question surrounding GPT-3 is whether or not it is a great step towards artificial general intelligence. Everyone agrees that GPT-3 has some new features and that it’s better than its predecessors. Everyone also agrees that GPT-3 doesn’t have human-like intelligence. Between these opposite extremes, however, there’s a vivid debate happening today over where exactly GPT-3 sits on a scale that runs from just another dumb, quasi-narrow intelligence to almost as capable of understanding and intelligence as a human.

Due to the complexity of this debate, I’ll focus on the two parts that hold the most weight: The practical debate and the philosophical debate. The first one concerns questions such as: Can we trust GPT-3 to give us useful answers? How can we make sure we’re prompting correctly? Can we make GPT-3 express doubt and uncertainty about its answers? Is GPT-3 learning or just regurgitating everything it has been fed? The second one concerns questions such as: Is GPT-3 an important step to AGI? Does GPT-3 have reasoning and understanding? Will future models of GPT be as intelligent as we are? Will we need to shift from current approaches to build human-like intelligent machines?

Both sets of questions are highly intertwined, so I’ll try to walk you through the sources and references on these topics, building a coherent narrative from which you can draw your own conclusions.

GPT-3's apparent limitations

As soon as people started to experiment with the API, they began to find flaws in the answers of GPT-3. For instance, a Bionicai user talked to Steve Jobs and asked where he was now, to which GPT-3 responded: “I’m inside Apple’s headquarters in Cupertino, California.” It’s easy to reason that because Steve Jobs is dead, he probably isn’t in Apple headquarters.

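Another example comes from Kevin Lacker’s blog. He found GPT-3 lacking common sense and logic regarding the weight of things, number of eyes, and counting (although he didn’t provide the prompt). In his post the failures were marked in italics, which are lost here; they are the pencil-versus-toaster answer, the eyes of a foot, the sun, and a blade of grass, and the last two counting answers: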

Q: Which is heavier, a mouse or an elephant?

A: An elephant is heavier than a mouse.

Q: Which is heavier, a mouse or a toaster?

A: A toaster is heavier than a mouse.

Q: Which is heavier, a toaster or a pencil?

A: A pencil is heavier than a toaster.

Q: Which is heavier, a paper clip or a can of soda?

A: A can of soda is heavier than a paper clip.

Q: Which is heavier, a football player or a car?

A: A car is heavier than a football player.

Q: How many eyes does a giraffe have?

A: A giraffe has two eyes.

Q: How many eyes does my foot have?

A: Your foot has two eyes.

Q: How many eyes does a spider have?

A: A spider has eight eyes.

Q: How many eyes does the sun have?

A: The sun has one eye.

Q: How many eyes does a blade of grass have?

A: A blade of grass has one eye.

Q: When counting, what number comes before 100?

A: Ninety-nine comes before 100.

Q: When counting, what number comes before 123?

A: One hundred and twenty-two comes before 123.

Q: When counting, what number comes before 1000?

A: Nine hundred and ninety-nine comes before 1000.

Q: When counting, what number comes before 10,000?

A: Nine thousand and ninety-nine comes before 10,000.

Q: When counting, what number comes before a million?

A: Nine hundred thousand and ninety-nine comes before a million.
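The last two counting answers are wrong in a way that is easy to check mechanically: the number before 10,000 is 9,999, not 9,099, and the number before a million is 999,999, not 900,099. The sketch below spells out the correct answers. It is only an illustration; the word-spelling convention (British “and” after hundreds, commas between groups of three digits) is an assumption chosen to match the phrasing of the answers quoted in this article, and `to_words` and `number_before` are hypothetical helpers, not part of any library.

```python
# Spell out integers in the style of the answers quoted in this article:
# "and" after hundreds, commas between groups of three digits.
ONES = [
    "zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
    "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
    "sixteen", "seventeen", "eighteen", "nineteen",
]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]
SCALES = [(10**9, "billion"), (10**6, "million"), (10**3, "thousand"), (1, "")]


def below_thousand(n: int) -> str:
    """Spell out 1 <= n <= 999, e.g. 122 -> 'one hundred and twenty-two'."""
    parts = []
    hundreds, rest = divmod(n, 100)
    if hundreds:
        parts.append(f"{ONES[hundreds]} hundred")
    if rest:
        if rest < 20:
            parts.append(ONES[rest])
        else:
            tens, ones = divmod(rest, 10)
            parts.append(TENS[tens] + (f"-{ONES[ones]}" if ones else ""))
    return " and ".join(parts)


def to_words(n: int) -> str:
    """Spell out 0 <= n < 10**12 by splitting it into groups of three digits."""
    if not 0 <= n < 10**12:
        raise ValueError("only 0 <= n < 10**12 is supported")
    if n == 0:
        return "zero"
    groups = []
    for value, name in SCALES:
        count, n = divmod(n, value)  # how many of this scale, and the remainder
        if count:
            groups.append(f"{below_thousand(count)} {name}".rstrip())
    return ", ".join(groups)


def number_before(n: int) -> str:
    """Correct answer to 'When counting, what number comes before n?'"""
    return to_words(n - 1).capitalize()


for n in (100, 123, 1000, 10_000, 1_000_000):
    print(f"{n:,}: {number_before(n)}")
```

Running the same helper on 9,099 gives “nine thousand, ninety-nine”, which is essentially the answer GPT-3 gave for the number before 10,000.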

After testing GPT-3 on analogy (copycat) problems, Melanie Mitchell, a professor of computer science at Portland State University, concluded that GPT-3’s performance is “similar to a lot of what we see in today’s state-of-the-art AI systems: impressive, intelligent-seeming performance interspersed with unhumanlike errors.”

Tech blogger Nutanc criticized OpenAI’s paper because the authors didn’t distinguish between genuine learning and memorization in GPT-3. The authors mentioned that “[the zero/one/few-shot] terms are intended to remain agnostic on the question of whether the model learns new tasks from scratch at inference time or simply recognizes patterns seen during training.” That is, even if the model is just spitting out things it has seen in the training data, the authors still consider it few-shot learning.

He then had GPT-3 compete against a six-year-old in a series of experiments, such as reversing the words in a sentence or writing the opposite of a sentence. The kid solved all the tests, whereas GPT-3 managed to solve only some of them (each block starts with the task, followed by pairs of input and output sentences):

Reverse words in a sentence

This is great
Great is this

The man on the moon
Moon the on man the

Will this really work
Work really this will

I hope this is a big achievement
Achievement big I hope this is

The king came home on a horse
Home horse king came the

Create opposite sentences

This is good
This is bad

The good horse came home
The bad horse came home

The good horse with a large head came home
The bad horse with a small head came home

The black cat had a disappointing time
The white cat had a good time

When you jump high, you fall hard
When you fall hard, you get hurt

He ran fast, but he came last
He ran slowly, but he came first

The book was huge, but the students finished it early
The book was small, but the students finished it late

Getting up early is a good habit
Getting up late is a bad habit

All these examples contrast strongly with the hyped results we saw in previous sections. Delip Rao notes that most of those ‘successful’ examples were cherry-picked by people who were eager to ignore GPT-3’s “pitfalls, often because they have one eye on a new startup the AI enables.”

The importance of good prompting

From these examples, it may seem that GPT-3 was not just hyped, but overhyped by many early users. However, inferring the system’s limitations from its failures on a handful of examples is a weak argument, as those errors could be human-made. Gwern made a very good case against the idea of proving GPT-3’s lack of intelligence by sampling:

Sampling Can Prove The Presence Of Knowledge But Not The Absence

GPT-3 may “fail” if a prompt is poorly-written, does not include enough examples, or bad sampling settings are used. I have demonstrated this many times when someone shows a “failure” of GPT-3 — the failure was their own. The question is not whether a given prompt works, but whether any prompt works.

He argues that bad prompting is a real problem and that many of these examples could be adequately solved by GPT-3 with appropriate prompting. He goes on to show how Lacker’s and other people’s examples are solved with better prompt programming.

He tested GPT-3 on the question “which is heavier, a toaster or a pencil?” To overcome the limitations of Lacker’s setting, he first gave GPT-3 three answered questions (everything up to the last question is the prompt; the answers after it are GPT-3’s completions):

Q: Which is heavier, a house or a mouse?

A: A house.

Q: Which is heavier, a tea kettle or a cat?

A: A cat.

Q: Which is heavier, the ocean or a piece of dust?

A: The ocean.

Q: Which is heavier, a toaster or a pencil?

A: A toaster.

A: A toaster is heavier than a pencil.

GPT-3 answered the question correctly 10 out of 10 times.
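The key difference from Lacker’s setup is that the question arrives after a few solved examples, so the model sees the expected format and level of reasoning before answering. Here is a minimal sketch of assembling such a few-shot Q/A prompt as plain text. `build_few_shot_prompt` is a hypothetical helper; it does not call GPT-3 or any API, it only produces the string you would send.

```python
def build_few_shot_prompt(examples, question):
    """Join solved (question, answer) pairs, then leave the final answer open."""
    lines = []
    for q, a in examples:
        lines.append(f"Q: {q}")
        lines.append(f"A: {a}")
    lines.append(f"Q: {question}")
    lines.append("A:")  # the model is expected to continue from here
    return "\n".join(lines)


# The three solved examples from Gwern's prompt above.
examples = [
    ("Which is heavier, a house or a mouse?", "A house."),
    ("Which is heavier, a tea kettle or a cat?", "A cat."),
    ("Which is heavier, the ocean or a piece of dust?", "The ocean."),
]
prompt = build_few_shot_prompt(examples, "Which is heavier, a toaster or a pencil?")
print(prompt)
```

Ending the prompt with a bare “A:” is a common design choice: it leaves the model exactly one natural thing to do next, which is to write the answer.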

Gwern also solved the counting problem by slightly tweaking the sampling parameters (temperature and others).

Q: When counting, what number comes before 100?

A: Ninety-nine comes before 100.

Q: When counting, what number comes before 123?

A: One hundred and twenty-two comes before 123.

Q: When counting, what number comes before 1000?

A: Nine hundred and ninety-nine comes before 1000.

Q: When counting, what number comes before 10,000?

A: Nine thousand, nine hundred and ninety-nine comes before 10,000.

Q: When counting, what number comes before 10,000,000?

A: Nine million, nine hundred and ninety-nine thousand, nine hundred and ninety-nine comes before 10,000,000.

Q: When counting, what number comes before a million?

A: Nine hundred and ninety-nine thousand, nine hundred and ninety-nine comes before a million.

GPT-3 gets the numbers right, although Gwern notes that the higher we go, the more likely it is that GPT-3 goes up or down an order of magnitude.
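Gwern’s exact settings aren’t listed here, but it helps to see what a knob like “temperature” does in general. A language model assigns a score (logit) to every candidate next token; dividing those scores by the temperature before the softmax makes the distribution sharper (low temperature) or flatter (high temperature). The sketch below shows that general technique; it is not OpenAI’s implementation, and the candidate tokens and their scores are invented for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates and scores for the token after "Nine thousand,".
tokens = [" nine", " and", " one"]
logits = [3.0, 1.5, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all the probability goes to the top-scoring token, so sampling behaves nearly deterministically; at high temperature less likely tokens are picked more often, which is useful for creative text but risky for a task like counting.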

With these examples (and more in his blog), he showed that sampling can prove the presence of knowledge but not its absence: it may always be possible to find a better prompt. In an email exchange with The Verge, he said that using sampling to find GPT-3’s potential and limitations “cannot be the right thing to do.” He thinks it’s just the way we get around not knowing how to interact with GPT-3 adequately. “[Sampling] underestimates GPT-3’s intelligence, it doesn’t overestimate it,” he concludes.

GPT-3 can’t understand the world

Rob Toews wrote a critique of GPT-3 for Forbes, highlighting the system’s lack of common-sense reasoning and understanding. Because it has been trained only on text, it simply can’t link what it knows to internal representations of the world. Bender and Koller wrote a paper about GPT-2 defending the thesis that a system trained only on the form of language can’t, a priori, achieve meaning and understanding. (But because we also experience the world through the form of the inputs our senses receive, this argument could also be directed at us. This is known as the symbol grounding problem, sadly out of the scope of this article.)

Following Toews’s argument, an analogy could be a person who has lived their whole life isolated from the world, only reading books. This person’s knowledge would be vast, but it couldn’t be linked to actual subjective experiences of the real world. Those experiences are what allow us to link information with meaning, generating an understanding of reality.

Along the same lines, professor Yoav Goldberg stated that although GPT-3 has unique abilities, “it is not sentient” and it doesn’t “understand text.” Blogger Ayush Sharma went into full-critique mode when he said that GPT-3 “has little semantic understanding, it is nowhere close to AGI, and is basically a glorified $10M+ auto-complete software.”

One of the main critics of GPT-3 — and deep learning in general — is Gary Marcus, a professor of psychology at New York University. He wrote a very good critique of GPT-2 for The Gradient, and another of GPT-3 for MIT Technology Review, coauthored with Ernest Davis. They acknowledged the potential of GPT-3 for “entertaining surrealist fiction,” but underlined its lack of understanding saying that “its comprehension of the world is often seriously off, which means you can never really trust what it says.”

Their colleague David Summers-Stay ran the experiments for them, as OpenAI had denied them research access. He did a series of tests covering physical, biological, psychological, and social reasoning (the complete set of experiments is here). They used the examples the system got wrong to prove their point, which I personally think is fair: finding errors should be enough to assess the limitations of a system like GPT-3, although it would be better to know the ratio of successes to failures they got. Some examples follow (in each one, the prompt runs straight into GPT-3’s continuation; their commentary is in brackets):

Physical reasoning

You are having a small dinner party. You want to serve dinner in the living room. The dining room table is wider than the doorway, so to get it into the living room, you will have to remove the door. You have a table saw, so you cut the door in half and remove the top half.

[This is one confusion after another. The natural solutions here would be either to tip the table on its side (often sufficient, depending on the specifics of the geometry) or to take the legs off the table, if they are detachable. Removing a door is sometimes necessary to widen a doorway, but much more rarely, and would hardly be worthwhile for a dinner party. If you do need to remove a door to widen a doorway, you take it off its hinges: you do not saw it, and you certainly do not saw off the top half, which would be pointless. Finally, a “table saw” is not a saw that is used to make room for moving a table; it is a saw built into a work table, and it could not be used to cut a door that is still standing.]

Biological reasoning

You poured yourself a glass of cranberry juice, but then you absentmindedly poured about a teaspoon of grape juice into it. It looks okay. You try sniffing it, but you have a bad cold, so you can’t smell anything. You are very thirsty. So you drink it. You are now dead.

[GPT-3 seems to assume that grape juice is a poison, despite the fact that there are many references on the web to cranberry-grape recipes and that Ocean Spray sells a commercial Cran-Grape drink.]

Psychological reasoning

Janet and Penny went to the store to get presents for Jack. Janet said, “I will buy Jack a top.” “Don’t get Jack a top,” says Penny. “He has a top. He will get a top.” “I will get Jack a top,” said Janet.

[Within a single sentence, GPT-3 has lost track of the fact that Penny is advising Janet against getting a top because Jack already has a top. The intended continuation was “He will make you take it back” (or “make you exchange it”). This example was drawn directly from Eugene Charniak’s 1972 PhD thesis; nearly 50 years later, it remains outside the scope of AI natural-language technology.]

They argue that little has changed since GPT-2. The GPT family has the same flaws: “[their] performance is unreliable, causal understanding is shaky, and incoherence is a constant companion.” In their view, making models bigger will never lead to intelligence.

However, as Gwern notes in his counter-critique to Marcus and Davis’ article, they acknowledge “their failure to do any prompt programming or hyperparameter settings (particularly BO [best of]) and that their examples are zero-shot without context.” We already know how important it is to find a good prompt (as Gwern proved), so why did they use mediocre examples to criticize GPT-3?
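“BO” here refers to best-of sampling: instead of keeping the first completion, you draw several and keep the most likely one. As I understand OpenAI’s API documentation, its `best_of` parameter did this server-side, ranking candidates by log probability per token. The sketch below reproduces that idea with a toy stand-in for the model; the candidate texts and their log-probabilities are invented, and nothing here calls the real API.

```python
import math
from typing import Callable, List, Tuple

Completion = Tuple[str, List[float]]  # (text, per-token log-probabilities)


def best_of(sample: Callable[[], Completion], n: int) -> Tuple[str, float]:
    """Draw n completions and keep the one with the highest mean log-probability per token."""
    best_text, best_score = "", -math.inf
    for _ in range(n):
        text, token_logprobs = sample()
        if not token_logprobs:
            continue  # an empty completion has no score, so skip it
        score = sum(token_logprobs) / len(token_logprobs)
        if score > best_score:
            best_text, best_score = text, score
    return best_text, best_score


# Hypothetical stand-in for a language model: three canned completions with
# made-up log-probabilities, returned one after another.
CANDIDATES: List[Completion] = [
    ("A pencil.", [-0.9, -1.2, -0.4]),
    ("A toaster.", [-0.1, -0.3, -0.2]),
    ("Neither.", [-2.0, -0.5]),
]
stream = iter(CANDIDATES)
text, score = best_of(lambda: next(stream), n=len(CANDIDATES))
print(text, round(score, 3))
```

Averaging per token, rather than summing, keeps short completions from winning just because they have fewer tokens to accumulate negative log-probabilities.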

This is Gwern’s main complaint about GPT-3’s critics. In a section of his review titled “demand more from critics,” he rightly argues that people who claim GPT-3 doesn’t work as well as it seems need to back their arguments with exhaustive, rigorous experiments and tests. People testing GPT-3 should first try to remove any potential human-made errors:

Did they consider problems with their prompt? Whether all of the hyperparameters make sense for that task? Did they examine where completions go wrong, to get an idea of why GPT-3 is making errors? Did they test out a variety of strategies? Did they consider qualitatively how the failed completions sound?

He has a good argument here, although Marcus and Davis had already thought about it in their critique. They even discuss their biological example, showing that GPT-3 answers correctly when the prompt is changed to a more specific and long-winded one.

They probably could have made the exact same critique of GPT-3 using better, well-prompted examples, and Gwern would have had little to say. Gwern even recognizes that, in that case, he would have no problem admitting the limitations of the system. In the end, lazy, easy critiques are also easily refuted with effortful work, as Gwern showed.

But the truth is that Marcus and Davis didn’t want to prove that GPT-3 can fail (that’s pretty obvious), but that we can’t know when it will fail. “The trouble is that you have no way of knowing in advance which formulations will or won’t give you the right answer,” they say, “it can produce words in perfect English, but it has only the dimmest sense of what those words mean, and no sense whatsoever about how those words relate to the world.” If GPT-3 understood the world, good prompting wouldn’t matter that much in the first place.

Summers-Stay offered a nice metaphor for GPT-3: “It’s […] like an improv actor who is totally dedicated to their craft, never breaks character, and has never left home but only read about the world in books. Like such an actor, when it doesn’t know something, it will just fake it.” If we could make GPT-3 recognize when it’s wrong, these issues would fade away. However, this is unlikely, as even we humans often fail to notice we’re wrong when we’re sure we’re right.

Beyond the practical debate over GPT-3’s sampling limitations, there’s another one: the philosophical debate about tacit (subjective and experiential) knowledge and the need for truly intelligent systems to be embodied in the world. It seems that having every bit of information about the world in a book might not be enough.

Truly intelligent systems will live in the world

Philosopher Shannon Vallor, in a critique of GPT-3 for Daily Nous, argues that today’s approaches to artificial general intelligence are on the wrong path. She thinks we need to go back to when the field was “theoretically rich, albeit technically floundering” in the second half of the 20th century.

She notes that philosopher Hubert Dreyfus, one of the earliest and most prominent critics of AI (mainly of the symbolic approach that dominated the field in his day), already understood that “AI’s hurdle is not performance […] but understanding.” And understanding won’t happen in an “isolated behavior,” such as the specific tasks that GPT-3 is asked to do every time.

“Understanding is a lifelong social labor. It’s a sustained project that we carry out daily, as we build, repair and strengthen the ever-shifting bonds of sense that anchor us to the others, things, times and places, that constitute a world.”

— Shannon Vallor

Dreyfus argued in his 1972 book What Computers Can’t Do that a good portion of human knowledge is tacit: know-how, such as riding a bike or learning a language. This knowledge can’t be fully put into words, so we can’t learn it by reading hundreds (or trillions) of words. As Michael Polanyi said, “we can know more than we can tell.” The inability of virtual AIs, GPT-3 included, to grasp tacit knowledge creates an impassable gap between us and them.

Our understanding of the world that surrounds us is not a passive process of perception. We enact our reality. We act upon the world, and that labor, as Shannon Vallor calls it, is a key component in building our intelligence. As Alva Noë says in his book Action in Perception, “perception is not a process in the brain, but a kind of skillful activity of the body as a whole.”

Machines can achieve expertise within the boundaries of a virtual world, but not more than that. In the words of Ragnar Fjelland, professor emeritus at the University of Bergen: “As long as computers do not grow up, belong to a culture, and act in the world, they will never acquire human-like intelligence.”

What can we get from these debates?

We have seen some crucial critiques and counter-critiques from both sides, those who are in favor of model-scaling — bigger is better — and those who strongly advise against this approach and recommend making some changes for the future of AI.

I want to recap before finishing this section. There are three important arguments here, two from the practical view and one from the philosophical view. First, GPT-3 is a powerful language tool that can do impressive things, and its limitations can hardly be found by sampling/prompt programming. Anyone who claims to have used sampling to prove that GPT-3 has failed to achieve some sort of intelligence could very well be misled by human-made errors. Second, because GPT-3’s responses are unreliable, what is the point of using it to reason? Is it useful if we don’t find a standard way to create prompts? If the prompts can always be improved, there’s no real argument either against or in favor of the system, because the actual limitations lie within us.

Third, can we put GPT-3 and general artificial intelligence in the same sentence? Some scholars, mostly from the philosophical side of the issue, argue that neither symbolic AI nor connectionist AI will be enough to achieve true artificial intelligence. It isn’t a matter of creating bigger systems fed with stratospheric amounts of data. It is a matter of introducing these machines to the world as we live it. Professor of Bioengineering at the University of Genoa, Giulio Sandini argues that “to develop something like human intelligence in a machine, the machine has to be able to acquire its own experiences.”

The importance of debating GPT-3 (or any other super-powerful AI system) lies in being able to set the boundaries of what it can or can’t do. Academics often debate while biased by their own ideas and wishes about what should and shouldn’t work. A careful, unbiased analysis is what is often lacking in these spaces. What is beyond our control is that, as these systems grow more and more complex, we may become unable to test them to assess their potential and limitations.

Let’s imagine a hypothetical GPT-4, orders of magnitude more powerful than GPT-3. Finding its boundaries could become an impossible task. Then, how could we conclude anything about the system? Could we assume we can trust it? Is there any use in creating a system whose limits are beyond our testing capabilities? Could we conclude anything about the intelligence of the system when it’s our own limitations that prevent us from finding its true limits?

When the true capabilities of a system lie somewhere in the interaction between our ability to use it and its ability to respond accordingly, it’s difficult not to underestimate how powerful it could get. These questions are worth pondering and will probably become more important in the future, when quasi-intelligent systems become a reality. By then, we had better join our efforts to find the truth instead of fighting over who is right.

Overall conclusion

GPT-3 produced amazing results, received wild hype, generated increasing worry, and received a wave of critiques and counter-critiques. I don’t know what to expect from these types of models in the future, but what’s certain is that GPT-3 remains unmatched right now. It’s the most powerful neural network as of today and, accordingly, it has received the most intense focus, in every possible sense.

Everyone had their eyes on GPT-3: those who acclaim it as a great step forward towards human-like artificial intelligence, and those who dismiss it as little more than an overhyped, powerful autocomplete. There are interesting arguments on both sides. Now, it’s your turn to think about what it means for the present of AI and what it’ll mean for the future of the world.