Abstract

The complex traffic network spatial correlation and the characteristic of high nonlinear and dynamic traffic conditions in the time are the challenges to accurate traffic flow forecasting. Existing spatiotemporal models attempt to utilize the static graph to explore spatial dependency and employ RNN-based model to capture temporal dependency. However, the static graph fails to reflect the dynamic changeable correlation between each node. That is some nodes have a strong connection in a real traffic network, whereas a weak connection is in a static predefined graph. To overcome the above problems, we propose a spatial dynamic graph convolutional network (SDGCN) for traffic flow forecasting. With the support of an attention fusion network in graph learning, SDGCN generates the dynamic graph at each time step, which can model the changeable spatial correlation from traffic data. By embedding dynamic graph diffusion convolution into gated recurrent unit, our model can explore spatio-temporal dependency simultaneously. Moreover, to handle long sequence forecasting, ReZero transformer is utilized to detect the global temporal correlation capturing. The experiments are conducted on two public datasets. The experimental results demonstrate the superior performance of our network.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Traffic flow forecasting is a critical component in intelligent transportation system (ITS) [1]. The forecasting accuracy of future traffic flow has been improved by deep learning techniques [2,3,4]. Accurate traffic flow forecasting benefits many applications, including autonomous vehicle operations, energy and smart grid optimization, logistics and supply chain management [5].

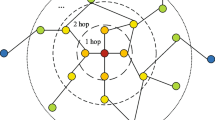

Early methods of traffic flow prediction can be mainly divided into knowledge-driven approaches and data-driven approaches [6]. Traditional knowledge-driven methods apply queuing theory and simulate user behaviors in traffic. Knowledge-driven approaches consist of auto-regressive integrated moving average (ARIMA) model and Kalman filtering [7], etc. These methods are based on the ideal stationary assumption [8]. However, a traffic network is a dynamically changing network with complex spatiotemporal dependency. So these methods are not suitable for dynamic traffic flow forecasting. In order to capture the complex temporal correlation of historical traffic flow of the road networks, recurrent neural networks (RNNs) are introduced to predict traffic flow [9]. Meanwhile, There is an obvious spatial correlation between adjacent nodes of the transportation network. Convolutional neural networks (CNNs) are applied to extract spatial correlation of traffic network [9, 10]. CNNs handle grid structures well. However, the road network is a typical non-Euclidean data, which is a topology structure. Those methods are poor in capturing non-Euclidean correlation to forecast traffic flow sequence [11]. To deal with this issue, Graph Neural Networks (GCNs) was proposed to process spatiotemporal information [12,13,14,15]. GCNs is effective to exploit the non-grid local spatial feature through the Laplacian matrix. GCNs combined with RNNs or Gated-CNN is widely adopted in spatial-temporal graph modeling in traffic network [5, 14, 16]. Different from CNNs and RNNs, GCNs integrating with RNNs or Gated-CNN achieve better performance with spatial and temporal modeling. However, these previous methods still have the following challenges.

First, it is still challenging to effectively extract the dynamics of traffic data in both temporal and spatial dimensions. Most GCN-based methods adopt static graphs and fixed adjacency matrices to capture spatial correlations for feature representation of nodes [14]. However, the correlation of traffic conditions between sensors in road networks changes dynamically over time [17]. GCNs based on static graphs are unable to dynamically capture the features of nodes [18]. Spatial-Temporal Graph Convolution Network (STGCN) based on a static laplacian matrix ignores many dynamic spatial relations hiding in the traffic data [19, 20]. Though Graph WaveNet proposed Gated TCN and self-adaptive adjacency matrix to extract spatial and long-distance temporal information separately, it can not mine the correlation between different dynamic graphs in time steps [14]. Spatial-Temporal Fusion Graph Neural Networks (STFGNN) introduce spatial-temporal fusion graph to simultaneously extracts spatiotemporal features, but it ignores modeling dynamic graphs [16].

Second, current networks have insufficient capture of time series in the time dimension. The forward and backward signals in RNNs must traverse long cyclic paths in the network. The farther the distance between input and output components, the more difficult it is to learn long-term correlation efficiently [21]. The RNN-based network is easy to cause the problem of gradient disappearance and explosion, so its effect on capturing correlation in long sequence model is unsatisfactory [9, 22]. Temporal Convolutional Network (TCN), a special one-dimensional CNN, is a general structure to extract features in time dimension [14, 16, 23]. Considering the limited receptive field of TCNs, they also have difficulty capturing long-range dependencies [24]. To capture temporal long-term patterns, TCN requires a stack of several convolutional layers to connect any two positions in the sequence. But this impairs the ability of TCNs to learn long-term dependencies [24].

To settle the above shortcomings, we propose a novel Spatial Dynamic Graph Convolutional Network (SDGCN) to build dynamic spatio-temporal graphs. First, Not only the static graph is utilized to explore the spatial dependency, but also the dynamic graph as well. A new dynamic graph is generated at each time step by the current traffic features which are captured by spatial mechanism and the past traffic features. In this way, every dynamic graph can preserve the hidden spatial correlation according to the dynamic traffic condition at different times. Second, motivated by STGNN [3], we design the recurrent neural network with Rezero [25] transformer to capture short-term and long-term time series. The dynamic diffusion convolution is embedded into the recurrent neural network [15] to learn global spatial correlation and temporal correlation. Our main contributions to this work are summarized as follows.

-

We propose an attention fusion network with spatial attention and a gated mechanism to generate a dynamic graph at a time node without any prior knowledge. The network is designed to preserve spatial dependency more effectively with dynamic node embedding.

-

We propose an effective module, the dynamic diffusion graph convolutional recurrent module, to capture global spatial correlation and shallow temporal correlation simultaneously. The core idea is to embed the dual dynamic diffusion convolution into the recurrent neural network in a way that in each recurrent unit, the dynamic diffusion graph network tackles the spatial dependency from the past spatial features which is the output from the last recurrent unit and the current traffic condition. To enhance the capacity in long-term series capturing, we add Rezero transformer in the temporal module to extract long temporal sequences.

-

We evaluate our model on two real-world traffic datasets with extensive experiments. The experiments demonstrate that the SDGCN outperforms the state-of-art methods.

2 Related works

2.1 Traffic flow forecasting

We aim to learn a function to predict the traffic flow in the future by mapping the historical traffic flow. A traffic network can be denoted as G = (V,E,A), where V represents the set of nodes |V | = N and E is the set of edges. The spatial adjacency matrix is represented as \(A \in \mathbb {R}^{N\times N}\). Aij = 1 means there is an edge between node i and node j, otherwise 0. \(X_{t}\in \mathbb {R}^{N\times D}\) represented the traffic signal, where D is the number of traffic features.(e.g., road network occupancy, traffic speed, capacity) in time step t, Given the historical traffic flow X(t−P+ 1):t and the future X(t+ 1):(t+Q), the function is formulated as:

where \(X_{(t-P+1):t}=(X_{t-P+1}, X_{t-P+2}, \ldots , X_{t}) \in \mathbb {R}^{P\times N\times D}\) and \(\hat {X}_{(t+1):(t+Q)}=(\hat {X}_{t+1}, \hat {X}_{t+2}, \ldots , \hat {X}_{t+Q})\).

2.2 Graph convolution

Graph convolution is an effective operation in graph-based tasks to extract structural features of nodes. Graph WaveNet [14], STFGNN [16], [12] etc. adopt graph convolution to capture spatial correlation to predict traffic flow. In application of classification and clustering, Graph ST-GDN convolution can learn hidden node representations, such us cluster-gcn [26], streaming GNN [27] and LGL [28]. Let \(\tilde {A}\in \mathbb {R}^{N\times N}\) represent normalized adjacency matrix with self-loops A + I, \(X\in \mathbb {R}^{N\times D}\) denote traffic data, \(Y\in \mathbb {R}^{N\times D}\) is output. Kipf and Welling [29] defined Graph convolution layer as:

where \(W\in \mathbb {R}^{D\times N}\) is a learnable parameter matrix. In addition, many GCN variants have emerged. When the graph direction is double, some methods proposed diffusion graph convolution extract features from backward and forward diffusion [5, 14, 16, 30]. Though a fixed adjacency matrix is limited to learning effective correlations of network with missing genuine relations, some methods proposed adaptive GCN which can capture the hidden dependency to complete incomplete information [14, 31]. When graph network is changing dynamically with many impacting factors, multi-attention GCN with encoder and the decoder was introduced to capture dynamic graphic information [17, 24].

2.3 Attention mechanism

Attention is a fundamental operation to model the dependency between a collection of values and the target under a query. Attention mechanism has been widely applied in natural language processing (NLP) [32, 33], image recognition [34, 35], protein identification [36, 37], recommended system [38] and etc.

Self-attention which emphasizes many-to-many is a variant of Attention mechanism. In recent years, multi-head self-attention has also been applied to traffic flow prediction [3, 17, 24, 31]. First, many attention models are currently attached to the Encoder-Decoder framework. The framework stacks multiple layers to capture the dynamics of traffic data more effectively with multi-head attention [5, 17, 24]. Second, multi-head attention is encapsulated in transformer layers which follow recurrent network structure. The processed sequence information via recurrent network is passed into the attention layer to aggregate information [3]. Lastly, Multi-head attention is also embedded in other types of network structures to predict traffic flow [31, 39, 40]. In this paper, we adopt multi-head self-attention and recurrent neural network to capture the complex dynamics and long-term patterns of traffic flow.

3 Methodology

The framework of Spatial Dynamic Graph Convolution Network is presented in Fig. 1. It consists of three components, graph learning, dynamic diffusion convolutional recurrent module, and rezero transformer. In this section, we first give the brief introduction about three components. Next, we describe them in more details in following subsection.

The framework of SDGCN. At each time step, the dynamic graphs are generated in Graph Learning Module. All dynamic graphs are utilized in graph convolution recurrent units (GCRU) to capture spatial and temporal dependency simultaneously. Rezero transformer layers are to capture global temporal dependency

Graph learning

The framework of Spatial Dynamic Graph Convolution Network is presented in Fig. 1. It consists of three components, graph learning, dynamic diffusion convolutional recurrent module, and rezero transformer. In this section, we first give a brief introduction to three components. Next, we describe them in more detail in the following subsection.

Dynamic diffusion convolutional recurrent module

This module is made of several graph convolutional recurrent units(GCRU). To explore spatial dependency more effectively, we design the dynamic diffusion convolution in each GCRU. Further, we replace the matrix multiplications in GRU with dynamic diffusion convolution to capture the spatial and temporal correlation simultaneously.

Rezero transfomer layer

To better capture the temporal dependency in long sequence traffic flow, based on the standard transformer, we employ rezero transformer [25] to capture global temporal correlations, the architecture of the rezero transformer is presented in Fig. 3.

3.1 Graph learning

Traffic condition is highly dynamic and the correlations between each node are changed with time as well. To model the dynamic spatial dependency, We design an attention fusion network to learn hidden representations from the traffic data and generate a dynamic graph to preserve hidden spatial dependency more effectively. It contains spatial attention and gated fusion, and graph generator presented in Fig. 2.

Spatial attention

At each time step, Attention mechanism [41] is used to adaptively capture current spatial correlation by assigning different weights to different nodes. We project traffic signal \(X_{t} \in \mathbb {R}^{N\times D}\) into three matrices Qt, Kt, Vt. Qt, Kt with dimension dk and Vt with dimension dv. Qt = XtWQ, Kt = XtWK, Vt = XtWV. \(W_{Q}\in \mathbb {R}^{D\times d_{k}}\), \(W_{K} \in \mathbb {R}^{D\times d_{k}}\), \(W_{V}\in \mathbb {R}^{D\times d_{v}}\) are learned parameters. The attention function Attention(Qt,Kt,Vt) is as follows:

Multi-Head Attention can jointly capture information from different representation subspaces at different positions [41]. Based on this, we expand the attention mechanism to multi-head attention to capture more comprehensive features.

\(W^{O} \in \mathbb {R}^{(k\cdot d_{v})\times d_{s}}\) is a projection matrix, k is the number of attention head and ds is the output dimension from multi-head atention. \(Attention({Q_{t}^{i}},{K_{t}^{i}},{V_{t}^{i}})\) is the i-th attention output at time step t. Further, \(MAH^{t} \in \mathbb {R}^{N\times d_{s}}\) is short for MultiHead(Qk,Kt,Vt).

Gated fusion

The correlation between road nodes is not only related to the current traffic conditions but also related to the traffic conditions in the past period time. To model this property, the gated fusion is adopted to adaptively fuse the spatial representation at the current time \(MAH^{t} \in \mathbb {R}^{N\times d_{s}}\) and the past time \(H_{t-1} \in \mathbb {R}^{N\times h}\), h is the dimension of hidden state.

\(AF_{t}\in \mathbb {R}^{N\times d_{s}}\) viewed as the attention based graph filter and \(H^{\prime }_{t-1} \in \mathbb {R}^{N\times d_{s}} \) is the matrix mapped from \(H_{t-1} \in \mathbb {R}^{N\times h}\) to ensure the matrix dimension is the same as MAHt. Wz,1,Wz,2,bz,Wh,bh are the learnable parameters.

Graph generator

The main idea of the Graph generator is to learn an adjacency matrix to capture hidden representation from traffic flow data adaptively during end to end learning. Following MTGNN [42], DGCRN [22], Element-wise multiplication between attention filter AFt and the model parameters \({\Theta } \in \mathbb {R}^{N\times d_{s}}\) is utilized to dynamically adjust the correlation between each node to obtain dynamic node embedding. The formula of generating a graph is described as follows:

where Θ1 and Θ2 are learned parameters during end to end learning. β is a hyper-parameter for controlling the saturation rate of the activation function. 〈 , 〉 is Hadamard product. We name \(D{E_{1}^{t}} \in \mathbb {R}^{N\times d_{s}}\) as the source dynamic node embedding and \(D{E_{2}^{t}} \in \mathbb {R}^{N\times d_{s}}\) as the target dynamic node embedding. By calculating the similarity between source dynamic node embedding \(D{E_{1}^{t}}\) and target dynamic node embedding \(D{E_{2}^{t}}\), the dynamic adjacency matrix DAt can be generated. tanh(⋅) and ReLU(⋅) are the activation function.

3.2 Dynamic diffusion convolutional module

DCRNN [5] is another graph convolution method to explore node representation, which proposed the bidirectional diffusion convolution to capture more influence from both the upstream and the downstream traffic. The formula is described as follows:

where \(P_{f}=D_{o}^{-1}A\) and \(P_{b}=D_{I}^{-1}A^{T}\) represents the transition matrix. Do = diag(A1) and \(D_{I}=diag(\textbf {A}^{T}\textbf {1})\) are the out-degree diagonal matrix and \(\textbf {1}\in \mathbb {R}^{N}\). \({P_{f}^{k}}\) and \({P_{b}^{k}}\) represents the power series of the transition matrix Pf, Pb. 𝜃k,f and 𝜃k,b are learnable parameters.

The Predefined graph reflects the stationary correlation between each node. Instead, a dynamic graph is changed with time to reflect the dynamic spatial correlation among traffic data. We combine the static graph and the dynamic graph in graph representation learning to detect hidden spatial dependency. The bidirectional diffusion convolution is embedded into graph convolution in graph representation learning. Especially, in the part of dynamic graph diffusion convolution, we consider the two-direction traffic flow influence in a dynamic graph at the same time.

where \(\tilde {DA}_{f}^{t}\) represents dynamic forward transition matrix. \(\tilde {DA}_{b}^{t}\) represents dynamic backward transition matrix. 𝜃k,f, 𝜃k,b, 𝜃k,df, 𝜃k,db are learnable parameters.

3.3 Temporal dependency module

We utilize the RNN-based model to capture temporal dependency. Gated Recurrent Units (GRU) with fewer parameters can effectively tackle gradient vanishing and capture long time series. To capture spatial and temporal correlation simultaneously, we replace matrix multiplications in GRU with the dynamic diffusion convolution and name it Graph Convolution Recurrent Unit (GCRU).

Where Xt denotes the traffic signal at time t, Ht− 1 denotes the output from GCRU at time t − 1. r(t), u(t) are reset gate and update gate at time t. ⋆ G denotes the dynamic diffusion convolution which is defined in Fig. 9. Θr, Θu, Θh are learned parameters for the diffusion convolution layer. σ(⋅) is the activation function.

To further explore global temporal dependency more effectively, STGNN [3] employs transformer [41] to learn the long-term temporal dependency. Inspired by it, we improve the transformer architecture with rezero [25] to enhance the capacity of model to handle the long-term series.

Rezero [25] can improve the signal propagation and get the fast convergent in deep transformer by improving model trivially dynamical isometry. Adopting the rezero to the shallow transformer can enhance the capacity of propagation in the transformer to explore more hidden long temporal dependency.

As shown in Fig. 3, the rezero transformer discards the LayerNorm and re-scaling the self-attention block to learn the global temporal dependency. Since multi-Head attention treats the different positional data equally while calculating the attention function, ignoring the positional relationship. So, before calculating attention scores, the output Ht from GCRU needs to combine with position encoding PEt to make the transformer aware of relative position among time series, which is considered as the input \(H^{\prime }_{t}\) to transformer layer. The position encoding PEt is defined by the sine and cosine function as follows:

where Ht,i denotes the feature of node i from GCRU output at time t, dmodel is the same dimension as the hidden state. The rest of rezero transformer is described as follows:

where \(HO^{\prime \ (i-1)} \in \mathbb {R}^{P\times N \times h}\) represents the output from last rezero transformer layer and \(MultiHead(H^{\prime \ (i-1)})\in \mathbb {R}^{P\times N \times h}\) is the output from multi-head attention. Multi-head attention and feed-forward network share the same learned residual weight parameter α.

3.4 Output layer

The spatial-temporal feature \(HO^{\prime \ (i)}\in \mathbb {R}^{M \times N \times h} \) from the last rezero transformer layer goes through a linear transformation. The result from the linear transformation is the final traffic flow prediction \(\hat {Y} \in \mathbb {R}^{Q\times N\times D}\).

where \(W_{o} \in \mathbb {R}^{h\times D},b_{o} \in \mathbb {R}^{N\times D}\) are learnable parameters. Further, we employ mean absolute error (MAE) as the loss function to train SDGCN.

4 Experiments

To verify the performance of SDGCN, we experiment on two public traffic datasets, METR-LA and PEMS-BAY. Utilizing METR-LA with complex traffic conditions and PEMS-Bay with uniform traffic conditions can verify the generalization and effectiveness of the model from many aspects. METR-LA traffic data was collected from 207 sensors on the highway of Los Angeles from March 1st, 2012 to June 30th, 2012. PEMS-BAY records for five months of 325 sensors traffic speed in the Bay Area, ranging from Jan 1st, 2017 to May 31st, 2017. The sensor distributions are shown in Fig. 4. More details about METR-LA and PEMS-BAY are presented in Table 1. We do the same data preprocessing as DCRNN [5]. The sensor graph is constructed by calculating the pairwise road network distances between sensors. The thresholded Gaussian kernel is used to build the static adjacency matrix. We divide two datasets respectively, in which 70% of data is used as the training set, 20% of data as the testing set and the remaining data is used for the validation set (Fig. 5).

The feature correlation between each sensor and the central sensor in different periods after diffusion convolution in the METR-LA dataset. The image in the first row represents the feature correlation in each period centered on the 115th sensor. The image in the second row represents the feature correlation of each period centered on the 158th sensor

4.1 Baselines

We compare the SDGCN with some traditional models and mainstream methods, which mostly are CNN-based and RNN-based. They are described as follows.

-

ARIMA. Auto-Regressive Integrated Moving Average model with Kalman filter [43], a classical and widely used method in time series prediction.

-

SVR. Support vector regression utilizes support vector machine to regression analysis, which is a traditional method in time series tasks as well.

-

FC-LSTM. Fully connected long short-term memory makes up for the lack of capturing long sequence dependency.

-

ASTGCN. Attention Based Spatial-Temporal Graph Convolution Networks [2], a CNN-based method, combines the spatial-temporal attention mechanism and spatial-temporal convolution to capture spatial-temporal correlation in three components, including recent time, daily time and week time.

-

GraphWaveNet. Graph WaveNet for Deep Spatial-Temporal Graph Model [14], a CNN-based method, designs a self-adaptive graph into graph convolution with dilated casual convolution.

-

STGCN. Spatio-Temporal Graph Convolution Networks [19], a CNN-based method, uses several graph convolutions and 1-D casual convolutions to forecast traffic data.

-

DCRNN. Diffusion convolutional recurrent neural network [5], a CNN-based and RNN-based method, employs the dual directional diffusion convolution on gated recurrent unit.

4.2 Experimental settings

We conduct our experiments under the Linux environment with one Intel(R) Xeon(R) Gold 6139 CPU @ 2.30GHZ and two NVIDIA Geforce RTX2080 Ti GPU cards. In our model, we set the batch size to 64 [44] and the hidden dimension h to 64 for METR-LA and PEMS-BAY datasets. In the graph learning part, the number of attention heads is set to 5, the output dimension from multi-head attention ds is 40 and the hyper-parameter β is 3. In GCRU, the depth of dynamic graph diffusion recurrent convolution layer is set to 1, and the depth of graph diffusion convolution K is 2. We set the number of rezero transformer layers as 1 and the number of attention heads in the transformer layer as 2. The Adam optimization [45] with an initial learning rate of 0.001 is utilized to train the model. The maximum epoch is set to 100. To avoid overfitting, we employ early stopping during training. Further, Mean Absolute Error(MAE), Root Mean Square Error(RMSE), and Mean Absolute Percentage Error(MAPE) are used as the evaluation metrics to demonstrate the effectiveness of our model.

4.3 Experimental results

Table 2 illustrates the performance of SDGCN and seven baselines for 15 minutes, 30 minutes, and 60 minutes. SDGCN outperforms both the temporal model (ARIMA, SVR, FC-LSTM) and the spatio-temporal model(ASTGCN, GraphWaveNet, STGCN, DCRNN) at different time periods. We observe the following experimental phenomenons.

-

As to the temporal models (ARIMA, SVR), their performance is the worst among all comparable methods, since traffic conditions depend on time and space, temporal models attempt to treat the traffic condition as independent time series to forecast traffic flow, which will ignore the influence of traffic conditions at the spatial level and damage the expression of node features.

-

As to the spatio-temporal model, ASTGCN model employs attention mechanism to capture spatial and temporal correlation as well, however, its performance is even worst than a simple architecture FC-LSTM, their model might lack the capacity to learn key information from complex graph structures and fail to explore long temporal dependency.

-

Benefits from the adaptive graph, which can be learned during end-to-end learning, the performance of GraphWaveNet works better than other spatio-temporal models (STGCN, DCRNN) in short-term traffic flow forecasting. The adaptive graph preserves hidden spatial dependency which the static graph doesn’t have which is helpful in short-term forecasting. However, the adaptive graph is different from the dynamic graph. The Adaptive graph is updated in each iteration, instead, the dynamic graph in SDGCN is updated in each time step, which can dynamically reflect the current traffic topology structure more effectively.

-

Compared with DCRNN, the second best performance model, the experiment indicates that SDGCN works better than DCRNN. SDGCN employs dual diffusion convolution to capture spatial dependency as well. The first different place between DCRNN and SDGCN is that SDGCN generates the dynamic graph at each time step, combing with the static graph to dual graph diffusion convolution. The dynamic graph provides more real-time node correlation to help the diffusion convolution to capture spatial dependencies more effectively. The second different place is that based on GRU, we employ Rezero transformer. Rezero transformer enhances the capacity to capture long-term series correlation with the key component of multi-head attention and rezero. The above two key differences make the SDGCN works better than DCRNN.

The METR-LA dataset is characteristic of complex traffic conditions, which will be more challenging for traditional graph convolution networks to capture complex spatial features. It’s natural that the performance of SDGCN in PEMS-BAY is better than the performance in METR-LA. However, no matter whether training in METR-LA or PEMS-BAY, SDGCN achieves high improvement in short-term and long-term traffic speed forecasting. On the 60 minutes horizon, PEMS-BAY even achieves promising forecasting and realizes nearly 5% enhancement. Further, we calculate the average performance of GraphWaveNet and AGCRN [46] by training 5 times on METR-LA. Figure 6 illustrates that SDGCN is better than GraphWaveNet and AGCRN which both use an adaptive graph to model the node correlation in traffic networks in forecasting complex traffic conditions.

we visualize the 15 minutes ahead forecasting result and the real result in Fig. 7. SDGCN outputs the smooth prediction in METR-LA and PEMS-BAY and accurately predicts the trend of traffic speed in a day. We also observe that the accuracy of 15 minutes ahead is better than one hour ahead. 15 minutes ahead of forecasting can detect more mutations, which is helpful to improve prediction accuracy. Showing at Figs. 8 and 9, Advanced forecast in 15 minutes more similar to the real traffic condition in an hour compared with one hour ahead traffic forecasting.

4.4 Ablation experiments

To confirm the effectiveness of different components in SDGCN. We conduct an ablation experiment on five variants which are introduced as follows.

-

w/o fusion. In graph learning, it just contains attention filter AF to generate a dynamic adjacency matrix, without combing the previous traffic feature.

-

w/o wttention. In graph learning, we replace the multi-head attention with static graph convolution to generate graph feature filter.

-

w/o diffusion convolution. The diffusion convolution in dynamic diffusion convolutional Module was replaced by graph convolution to capture spatial dependency.

-

w/o DAf ~,DAb ~. In dynamic graph diffusion convolution, we discard the dynamic graph diffusion convolution, remaining the static graph diffusion convolution.

-

w/o Pf,Pb. It is the opposite of w/oDA, only dynamic graph diffusion convolution is worked.

-

w/o Rezero. We utilize the encoder part of transformer to conduct the experiment.

In Table 2, compared with the result of complete SDGCN, the performance of six variants all declined on METR-LA, especially variant of w/o attention, w/o DAf ~,DAb ~ in long series forecasting and w/o Pf,Pb. On PEMS-BAY, the performance of diffusion convolution variants is close to the graph convolution’s results. The ablation phenomenon proves that each component in SDGCN is effective, except for the use of diffusion convolution to explore spatial features on the PEMS-BAY dataset. Further, we draw some conclusions as follows:

-

For the attention filter. Multi-Head Attention plays an important role in capturing spatial correlation. Different from the graph convolution which is limited to the graph structure, it treats each node equally. Each node can be influenced by the distant node, multi-head attention can model this property to capture more global spatial correlation. Therefore, benefitting from using multi-head attention to generate graphs, our model efficiently explores more hidden features. Further, we discuss the effectiveness of the number of attention heads on spatial feature extraction, illustrating in Table 3. We observe that when K = 5, the model average performance is the best on the METR-LA datasets. On the PEMS-BAY datasets, the performance is close when k = 4 or k = 5. The probable reason is that different attention heads offer more different views to extract spatial features, helping explore more effective spatial dependency.

-

For dynamic graph and static graph. Combing the static graph diffusion convolution and the dynamic graph diffusion convolution to do graph representation learning works better than the variants of just keeping one of them. The static graph provides the existing graph information and the dynamic graph provides the newest graph relationship, both of them help the model achieve high performance in traffic forecasting tasks.

-

For the diffusion convolution. We observed that the performance of diffusion convolution on METR-LA works better than on PEMS-BAY datasets, the performance in METR-LA is improved by 2.74%. As to PEMS-BAY, the performance of stacking multi-graph neural network layers is the same as diffusion convolution to capture the spatial node features. The probable reason is that diffusion convolution is more effective in capturing the complex spatial dependence between upstream and downstream with the help of a bidirectional transfer matrix. Further, we visualize the effect of diffusion convolution at different times and different central sensors on METR-LA datasets. As Fig. 5 shows, Whether centered on the 115th sensor or the 158th sensor, the correlation of surrounding sensors gradually weakens from inside to outside at different periods, which is consistent with the effective capture of forward-backward feature relations by diffusion convolution.

Besides, fusing the previous traffic feature to generate the dynamic graph also slightly improves the effective graph learning in our model. As Table 2 shows, adding rezero to transformer prompts the model’s capacity in long temporal correlation learning, and the performance is nearly enhanced to 4%.

5 Conclusion

In this paper, we propose a spatial dynamic graph convolutional network to realize the traffic flow forecasting tasks. We design an attention fusion network to generate dynamic graphs without any prior knowledge to preserve hidden spatial dependency. Moreover, we propose making dynamic Graph diffusion convolution embed into the gated recurrent unit to capture spatial-temporal dependency simultaneously. Further, the rezero transformer in SDGCN is to enhance the model capacity to explore long-term temporal dependency. Extensive experiments on two public traffic datasets demonstrate that our SDGCN outperforms the state of art methods.

References

Huakang L u, Ge Z, Song Y, Jiang D, Zhou T, Qin J (2021) A temporal-aware lstm enhanced by loss-switch mechanism for traffic flow forecasting. Neurocomputing 427:169–178

Guo S, Lin Y, Feng N, Song C, Wan H (2019) Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 922–929

Wang X, Ma Y, Wang Y, Jin W, Wang X, Tang J, Jia C, Jian Y u (2020) Traffic flow prediction via spatial temporal graph neural network. In: Proceedings of the web conference 2020, pp 1082–1092

Yang S, Li H, Luo Y u, Li J, Song Y, Zhou T (2022) Spatiotemporal adaptive fusion graph network for short-term traffic flow forecasting. Mathematics 10(9):1594

Li Y, Yu R, Cyrus S, Liu Y (2018) Diffusion convolutional recurrent neural network: data-driven traffic forecasting. In: International conference on learning representations

Cui Z, Huang B, Dou H, Cheng Y, Guan J, Zhou T (2022) A two-stage hybrid extreme learning model for short-term traffic flow forecasting. Mathematics 10:2087

Cai L, Zhang Z, Yang J, Yidan Y u, Zhou T, Qin J (2019) A noise-immune kalman filter for short-term traffic flow forecasting. Phys: Stat Mech Appl 536:122601

Zhou T, Jiang D, Lin Z, Han G, Xuemiao X u, Qin J (2019) Hybrid dual kalman filtering model for short-term traffic flow forecasting. IET Intell Transp Syst 13(6):1023–1032

Yu H, Wu Z, Wang S, Wang Y, Ma X (2017) Spatiotemporal recurrent convolutional networks for traffic prediction in transportation networks. Sensors 17(7):1501

Du B, Peng H, Wang S, Md ZAB, Wang L, Gong Q, Liu L, Li J (2019) Deep irregular convolutional residual lstm for urban traffic passenger flows prediction. IEEE Trans Intell Transp Syst 21(3):972–985

Cai L, Lei M, Zhang S, Yidan Y u, Zhou T, Qin J (2020) A noise-immune lstm network for short-term traffic flow forecasting. Chaos 30(2):023135

Zhang X, Huang C, Yong X u, Xia L, Dai P, Bo L, Zhang J, Zheng Y u (2021) Traffic flow forecasting with spatial-temporal graph diffusion network. In: Proceedings of the AAAI conference on artificial intelligence, vol 35, pp 15008–15015

Sun K, Zhu Z, Lin Z (2020) Adagcn: adaboosting graph convolutional networks into deep models. In: International conference on learning representations

Wu Z, Pan S, Long G, Jing J, Zhang C (2019) Graph wavenet for deep spatial-temporal graph modeling. In: Proceedings of the 28th international joint conference on artificial lntelligence, IJCAI ’19. AAAI Press, pp 1907–1913

Zonghan W u, Pan S, Chen F, Long G, Zhang C, Philip S Y u (2020) A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learning Syst 32(1):4–24

Li M, Zhu Z (2021) Spatial-temporal fusion graph neural networks for traffic flow forecasting. In: Proceedings of the AAAI conference on artificial intelligence, vol 35, pp 4189–4196

Zheng C, Fan X, Wang C, Jianzhong Qi (2020) Gman: a graph multi-attention network for traffic prediction. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 1234–1241

Chen C, Li K, Teo SG, Zou X, Wang K, Wang J, Zeng Z (2019) Gated residual recurrent graph neural networks for traffic prediction. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 485–492

Bing Y u, Yin H, Zhanxing Zhu (2018) Spatio-temporal graph convolutional networks: a deep learning framework for traffic forecasting. IJCAI’18. AAAI Press, pp 3634–3640

Zhang X, Haghani A, Yang S (2019) Is dynamic traffic sensor network profitable for network-level real-time information prediction? Transport Res Part C: Emerging Technol 102:32–59

Hochreiter S, Bengio Y, Frasconi P, Schmidhuber J et al (2001) Gradient flow in recurrent nets: the difficulty of learning long-term dependencies

Li F, Feng J, Yan H, Jin G, Yang F, Sun F, Jin D, Li Y (2021) Dynamic graph convolutional recurrent network for traffic prediction: benchmark and solution. ACM Trans Knowl Discovery Data (TKDD)

Zhao L, Song Y, Zhang C, Liu Y u, Wang P u, Lin T, Deng M, Li H (2019) T-gcn: a temporal graph convolutional network for traffic prediction. IEEE Trans Intell Transp Syst 21(9):3848–3858

Guo S, Lin Y, Wan H, Li X, Cong G (2021) Learning dynamics and heterogeneity of spatial-temporal graph data for traffic forecasting. IEEE Trans Knowl Data Eng

Bachlechner T, Majumder BP, Mao H, Cottrell G, McAuley J (2021) Rezero is all you need: fast convergence at large depth. In: Uncertainty in artificial intelligence. PMLR, pp 1352–1361

Chiang Wei-Lin, Liu X, Si S i, Li Y, Bengio S, Hsieh C-J (2019) Cluster-gcn: an efficient algorithm for training deep and large graph convolutional networks. In: Inproceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp 257–266

Wang J, Song G, Yi W u, Wang L (2020) Streaming graph neural networks via continual learning. In: Proceedings of the 29th ACM international conference on information & knowledge management, pp 1515–1524

Wang C, Qiu Y, Gao D, Scherer S (2022) Lifelong graph learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 13719–13728

Welling M, Kipf TN (2016) Semi-supervised classification with graph convolutional networks. In: J. international conference on learning representations (ICLR 2017)

Huakang L u, Huang D, Youyi S, Jiang D, Zhou T, Jing Qin (2020) St-trafficnet: a spatial-temporal deep learning network for traffic forecasting. Electronics 9(9):1–17

Kong X, Zhang J, Wei X, Xing W, Wei L u (2022) Adaptive spatial-temporal graph attention networks for traffic flow forecasting. Appl Intell 52(4):4300–4316

Young T, Hazarika D, Poria S, Cambria E (2018) Recent trends in deep learning based natural language processing. IEEE ComputIntell Magazine 13(3):55–75

Liu G, Guo J (2019) Bidirectional lstm with attention mechanism and convolutional layer for text classification. Neurocomputing 337:325–338

Zakir Hossain MD, Sohel F, Shiratuddin MF, Laga H (2019) A comprehensive survey of deep learning for image captioning. ACM Computing Surveys (CsUR) 51(6):1–36

Huang B, Tan G, Song Y, Zhou T, Dou H, Cui Z (2022) Mutual gain adaptive network for segmenting brain stroke lesions. Appl Soft Comput

Zhou T, Dou H, Tan J, Song Y, Wang F, Wang J (2022) Small dataset solves big problem: an outlier-insensitive binary classifier for inhibitory potency prediction knowledge-based systems

Dou H, Tan J, Wei H, Wang F, Yang J, Ma X-G, Wang J, Teng Z (2022) Transfer inhibitory potency prediction to binary classification: a model only needs a small training set computer methods and programs in biomedicine

Zheng L, Guo N, Chen W, Jin Y u, Jiang D (2020) Sentiment-guided sequential recommendation. In: Proceedings of the 43rd international ACM SIGIR conference on research and development in information retrieval, pp 1957–1960

Fang W, Zhuo W, Yan J, Song Y, Jiang D, memory Teng Zhou. (2022) Attention meets long short-term a deep learning network for traffic flow forecasting. Physica A: Stat Mech Appl 587:126485

Huang R, Huang C, Liu Y, Dai G, Kong W (2020) Lsgcn: long short-term traffic prediction with graph convolutional networks. IJCAI, pp 2355–2361

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Łukasz, Polosukhin I (2017) Attention is all you need. In: Advances in neural information processing systems, pp 5998–6008

Zonghan W u, Pan S, Long G, Jiang J, Chang X, Zhang Chengqi (2020) Connecting the dots Multivariate time series forecasting with graph neural networks. In: Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery & data mining, pp 753–763

Lippi M, Bertini M, forecasting Paolo Frasconi. (2013) Short-term traffic flow an experimental comparison of time-series analysis and supervised learning. IEEE Trans Intell Transp Syst 14(2):871–882

Keskar NS, Mudigere D, Nocedal J, Smelyanskiy M, Tang PTP (2017) On large-batch training for deep learning: generalization gap and sharp minima. In: 5th international conference on learning representations, ICLR 2017, Toulon, France, 24-26, April 2017, conference track proceedings. OpenReview.net

Smith LN (2017) Cyclical learning rates for training neural networks. In: 2017 IEEE Winter conference on applications of computer vision (WACV). IEEE, pages 464–472

Bai Lei, Yao Lina, Li Can, Wang Xianzhi, Wang Can (2020) Adaptive graph convolutional recurrent network for traffic forecasting. In: Advances in neural information processing systems 33: annual conference on neural information processing systems 2020, NeurIPS 2020, 6-12, December 2020, virtual

Acknowledgements

The data that support the findings of this study are available from the corresponding author upon reasonable request. Huaying Li and Shumin Yang contribute equally. This work was supported by the National Natural Science Foundation of China (No. 61902232), the 2022 Guangdong Basic and Applied Basic Research Foundation (No. 2022A1515011590), the STU Incubation Project for the Research of Digital Humanities and New Liberal Arts (No. 2021DH-3), the 2020 Li Ka Shing Foundation Cross-Disciplinary Research Grant (No. 2020LKSFG05D), the Innovation School Project of Guangdong Province (No. 2017KCXTD015), and the Open Fund of Guangdong Provincial Key Laboratory of Infectious Diseases and Molecular Immunopathology (No. GDKL202212).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Huaying Li and Shumin Yang contribute equally.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, H., Yang, S., Song, Y. et al. Spatial dynamic graph convolutional network for traffic flow forecasting. Appl Intell 53, 14986–14998 (2023). https://doi.org/10.1007/s10489-022-04271-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-022-04271-z