Optimization Stories: KKT Conditions

Connect KKT conditions with optimality in optimization problems

Key Concepts: Complementary Slackness, Duality, Duality Gap, KKT conditions, Lagrangian Function, Lagrange multipliers, Simplex Algorithm

The goal of this article is to help readers understand what role the key concepts above play in the search for optimality in optimization problems. Throughout this article, I will cover all the concepts in a top-down way, starting with the fundamental question: what is the goal of optimization.

Goal of Optimization

The goal of an optimization problem is to find the best solution given certain constraints, or find a “good” enough solution within certain amount of time, such as training the parameters in a deep neural network model to minimize its loss function (a non-convex optimization problem). The following linear programming problem is an example of the former case.

For linear programming problem, optimal solution (if exists) can be found by the efficient Simplex algorithm. For problem (I), optimal solution is

Simplex algorithm and some other optimization algorithms are iterative algorithms that, from a start point x₀, update x in each iteration through

until the optimal solution is found, or some stopping condition is met.

But how do we know the current solution is the optimal solution? This is where KKT conditions chime in.

KKT Conditions

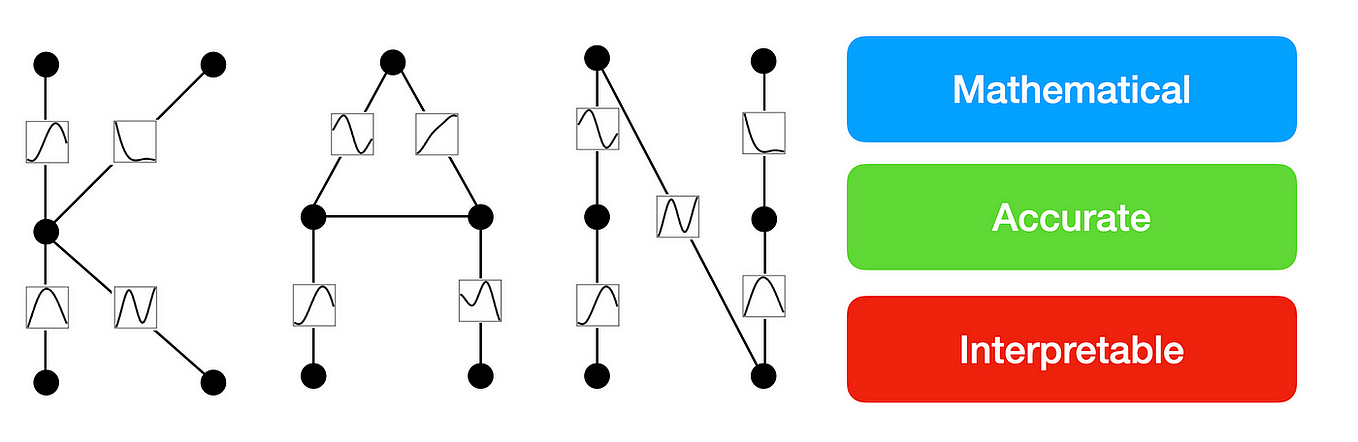

Karush-Kuhn-Tucker (KKT) conditions form the backbone of linear and nonlinear programming as they are

- Necessary and sufficient for optimality in linear programming.

- Necessary and sufficient for optimality in convex optimization, such as least square minimization in linear regression.

- Necessary for optimality in non-convex optimization problem, such as deep learning model training.

KKT Conditions for Linear Program with Inequality Constraints

Consider the following problem (II):

KKT conditions: x is optimal to the foregoing problem if and only if conditions (1)-(3) hold true.

Condition (1) merely states that x is a feasible solution, which is usually referred as primal feasibility. Condition (2), usually referred as dual feasibility, states that x is also a feasible solution to the dual problem. λ and v are called the Lagrangian multipliers (or dual variables) corresponding to the constraints Ax ≥ b and x ≥ 0, respectively. Finally, condition (3) is called complementary slackness. Specifically, λ(Ax - b) = 0 indicates that, at the optimal solution x, without loss of generality, either λᵢ = 0 or Aᵢx = bᵢ (i.e., the ith inequality constraint is binding). Naturally, vx = 0 can be interpreted in a similar way.

Revisiting problem (I)

For linear program, optimal solution (if exists) always resides on the vertex. In this case, [0, 0], [4, 0], [4/3, 8/3], and [0, 2] are the 4 candidates. We can then use KKT conditions to verify which one is the optimal solution.

For [0, 0], the binding constraints are x₁≥0 and x₂≥0, so w₁=w₂=0 by complementary slackness. However, the dual feasibility condition λA+v=c leads to v=(-1, -3), which violates another dual feasibility condition v≥0.

Similar process can be applied to the other 3 vertex points until we verify that [4/3, 8/3] satisfies all the KKT conditions, and claim it is the optimal solution.

KKT Conditions for Linear Program with Equality Constraints

Consider the following problem (III):

KKT conditions: x is optimal to the foregoing problem if and only if conditions (4)-(6) hold true.

After seeing the KKT conditions (1)-(3) for problem (II), (4)-(6) become intuitive. Given that constraints Ax ≥ b in problem (I) now become all binding cases Ax=b, the corresponding Lagrangian multipliers λ become unrestricted.

KKT Conditions for Nonlinear Problems

Consider the following nonlinear optimization problem (IV):

KKT conditions: conditions (7)-(9) are necessary for x to be the optimal solution for the foregoing problem (IV). If (IV) is convex, (7)-(9) become also the sufficient conditions.

(7), similar to (1), is the primal feasibility condition. Let’s jump to condition (9) first, the complementary slackness condition for inequality constraints gᵢ(x) ≤ 0, corresponds to condition (3) in problem (II).

Finally, (8) is the dual feasibility condition to guarantee the feasibility in dual problem. It is not difficult to find out that condition (3) in problem (II) is a special case of (8), with

With λ and v respectively represent the Lagrangian multipliers for Ax ≥ b and x ≥ 0, we have

The first part of condition (8) is also called first order condition for nonlinear optimization problem.

It is worth mentioning, or you may have already noticed, if the inequality constraints in the problem are in “≥” format, then the corresponding Lagrangian Multipliers are non-positive. Alternatively, you can also change the first order condition to the following format to keep them non-negative.

So far, we have reviewed KKT conditions for various problems. While primal feasibility conditions are quite intuitive, questions arise as how we should interpret dual feasibility and complementary slackness at the optimality.

Duality

An optimization problem can be presented from two perspectives, the primal problem or the dual problem. Sometimes, a problem can be solved in a much more efficient way in its dual format as oppose to its primal format.

For a minimization problem, any feasible solution in its dual version provides a lower bound to its primal version solution. Hence, the difference between dual optimal solution and primal optimal solution is known as Duality Gap.

For linear problems and convex nonlinear problems, duality gap is zero. For these problems, a solution cannot be feasible to both primal and dual unless it’s the optimal solution. Therefore, searching for optimality is equivalent to searching for a solution that are feasible in both primal and dual.

Linear Problem

Revisiting problem (II)

The dual problem of (II) is

By the problem definition, we have the following deduction

Because duality gap is zero for linear problem, we have

which is the complementary slackness condition in primal problem.

Nonlinear Problem

Revisiting problem (IV)

The dual problem of (IV) is given

The objective function of dual problem is also known as Lagrangian Dual Function. Recall that the first order derivative of Lagrangian Function, along with the two sets of constraints, are the dual feasibility and complementary slackness conditions for the nonlinear case.

Economic Interpretation of Complementary Slackness

Complementary Slackness can also be interpreted in an economic way. Recall that, at the optimality, Complementary Slackness requires only one of the two following cases hold true for a constraint (in a non-degeneracy situation):

- The constraint is binding (i.e., equality)

- The Lagrangian multiplier of this constraint is zero

If we think of the constraint as a resource constraint (e.g., materials, space, time, etc.) and the Lagrangian multiplier as the value or price of that resource, then Complementary Slackness is saying:

- If the constraint is binding, it means we are running out of this resource when maximizing the objective. This means that, should we have more of this resource, our objective value would increase. Hence, this resource is valuable and its price (Lagrangian multiplier) should be positive. That’s why sometimes Lagrangian multiplier is also called shadow price.

- If the constraint is non-binding, then this means this resource is useless as far as the underlying problem is concerned. Hence, its price (Lagrangian multiplier) should be zero.