Think:Act Magazine "It’s time to rethink AI"

Two computer scientists, two visions

Historical questions around Artificial Intelligence vs Intelligence Augmentation are still relevant today

by John Markoff

Artwork by Carsten Gueth

Sixty years ago, computing split into two visions of how a then-nascent technology would grow – by seeking to expand the power of the human mind, or working to replace it. While personal computers have since become indispensable, many technologists now believe that new artificial intelligence advances are a potential threat to human existence. But what if the threat is not to our existence, but rather to what it means to be human?

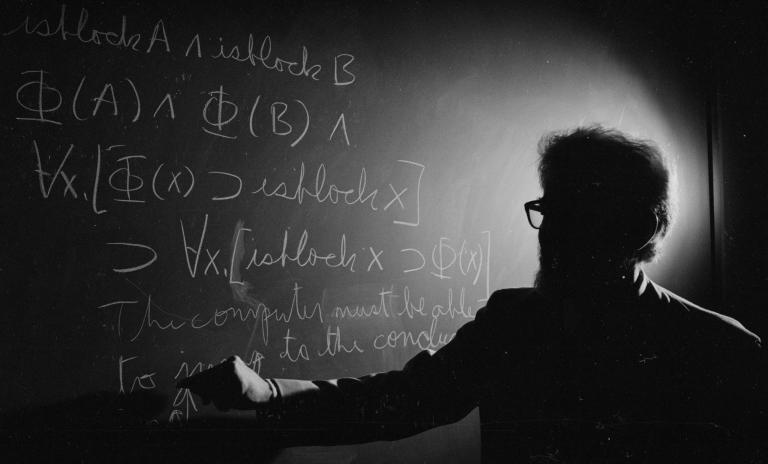

The modern computer world first came into view in the early 1960s in two computer research laboratories located on either side of Stanford University, each pursuing diametrically opposed visions of the future. John McCarthy, the computer scientist who had coined the term "artificial intelligence," established SAIL, the Stanford AI Laboratory, with the goal of designing a thinking machine over the next decade. The aim was to build a machine to replicate all of the physical and mental capabilities of a human. At the same time, on the other side of campus, another computer scientist, Douglas Engelbart, was engaged in creating a system to extend the capabilities of the human mind. He coined the phrase "intelligence augmentation," or IA.

The computer world had been set on two divergent paths. Both laboratories were funded by the Pentagon, yet their differing philosophies would create a tension and a dichotomy at the dawn of the interactive computing age: One laboratory had set out to extend the human mind; the other to replace it. That tension, which has remained at the heart of the digital world until this day, also presents a contradiction. While AI seeks to replace human activity, IA, which increases the power of the human mind, also foretells a world in which fewer humans are necessary. Indeed, Engelbart's vision of IA was the first to take shape, with the emergence of the personal computer industry during the 1970s, even though he was initially seen as a dreamer and an outsider. Steve Jobs perhaps described it best when he referred to the PC as a "bicycle for the mind."

John McCarthy was a pioneer in computer science and interactive computing. He is known as one of the founders of AI.

Six decades after the two labs began their research, we are now on the cusp of realizing McCarthy's vision as well. On the streets of San Francisco, cars without human drivers are a routine sight and Microsoft researchers recently published a paper claiming that in the most powerful AI systems, known as large language models or chatbots, they are seeing "sparks of artificial general intelligence" – machines with the reasoning powers of the human mind.

To be sure, AI researchers' recent success has led to an acrimonious debate over whether Silicon Valley has become overwrought and once more caught up in its own hype. Indeed, there are some indications that the AI revolution may be arriving more slowly than advocates claim. For example, no one has figured out how to make chatbots less predisposed to what are called "hallucinations" – one way to describe their disturbing tendency to just make facts up from thin air.

Even worse, some critics charge that perhaps more than anything, the latest set of advances in chatbots has unleashed a new tendency to anthropomorphize human-machine interactions – that very real human tendency to see ourselves in inanimate objects, ranging from pet rocks to robots to software programs. In an effort to place the advances in a more restricted context, University of Washington linguist Emily Bender coined the phrase "stochastic parrots," suggesting that superhuman capabilities are more illusory than real.

"We understand human mental processes only slightly better than a fish understands swimming."

Whichever the case, the Valley is caught in a frenzy of anticipation over the near-term arrival of superhuman machines, and technologists are rehashing all the dark visions of a half-century of science fiction lore. From killing machines like the Terminator and HAL 9000 to cerebral lovers like the ethereal voice of Scarlett Johansson in the movie Her, a set of fantasies about superhuman machines has ominously reemerged, and many of the inventors themselves are now calling on governments to quickly regulate their industry. What is fancifully being called "the paperclip problem" – the specter of a superintelligent machine that destroys the human race while in the process of innocently fulfilling its mission to manufacture a large number of paperclips – has been advanced to highlight how artificial intelligence will lack the human ability to reason about moral choices.

But what if all the handwringing about the imminent existential threat posed by artificial intelligence is misplaced? What if the real impact of the latest artificial intelligence advances is not about the IA vs AI dichotomy at all, but rather about some strange amalgam of the two that is already transforming what it means to be human? This new relationship is characterized by a more seamless integration of human intelligence and machine capabilities, with AI and IA merging to transform the nature of human interaction and decision-making. More than anything else, the sudden and surprising arrival of natural human language as a powerful interface between humans and computers marks this as a new epoch.

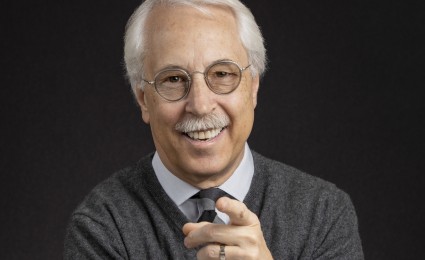

Douglas Engelbart was a computer and internet pioneer. A founder of the field of human-computer interaction, he is also known as the creator of the computer mouse and a developer of hypertext.

Mainframe computers were once accessed only by a small cadre of corporate, military and scientific specialists. Gradually, as modern semiconductor technology evolved and microprocessor chips became more powerful and less expensive at an accelerating rate – exponential improvement has not only meant that computing has gotten faster, faster, but also cheaper, faster – each new generation of computing has reached a larger percentage of the human population.

In the 1970s, minicomputers extended the range of computing to corporate departments; a decade later personal computers reached white-collar workers, home computers broadened computing into the family room and the study and finally smartphones touched half the human population. We are now seeing the next step in the emergence of a computational fabric that is blanketing the globe – having mastered language, computing will be accessible to the entire human species.

"The key thing about all the world's big problems is that they have to be dealt with collectively. If we don't get collectively smarter, we're doomed."

In considering the ramifications of the advent of true AI, the television series Star Trek is worth reconsidering. Star Trek described an enemy alien race known as the Borg that extended its power by forcibly transforming individual beings into drones by surgically augmenting them with cybernetic components. The Borg's rallying cry was "resistance is futile, you will be assimilated." Despite warnings by computer scientists going at least as far back as Joseph Weizenbaum's 1976 book Computer Power and Human Reason – that computers could be used to extend but should never replace humans – there has not been enough consideration given to our relationship to the machines we are creating.

The nature of what it means to be human was well expressed by philosopher Martin Buber in his description of what he called the "I - thou" relationship. He defined this as when humans engage with each other in a direct, mutual, open and honest way. In contrast, he also described an "I - it" relationship, in which people deal with inanimate objects and, in some cases, treat other humans as objects to be valued only for their usefulness. Today we must add a new kind of relationship, which can be described as "I - it - thou" and which has become widespread in the new networked digital world.

As computer networks have spread human communication around the globe, a computational fabric has quickly emerged, ensuring that most social, economic and political interaction is now mediated by algorithms. Whether it is commerce, dating or, in the Covid-19 era, meetings for business via video chat, most human interaction is no longer face-to-face, but rather passes through a computerized filter that defines whom we meet and what we read, and to a growing degree synthesizes a digital world that surrounds us.

What are the consequences of this new digitalized society? The advent of facile conversational AI systems is heralding the end of the advertising-funded internet. There is already a venture capital-funded gold rush underway as technology corporations race to develop chatbots that can both interact with and convince – that is, manipulate – humans as part of modern commerce.

At its most extreme is Silicon Valley man-boy Elon Musk, who both wants to take civilization to Mars and simultaneously warns us that artificial intelligence is a growing threat to civilization. In 2016 he founded Neuralink, a company intent on placing a spike in human brains to create a brain-computer interface. Supposedly, according to Musk, this will allow humans to control AI systems, thereby warding off the domination of our species by some future Terminator-style AI. However, it seems the height of naïveté to assume that such a tight human-machine coupling will not allow just the opposite to occur as well.

Computer networks are obviously two-way streets, something that the United States has painfully learned in the past seven years as its democracy has come under attack by foreign agents intent on spreading misinformation and political chaos. The irony, of course, is that just the opposite was originally believed – that the internet would be instrumental in sowing democracy throughout the world.

It will be essential for society to maintain a bright line between what is human and what is machine. As artificial intelligence becomes more powerful, tightly coupling humans with AI risks creating dangerous dependencies, diminishing human agency and autonomy as well as limiting our ability to function without technological assistance. Removable interfaces will preserve human control over when and how we utilize AI tools. That will allow humans to benefit from AI's positives while mitigating risks of over-reliance and loss of independent decision-making.

A bright line won't be enough. Above all, we must resist the temptation to humanize our new AI companions. In the 1980s, Ronald Reagan popularized the notion "trust, but verify" in defining the relationship between the United States and the Soviet Union. But how do you trust a machine that does not have a moral compass? An entire generation must be taught the art of critical thinking, approaching our new intellectual partners with a level of skepticism that we have in the past reserved for political opponents. The mantra for this new age of AI must remain, "verify, but never trust."